Tech Project: AI miniPC (Minisforum X1 Pro) 1.0

For many people, tech products are on a 3-4 year lifecycle, assuming that nothing catastrophic occurs, like the COVID and cryptocurrency demand spikes.

Four years ago, I got a Lenovo Legion laptop with a Ryzen 7 5800H and a Radeon RX 6600M to replace a Dell G5 AMD laptop that failed right after its one-year warranty expired. The Lenovo Legion was, and still is, pretty capable at the lighter code compilation and graphics workloads I find myself doing, but it has three major constraints:

- Limited cooling due to the laptop form factor.

- No real ability to do AI workloads.

- Limited VRAM.

On the other hand, I have a mid-tower desktop PC containing a Ryzen 7 7800X3D and a Radeon RX 7900 XTX. This is more than capable of handling AI workloads and has plenty of cooling. There are two major constraints on this system:

- It uses a lot of energy.

- It's in a separate room.

Even with a NAS to facilitate file transfers and the mindset benefits of two separate workspaces, it's massively inconvenient to have to work in two locations. The constant back-and-forth eats up time, especially when a workload on the gaming PC takes a while. And for text-based AI work, the RX 7900 XTX is massive overkill: it provides fantastic performance and a 24GB VRAM buffer, but at a high power draw for a trivial task.

When I heard about Strix Halo via tech news and the leak channel Moore's Law is Dead, the idea of a mobile workstation with a lot of RAM was appealing. Unfortunately, the most future-proof configurations (the ones with the most RAM) cost $2000, and $2000 is a lot to invest in any system.

So I needed to find an alternative.


The Least Worst of All Worlds

Let's start with what I chose and work back to the why.

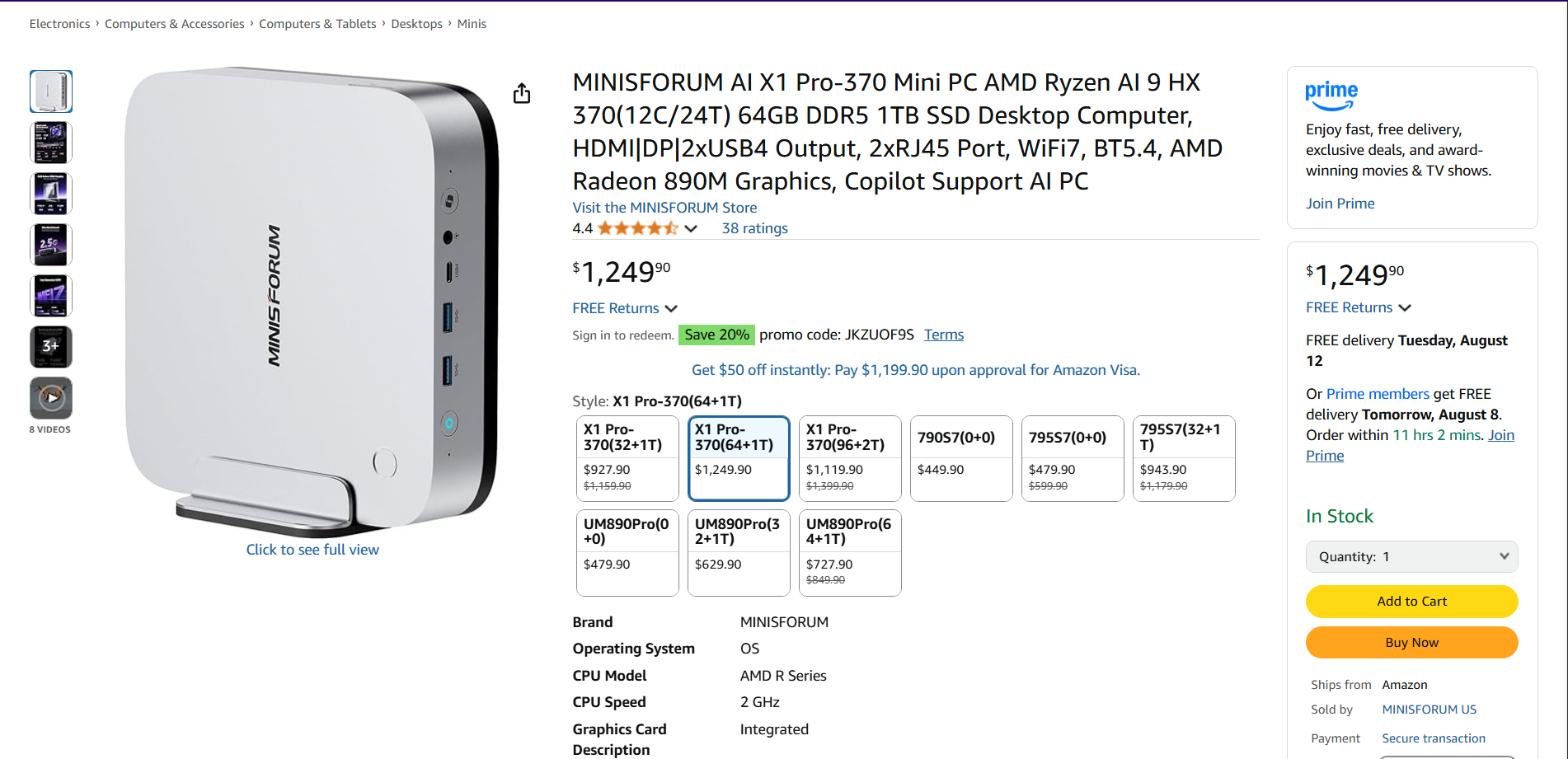

The MINISFORUM AI X1 Pro-370 Mini PC (hereafter the X1 Pro) is a Strix Point-based miniPC. Strix Point is the second tier of AMD's AI-focused APUs, which combine a powerful CPU and a GPU on the same chip. It predates the Strix Halo line by several months and has less impressive specifications overall.

The feature set in the product blurb is as follows:

【Leading AI Mini PC】MINISFORUM AI X1 Pro-370 Mini PC comes with AMD Ryzen AI 9 HX 370 processor, which uses AMD's latest generation Zen 5 architecture. It has 12 Cores and 24 Threads, the boost clock is up to 5.1GHz. The overall processor performance is up to 80 TOPS, and the NPU performance reaches up to 50 TOPS. AMD Ryzen AI enables improved productivity, advanced collaboration, and improved efficiency.

【AMD Radeon 890M Graphics】The X1 Pro Micro Computer equipped with AMD Radeon 890M Graphics which built on the new generation of RDNA 3.5 architecture AMD graphics, it brings ultra-high frame rate experiences and advanced content creation features anywhere and delivers staggering performance. It can handle all your computing and multimedia tasks efficiently.

【Support Copilot】This Mini PC supports Copilot. Copilot is an AI companion that works anywhere and intelligently adapts to your needs, helps you inspire writing inspiration and summarize long articles, it also can helps you work productive, increase your creativity and stay connected to the people and things in your life.

【Dual 2.5G Lan Port and Wi-Fi 7 Support】It comes with Two 2.5G Lan Ports for wired connection and Wi-Fi 7 / BT5.4 for wireless connection, which increased the network speed greatly and expand its functions and improved performance of computer to a large extent and allows you to use more networks such as software routers (OpenWRT / DD-WRT / Tomato etc.), firewalls, NAT, network isolation etc.

【OCulink Support】This mini pc was equipped with a OCulink port. OCuLink is a standard for externally connecting PCI Express, the speed is PCIe4.0 x4=64G. With this port, you could install external GPU with faster support speeds compared to Thunderbolt 4 and USB4. Note: *Non-hot-swappable, OCulink requires one M.2 2280 PCIe4.0 SSD slot*.

【Four Video Outputs】 This X1 Pro Computer is Equipped with 1x HDMI, 1x DP and 2x USB4 Outputs, you can connect 4 displays at the same time. Increase work efficiency by expanding multiple workspaces. It is used in fields that require high-performance computing and graphics processing, including digital signage and securities trading, as well as work that uses CAD, such as engineering design, scientific calculations, animation production, and post-production for movies and television. These are typically used by individual users or experts in their field.

【Expandable Storage】 This Micro Computer has pre-installed 64GB DDR5 RAM(Dual SO-DIMM Slot, up to 96GB) and 1TB M.2 2280 PCIe4.0 SSD. You could expand the SSD up to 4TB. There are two more PCIe4.0 SSD slots available for expanding the storage, total up to 12TB. Without worrying about lack of capacity, you can run software smoothly, watch and store large-scale movies, photos without any stress.

As the mid-tier option of the three configurations of this miniPC, this model's main weakness is its 1TB SSD. AI models can run to tens of gigabytes each, and because certain AI-related applications cannot store data on other drives, a lot of space on the primary drive will go to them. Even worse, quality 2TB drives have not yet dropped to $100 or less, so replacing the drive is an expensive proposition, even if you sell the original drive on the used market.

However, the 64GB of RAM means that you can allocate at least 32GB to the GPU, which benefits AI and graphics workloads. (Confusingly, Minisforum does not offer a 48GB RAM option.) This socketed DDR5 is slower than the LPDDR5X soldered onto Strix Halo boards and some competing Strix Point miniPC motherboards, but you can upgrade it for speed and/or capacity later, which is an advantage over the system's lifespan.
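As a rough illustration of why both the SSD size and the GPU memory allocation matter, a common rule of thumb puts a model's weight size at roughly its parameter count times its average bits per weight, divided by 8. The sketch below assumes that rule of thumb and nothing more: it ignores the KV cache and runtime overhead, the helper names are made up for this example, and the "headroom" value is a hypothetical allowance you would pick yourself.

```sh
#!/bin/sh
# Rule-of-thumb LLM weight size (an approximation, not an exact formula):
#   size_GB ~= parameters_in_billions * bits_per_weight / 8
# Ignores the KV cache, context length, and runtime overhead.

# Print the approximate size of a model's weights in GB.
# $1 = parameter count in billions, $2 = average bits per weight
weights_gb() {
    awk -v p="$1" -v b="$2" 'BEGIN { printf "%.1f\n", p * b / 8 }'
}

# Succeed (exit 0) if the weights plus a reserved headroom fit in the
# GPU memory allocation, fail (exit 1) otherwise.
# $1 = params (billions), $2 = bits/weight, $3 = GPU memory (GB),
# $4 = headroom in GB for KV cache and overhead (a value you choose)
fits_in_gpu_memory() {
    awk -v p="$1" -v b="$2" -v mem="$3" -v head="$4" \
        'BEGIN { if (p * b / 8 + head <= mem) exit 0; exit 1 }'
}

# Example: a 20B model vs. a 70B model, both at 4 bits/weight,
# against a 32GB GPU allocation with 4GB of headroom.
for params in 20 70; do
    if fits_in_gpu_memory "$params" 4 32 4; then
        echo "${params}B: ~$(weights_gb "$params" 4) GB, fits"
    else
        echo "${params}B: ~$(weights_gb "$params" 4) GB, does not fit"
    fi
done
```

Under these assumptions, a 20B-parameter model at 4 bits per weight needs about 10GB for its weights and fits comfortably in a 32GB allocation, while a 70B model at the same precision (about 35GB) does not.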

It also has three NVMe SSD slots. This matters because the OCuLink port, which allows an external GPU to be connected, takes over the PCIe lanes of one of those slots, leaving it unusable for an SSD. This gives the X1 Pro an advantage over other Strix Point-based miniPCs, which tend to have only two NVMe SSD slots.
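The listing's "PCIe4.0 x4=64G" figure is the raw signaling rate (4 lanes x 16 GT/s). A minimal sketch of the arithmetic, assuming the 128b/130b line encoding used by PCIe 3.0 and later and ignoring packet overhead, shows that those four lanes carry roughly 63 Gb/s, or about 7.9 GB/s, whether they feed an eGPU or an NVMe SSD. The function names are illustrative only.

```sh
#!/bin/sh
# Usable PCIe link bandwidth, e.g. for OCuLink's PCIe 4.0 x4 link.
# PCIe 3.0 and later use 128b/130b encoding: 128 of every 130 bits
# on the wire carry data. Packet/protocol overhead is NOT subtracted,
# so real-world throughput will be somewhat lower than these figures.

# $1 = transfer rate per lane in GT/s (8 for PCIe 3.0, 16 for PCIe 4.0)
# $2 = number of lanes
pcie_gbit_per_s() {
    awk -v rate="$1" -v lanes="$2" \
        'BEGIN { printf "%.2f\n", rate * lanes * 128 / 130 }'
}

# Same calculation, converted from gigabits to gigabytes per second.
pcie_gbyte_per_s() {
    awk -v rate="$1" -v lanes="$2" \
        'BEGIN { printf "%.2f\n", rate * lanes * 128 / 130 / 8 }'
}

# Prints: PCIe 4.0 x4: 63.02 Gb/s = 7.88 GB/s
echo "PCIe 4.0 x4: $(pcie_gbit_per_s 16 4) Gb/s = $(pcie_gbyte_per_s 16 4) GB/s"
```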

Overall AI performance is hard to assess, for two main reasons:

- Neural Processing Units (NPUs) are generally not supported by AI software.

- Total AI performance from combining NPU + GPU is not available.

There seems to be a chicken-and-egg problem with NPUs. The hardware vendors expected the open source AI developer community to develop the software integrations for the NPUs, but that never happened. So now the vendors (AMD and Intel) are developing the software required to run AI on the NPU.

Since this is the best NPU outside of Strix Halo, I plan to test NPU-only and NPU + GPU performance where possible.

In terms of price, this graph tells the story quite well:

The device has basically ping-ponged between a few cents below $1000 and a few cents below $1250, with occasional coupons to bring it back down to $1000. (Camel Camel Camel does not seem to track coupons.) Therefore, buying it during the Florida sales tax holiday on computer hardware under $1500 made this the best price/performance option I could choose.

The Alternatives

I had two other main options:

- Gut an old HP office desktop and replace the internals.

- Build a new small PC from scratch.

The old HP desktop was bought around 2012-2013 for my grandmother's use and came into my possession after she moved several years later. Although its Intel i3 processor was barely adequate even at the time, the case was an ideal size to house powerful hardware while also serving as a mounting point for its own monitor. However, there were two main problems.

- The case only has half-height slots for PCI-E devices.

- The power supply uses an uncommon form factor.

Although the case supports regular-sized motherboards and has a great deal of internal volume, its two flaws are deal-breakers. Half-height PCI-E slots dramatically limit which add-in cards you can use in your computer, especially graphics cards, which are useful for AI and other workloads.

Even worse is the power supply, which uses the expensive, hard-to-find TFX form factor. With a 180W rating and a single SATA power connector, it would have to be replaced with at least a 300W unit to accomplish anything useful.

Since that would leave me with nothing but a case, I decided to look at building a new PC from scratch.

Because I don't live in Miami, I don't have access to Micro Center, a brick-and-mortar PC hardware store with many great deals on a regular basis. After some quick research, I found that ~$300 was the minimum I could expect to pay for an M-ATX motherboard and CPU combination, especially if I wanted WiFi. Minisforum motherboards with integrated high-end mobile CPUs, like the BD795M or the BD795i SE, offered incredible CPU price/performance, but cost between $364 and $384 and lacked WiFi.

In fact, the big problem with building a PC at the moment is that anything good needs a 16GB GPU. Since those cards are in high demand after years of VRAM undersupply, even the cheapest models cost over $300.

So the costs added up quickly. Since I needed VRAM more than raw GPU performance, a complete system with all the hardware included and good-enough graphics performance became the more appealing option.

Setting up the X1 Pro

Once I received the miniPC from Amazon, I began configuring it for my uses.

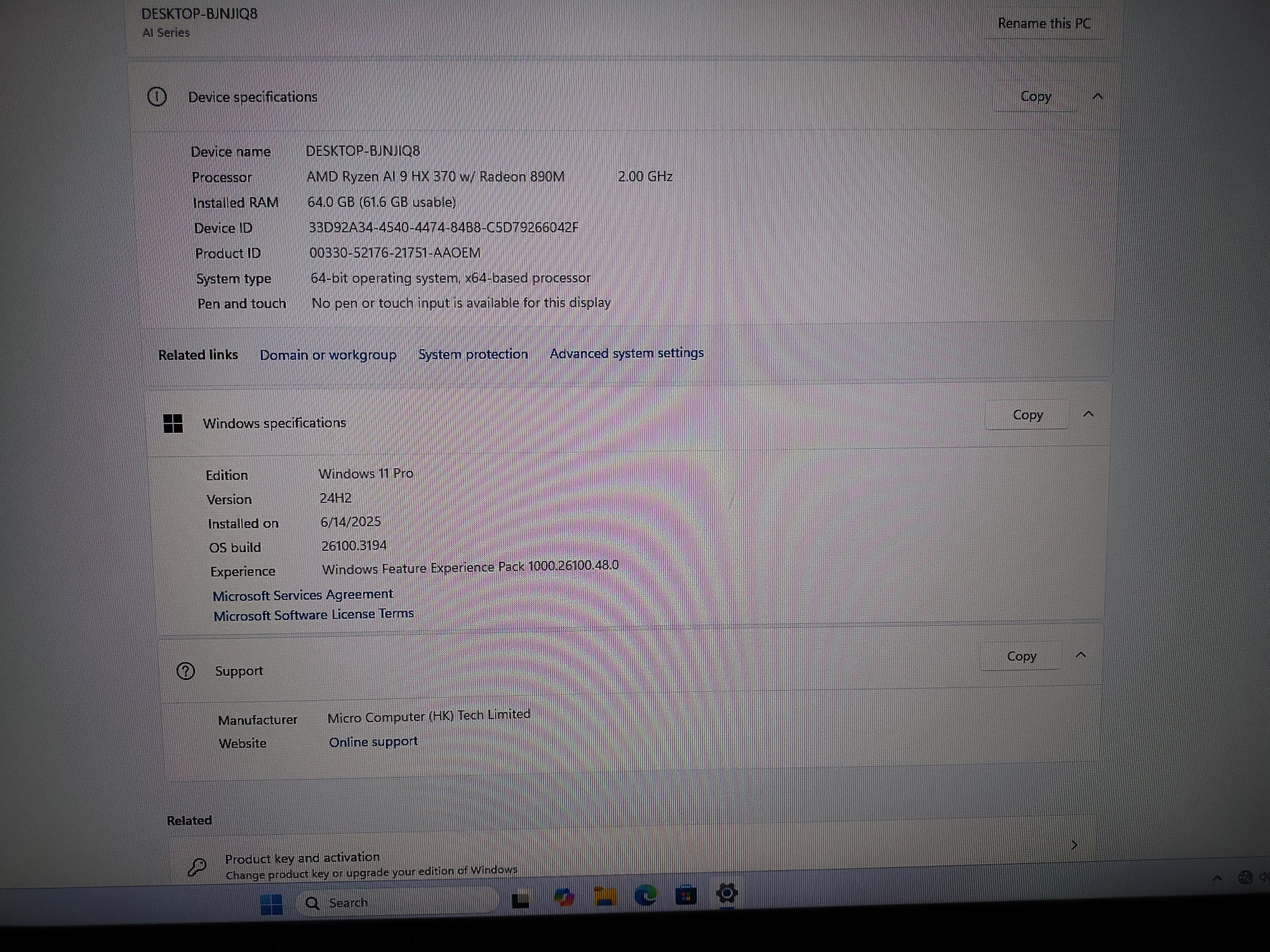

The first thing I did was run through the Windows 11 setup to figure out which version of the OS was installed. The store listings and reviews didn't specify which Windows 11 version Minisforum installed on the PC, which prevented me from preparing a clean, optimized version of the OS to install. Checking the About PC menu, I discovered the following:

Armed with the knowledge that I needed Windows 11 Pro, I followed this Chris Titus Tech guide to download and optimize a Windows 11 Pro ISO. I also exported my laptop's list of installed software using Chris Titus' WinUtil.

Once the ISO was prepared, I placed it on my Ventoy USB stick and installed it on the X1 Pro, overwriting the existing installation. Unfortunately, I had to track down device-specific drivers to get the WiFi working again, which slowed things down.

Once those were installed, I updated Windows and installed the latest AMD graphics and chipset drivers. After a reboot, I imported the laptop's software list, deselected a few programs, then auto-installed everything via WinGet. After that, I applied the recommended settings to disable telemetry and other background tasks.

The process took a little over 3 hours, primarily due to the need to find an alternate, safe download source for Windows 11.

In addition, I made sure to access the BIOS/UEFI and set 32GB of RAM to be dedicated GPU memory. The AMD driver software stack does allow for dynamic allocation of RAM between the CPU and GPU, but I felt it prudent to ensure that there was a 50/50 split in case of a workload that heavily utilized both components.

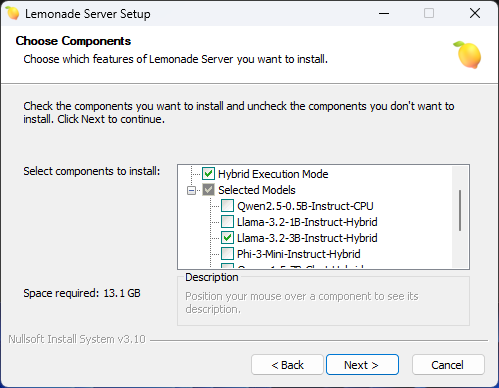

Afterwards, I installed Lemonade Server, AMD-developed software for hosting and running local LLMs on the machine's various processors. Most importantly, it can use the NPU and integrated GPU (iGPU) to run LLMs, which is a rarity in the software landscape.

However, installing it was a bit of an ordeal, as the Windows installer relies on PowerShell scripts. This required changing the Windows Developer settings, which I forgot to do on the first attempt. Also annoying were the default install directory, which I overrode, and the default LLM storage location, which I did not override and had to pin to Quick Access.

To serve as a Retrieval Augmented Generation (RAG) system and LLM interface, I installed AnythingLLM and copied three sets of documents from my gaming rig to the new system. Since Lemonade Server exposes an OpenAI-compatible API, I can easily connect the LLMs running in it to AnythingLLM, although switching models is tedious. (Because AnythingLLM lacks dedicated Lemonade support, going through the generic OpenAI API connection requires manually changing the model in AnythingLLM every time you load a different one.)
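One way to make that manual model switch less error-prone is to ask the server which model IDs it reports and copy the exact string. This is a minimal sketch using the standard OpenAI-style `GET /models` endpoint; the base URL `http://localhost:8000/api/v1` is an assumption about Lemonade's defaults, and `LEMONADE_BASE_URL`, `extract_model_ids`, and `list_models` are hypothetical names for this example only.

```sh
#!/bin/sh
# List the model IDs an OpenAI-compatible server (such as Lemonade Server)
# reports, so the exact name can be entered in AnythingLLM's model setting.
# ASSUMPTION: Lemonade's base URL is http://localhost:8000/api/v1. The
# LEMONADE_BASE_URL variable is a hypothetical override for this script only.
BASE_URL="${LEMONADE_BASE_URL:-http://localhost:8000/api/v1}"

# Read a /models JSON response on stdin and print each "id" value on its
# own line. This is a quick text match, not a real JSON parser (jq would be
# sturdier), so it would also pick up any nested "id" keys.
extract_model_ids() {
    grep -o '"id"[[:space:]]*:[[:space:]]*"[^"]*"' |
        sed 's/.*"\([^"]*\)"$/\1/'
}

# GET /models is the standard OpenAI-style model listing endpoint.
list_models() {
    curl -s "$BASE_URL/models" | extract_model_ids
}
```

With the server running, calling `list_models` should print one model ID per line, ready to paste into AnythingLLM's model field.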

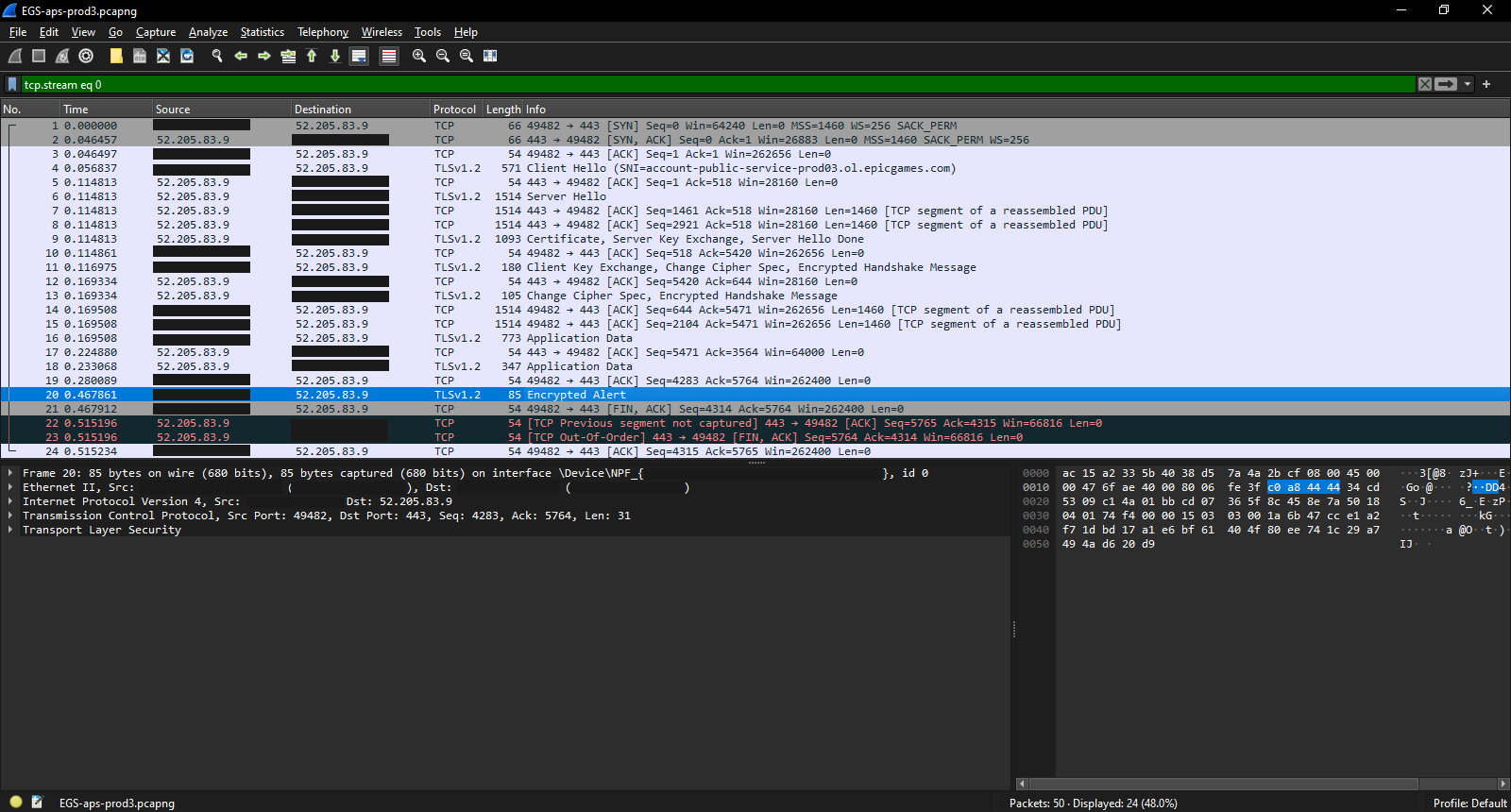

Installing an Unreal Engine 5.5.4 modding Software Development Kit (SDK) took more than 10 hours, due to two main factors:

- The Epic Games Store's inability to detect and add existing EGS library folders and associate the files in them with the library on that specific machine.

- Installing to a SATA SSD over USB 3.0.

Once that was complete, I was done setting up the X1 Pro for my intended uses.

Stress Testing the X1 Pro

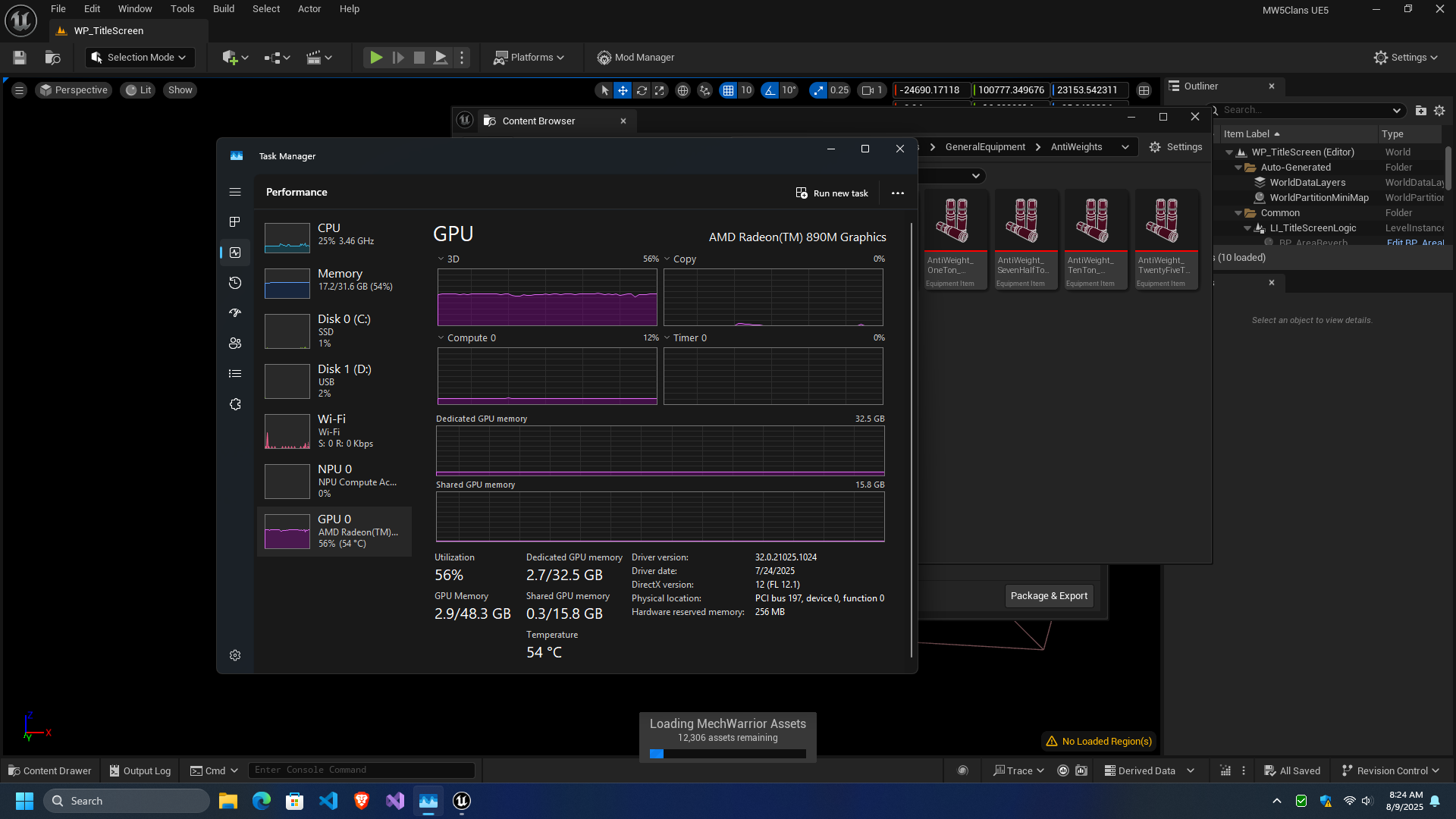

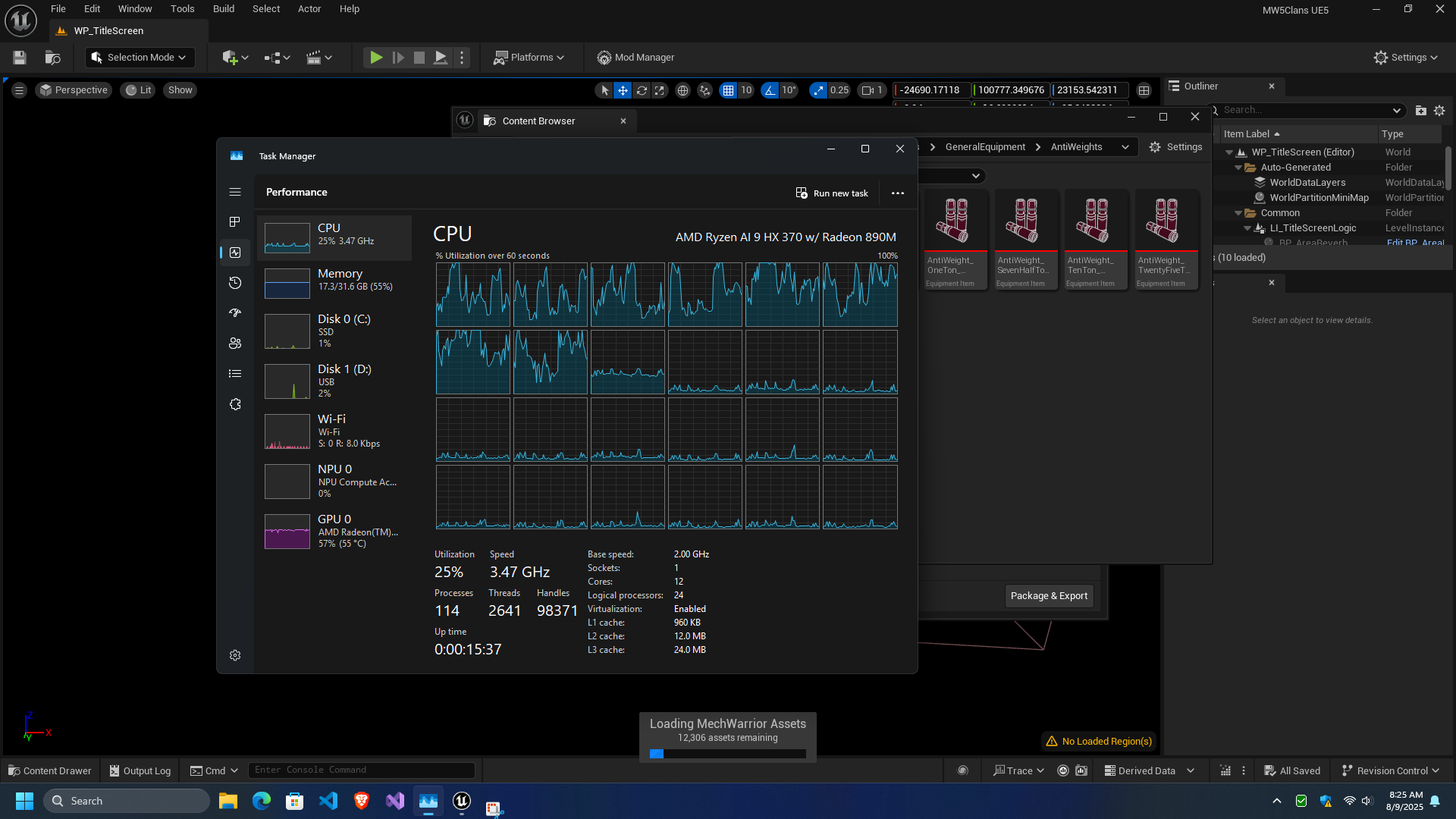

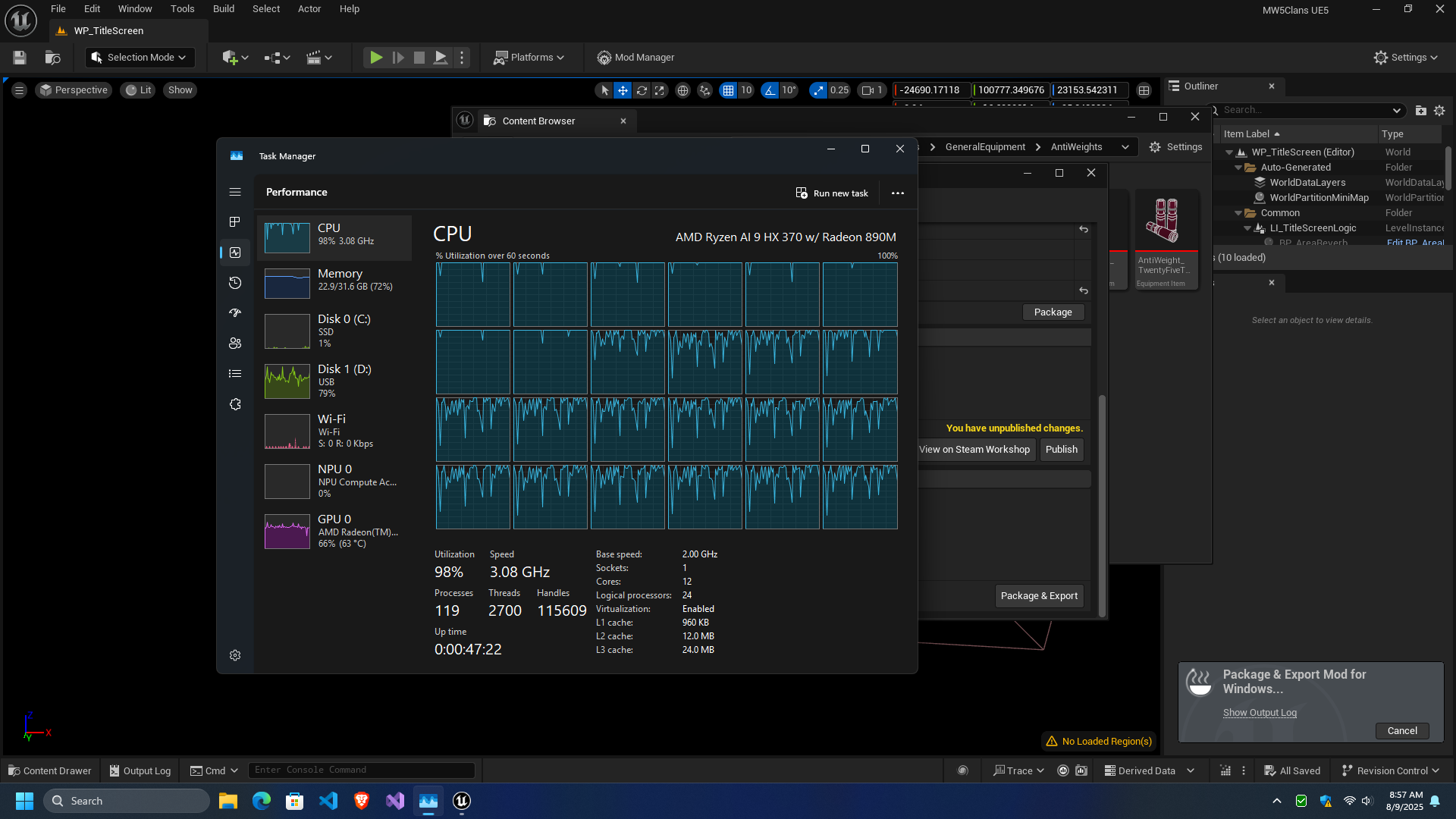

I decided to take a two-pronged approach to initial stress testing of the miniPC. The first prong was to run Unreal Engine 5.5.4, in the form of the MechWarrior 5: Clans modding SDK. The software places a fairly heavy CPU and GPU load even on a desktop like my Ryzen 7 7800X3D + RX 7900 XTX system, so if the miniPC can run it in any capacity, that is quite impressive.

As I discovered, the X1 Pro can run the modding SDK, but the load on the hardware varies widely depending on where in the process you take readings. For example, while GPU usage stays consistently in the 50-60% range, CPU utilization can spike sharply during the initial load, at times exceeding 80%.

However, the most punishing workload is packaging a mod for release. In this case, I had a simple mod consisting of two fairly small image files and around two dozen files that generate shop items. Generating the files to make the mod playable essentially maxed out the CPU. When I repeated the process with an added step to upload the files to Steam Workshop for hosting, the GPU driver crashed at some point after the upload completed.

It's not entirely clear why the GPU driver crashed, but a bug report was submitted to AMD. Thermal throttling may have contributed to the problem, which may require a third-party application like Universal x86 Tuning Application to address:

The second prong of the testing was LLM performance on a repeatable task. I intended to compare NPU + GPU and GPU-only performance by submitting this prompt to an LLM:

revise this code for FreeBSD to ensure iwlwifi0_wlan is enabled and remains functioning at all times:

```
#!/bin/sh
#
# PROVIDE: wpa_supplicant
# REQUIRE: NETWORKING
# KEYWORD: shutdown

. /etc/rc.subr
. /etc/network.subr

name="wpa_supplicant"
desc="WPA/802.11 Supplicant for wireless network devices"
rcvar=wpa_supplicant_enable

ifn="2"
if [ -z "$ifn" ]; then
	return 1
fi

if is_wired_interface $(ifn) ; then
	drivers="wired"
else
	drivers="bsd"
fi

load_rc_config $name

command=$(wpa_supplicant_program)
conf_file=$(wpa_supplicant_conf_file)
pidfile="/var/run/$(name)/$(ifn).pid"
command_args="-B -i -ifn -c $conf_file -D $driver -P $pidfile"
required_files=$conf_file
required_modules="wlan_wep wlan_tkip wlan_ccmp"

run_rc_command "$1"
```

Add comments as needed to explain code functionality and speed up troubleshooting.

Unfortunately, version 1.8 of Lemonade Server is missing a dependency in its installer, which prevents NPU + GPU operation on Windows. Annoyingly, v1.8.2 keeps getting flagged as a trojan despite VirusTotal reporting the .exe file as clean, which has prevented me from updating to see whether that fixes the problem.

So I was only able to run GPU-only tests, using a tuned version of OpenAI's open-weight gpt-oss-20b model: Openai_gpt-oss-20b-CODER-NEO-CODE-DI-MATRIX-GGUF. On the X1 Pro, I used AnythingLLM as the conversation GUI, with stock settings. On the 7800X3D + RX 7900 XTX system, I used LM Studio and a special creative reasoning system prompt. Both systems used llama.cpp's Vulkan backend. The results were as follows:

| System | Tokens/sec | Tokens Generated | Time to First Token (s) |
|---|---|---|---|
| X1 Pro | 24.22 | 2767 | 0.84713 |
| 7800X3D + 7900 XTX | 135.05 | 1352 | 0.30 |

The X1 Pro is clearly the slower and less capable of the two, with roughly 18% of the tokens/sec of the desktop's larger, dedicated GPU and a time to first token roughly 2.8 times as long (about 182% longer).
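These percentages can be checked directly against the table above. This is a small sketch in plain `sh`, using `awk` for the floating-point math; the helper names are made up for this example. Note that "percent longer" is the ratio minus one, which is why a time about 2.8 times as long is only about 182% longer.

```sh
#!/bin/sh
# Ratios behind the comparison, using the values from the table above.

# Print $1 as a percentage of $2, to one decimal place.
pct_of() {
    awk -v a="$1" -v b="$2" 'BEGIN { printf "%.1f\n", a / b * 100 }'
}

# Print how many times larger $1 is than $2, to two decimal places.
times_as_large() {
    awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f\n", a / b }'
}

# Print how much larger $1 is than $2, as a percentage increase.
pct_increase() {
    awk -v a="$1" -v b="$2" 'BEGIN { printf "%.1f\n", (a / b - 1) * 100 }'
}

echo "Tokens/sec: X1 Pro at $(pct_of 24.22 135.05)% of the desktop"  # 17.9
echo "TTFT: $(times_as_large 0.84713 0.30)x as long"                 # 2.82
echo "TTFT: $(pct_increase 0.84713 0.30)% longer"                     # 182.4
echo "Tokens generated: $(times_as_large 2767 1352)x as many"         # 2.05
```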

However, the X1 Pro produced far more in-depth code, at just over 2x the tokens, which I attribute to an unexpected interaction between the system prompt and the LLM in LM Studio on the desktop. In terms of the user experience, I was impressed by the speed of the LLM once it began producing tokens, as well as the quality of the code.

Takeaways

- The X1 Pro is a compromise option that is highly functional within the current constraints of the market.

- However, I do not recommend spending more than $1000 on the 64GB RAM + 1TB SSD configuration.

- The X1 Pro is a space-efficient design with good acoustics, even when the fans spin up.

- Loading Unreal Engine 5 projects with lots of assets is doable, but will heavily load the CPU at various times, and moderately load the GPU.

- I have not tried in-engine gameplay testing, but I do not expect it to end well.

- Occasional GPU driver shutdowns can occur when doing strenuous workloads.

- Software support on Windows for the HX 370 processor's NPU is a bottleneck.

- Lemonade Server is an effective tool for running LLMs, despite some dependency and update issues.

- With llama.cpp's Vulkan backend, the GPU reached about 24 tokens/second with the gpt-oss-20b-based model I tested.

- If time to completion is not a major constraint, giving up speed in exchange for the ability to run LLMs with larger parameter counts is a valid trade-off.
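To put the time-to-completion trade-off in concrete terms, here is a minimal sketch that estimates how long a response takes, assuming generation runs at a constant rate after the first token (a simplification). With the benchmark figures, the X1 Pro needs roughly two minutes for its 2767-token answer versus about 10 seconds on the desktop; even at the desktop's shorter length, the X1 Pro would need close to a minute. The function name is illustrative only.

```sh
#!/bin/sh
# Rough wall-clock time for one response:
#   seconds ~= time_to_first_token + tokens_generated / tokens_per_second
# Assumes a constant generation rate, which is a simplification.

# $1 = time to first token (s), $2 = tokens generated, $3 = tokens/sec
completion_seconds() {
    awk -v ttft="$1" -v n="$2" -v rate="$3" \
        'BEGIN { printf "%.1f\n", ttft + n / rate }'
}

echo "X1 Pro, 2767 tokens:  $(completion_seconds 0.84713 2767 24.22) s"  # 115.1
echo "Desktop, 1352 tokens: $(completion_seconds 0.30 1352 135.05) s"    # 10.3
echo "X1 Pro, 1352 tokens:  $(completion_seconds 0.84713 1352 24.22) s"  # 56.7
```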

Overall, at this moment, my assessment is that the X1 Pro is a good-enough jack-of-all-trades workstation that can get just about any task done, to some level of satisfaction.