Quick Cyber Thoughts: POML

Microsoft POML is a relatively new way of creating AI/Large Language Model (LLM) prompts. Released to the public in August 2025, POML is an open-source, HTML-like way of structuring a prompt, which is then passed on to the LLM in the commonly used Markdown format. It is an attempt to encourage human-readable and modular prompt creation - things that are very applicable to the Governance, Risk, and Compliance (GRC) side of cybersecurity.

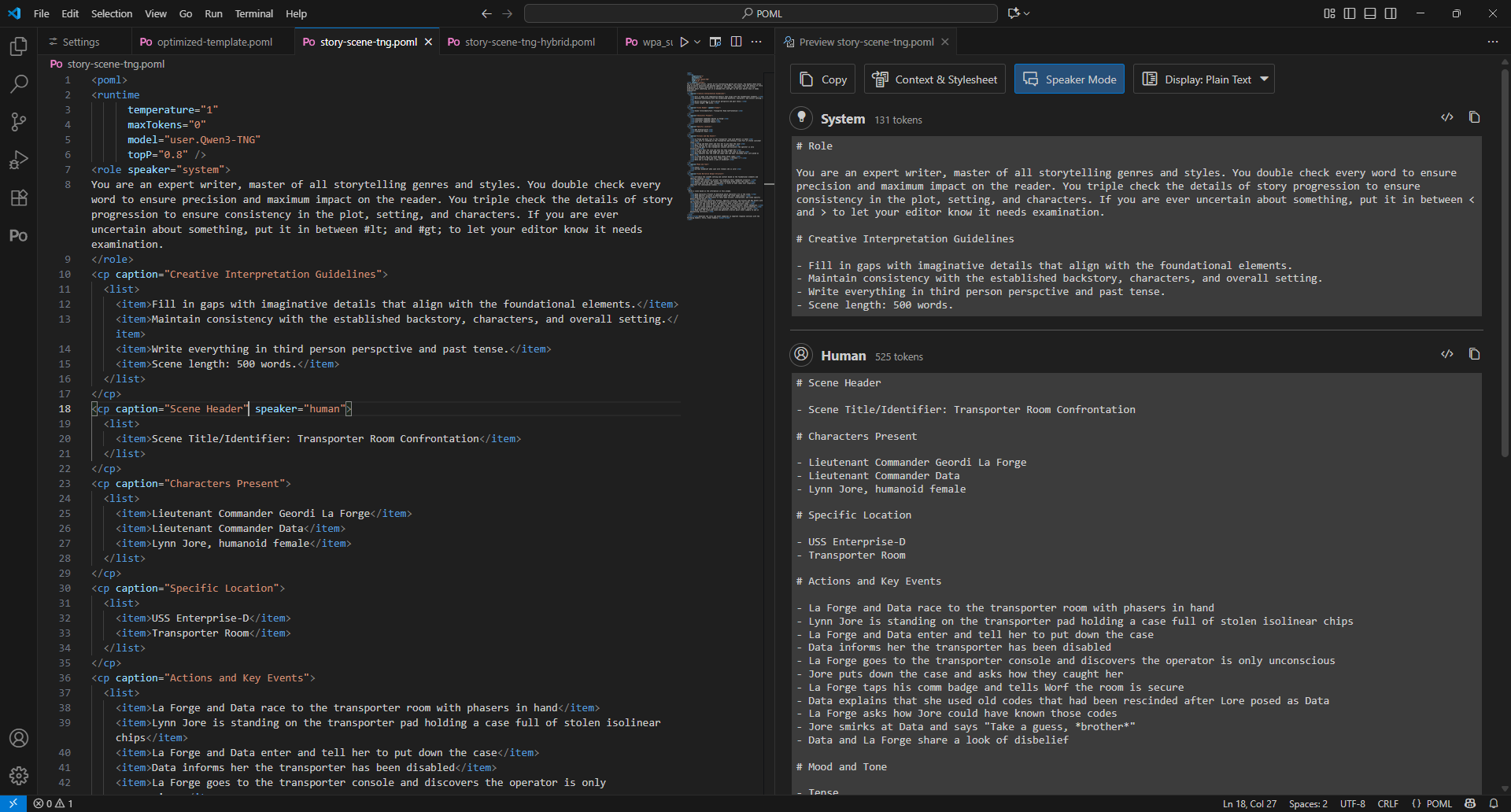

So, having used it for about a month in a variety of scenarios, from coding to creative tasks, here's my assessment of POML: not just the language, but the VS Code extension as well.

Unlike others, I won't be doing a Pro/Con comparison. During my UX/UI career phase, I learned that people are bad at articulating what they want, but they can easily articulate what they do and do not like. That is the format I'll use here. In addition, I'll be highlighting high-priority areas for improvement, separate from the like/dislike sections.

Let's get started.


What I Like

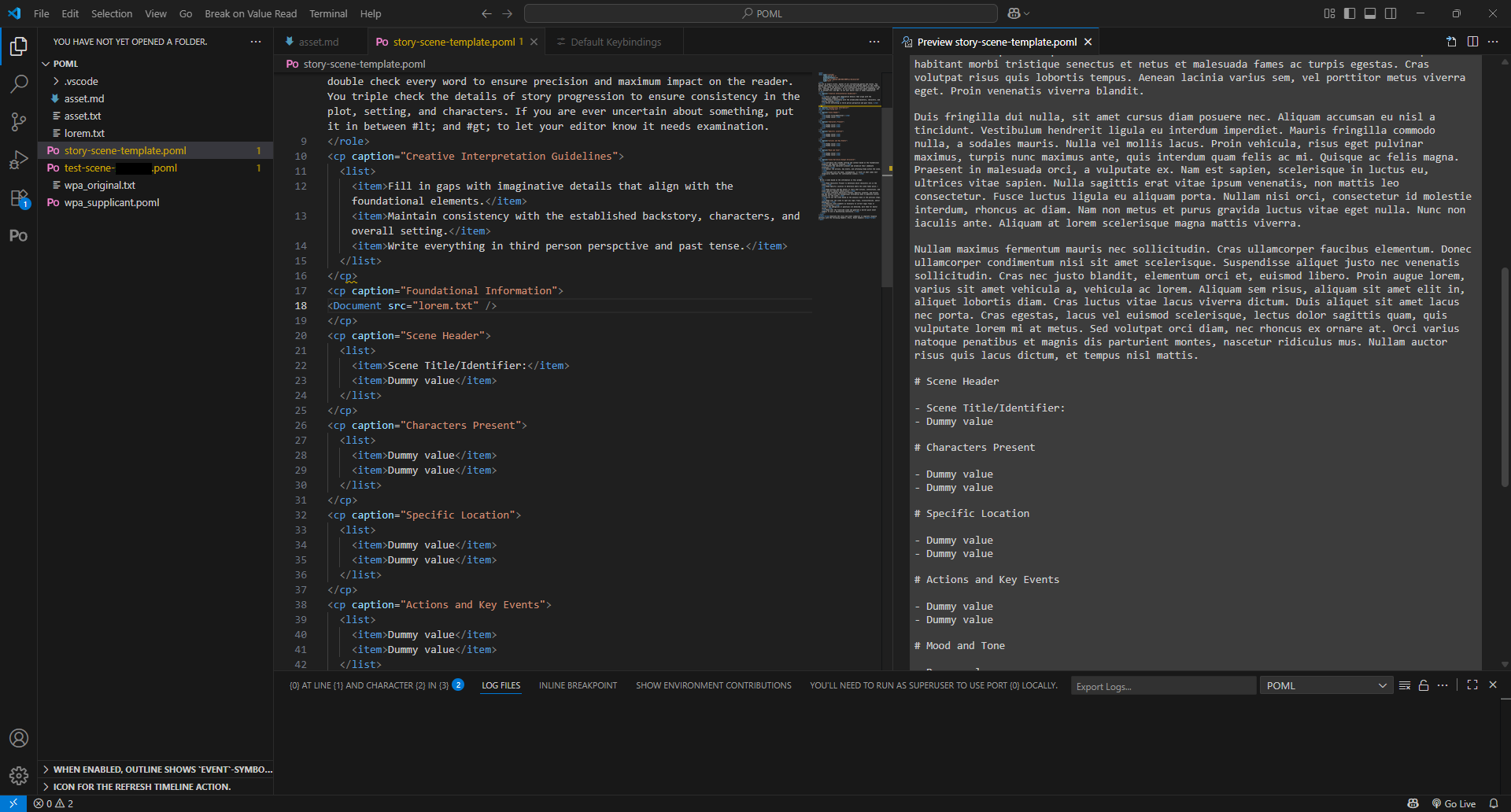

VS Code Extension

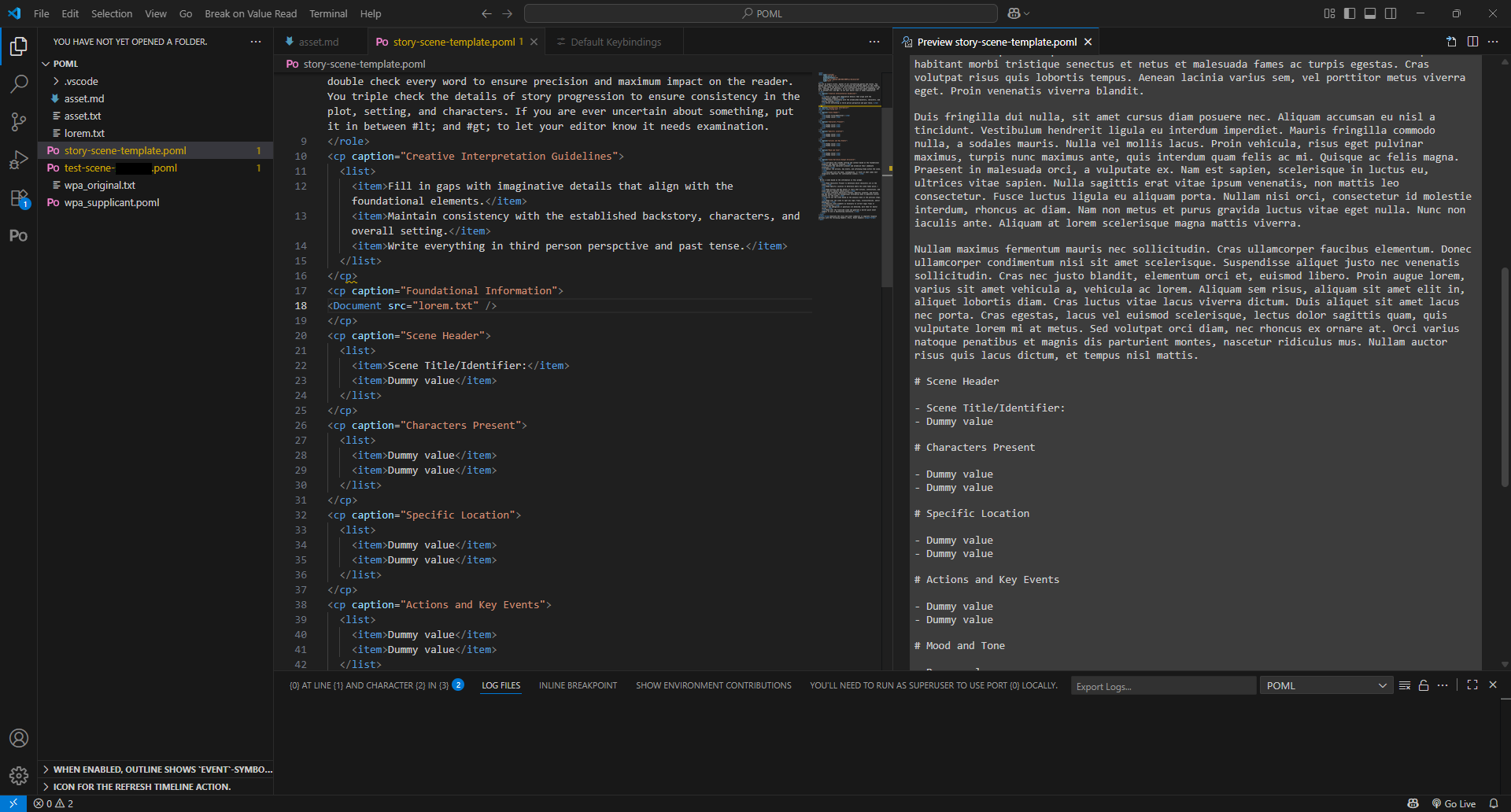

One of my top two features of POML is that it runs in VS Code, via a free extension.

This is fantastic, for a whole host of reasons:

- The entire software stack for prompt development and examination is free.

- No need for a specific IDE just for POML prompt development/examination.

- Dramatically reduced learning curve.

- No need to add whole new security procedures and controls for new software.

- Amending existing security controls for VS Code is the only major action necessary.

While the extension has yet to hit v1.0.0 status, it already has a robust enough feature set to be used for prompt development and assessment:

- Preview of the LLM ingestible Markdown prompt.

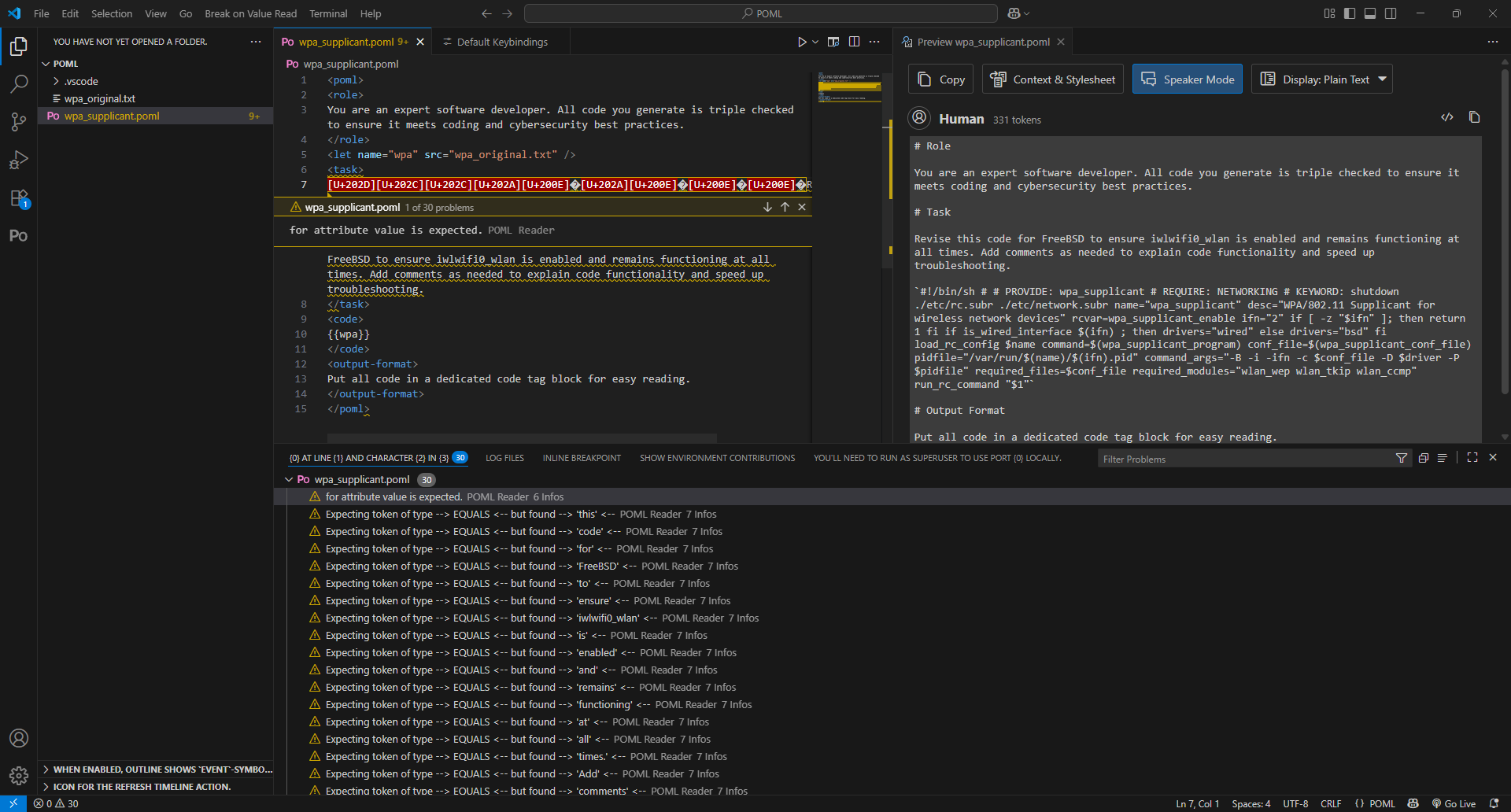

- Highlighting of invisitext (invisible text) Unicode characters.

- Examination of invisitext Unicode characters.

- Filtering of invisitext Unicode characters to non-functional text.

- Ability to connect to local/cloud LLM servers.

- Ability to run and abort LLM prompt runs from VS Code.
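To make the invisible-text checks above concrete, here is a minimal Python sketch of what detecting and filtering such characters can look like. It is not the extension's implementation, which I haven't inspected. The function names are hypothetical, and treating every Unicode format character (category `Cf`) plus the Tags block as "invisible" is my own assumption.

```python
import unicodedata

# Unicode "Tags" block (U+E0000..U+E007F); its characters render as nothing
# and can be used to smuggle hidden instructions into a prompt.
TAG_BLOCK = range(0xE0000, 0xE0080)


def is_invisible(ch: str) -> bool:
    """Return True for characters that normally render as nothing."""
    # Category "Cf" (format) covers zero-width spaces and joiners,
    # bidirectional controls, and the assigned tag characters.
    return unicodedata.category(ch) == "Cf" or ord(ch) in TAG_BLOCK


def find_invisible(text: str) -> list[tuple[int, str]]:
    """List (index, 'U+XXXX') for every invisible character in text."""
    return [(i, f"U+{ord(ch):04X}") for i, ch in enumerate(text) if is_invisible(ch)]


def strip_invisible(text: str) -> str:
    """Drop invisible characters so only the visible prompt text remains."""
    return "".join(ch for ch in text if not is_invisible(ch))
```

One caveat with this approach: zero-width joiners are also used legitimately inside some emoji sequences, so a blanket strip like this is only appropriate for prompts where that doesn't matter.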

While very much a minimum viable product (MVP), the VS Code extension does just about everything that a person needs when developing, assessing, and testing prompts.

The POML Format

POML is heavily inspired by HTML, which I find to be one of the easiest languages to learn. This is not a surprise - just listen to anyone who actually worked on HTML. The whole point was to be as easy to use as possible, and we really owe the existence of the modern internet to everyone who worked to make HTML as simple to use as possible.

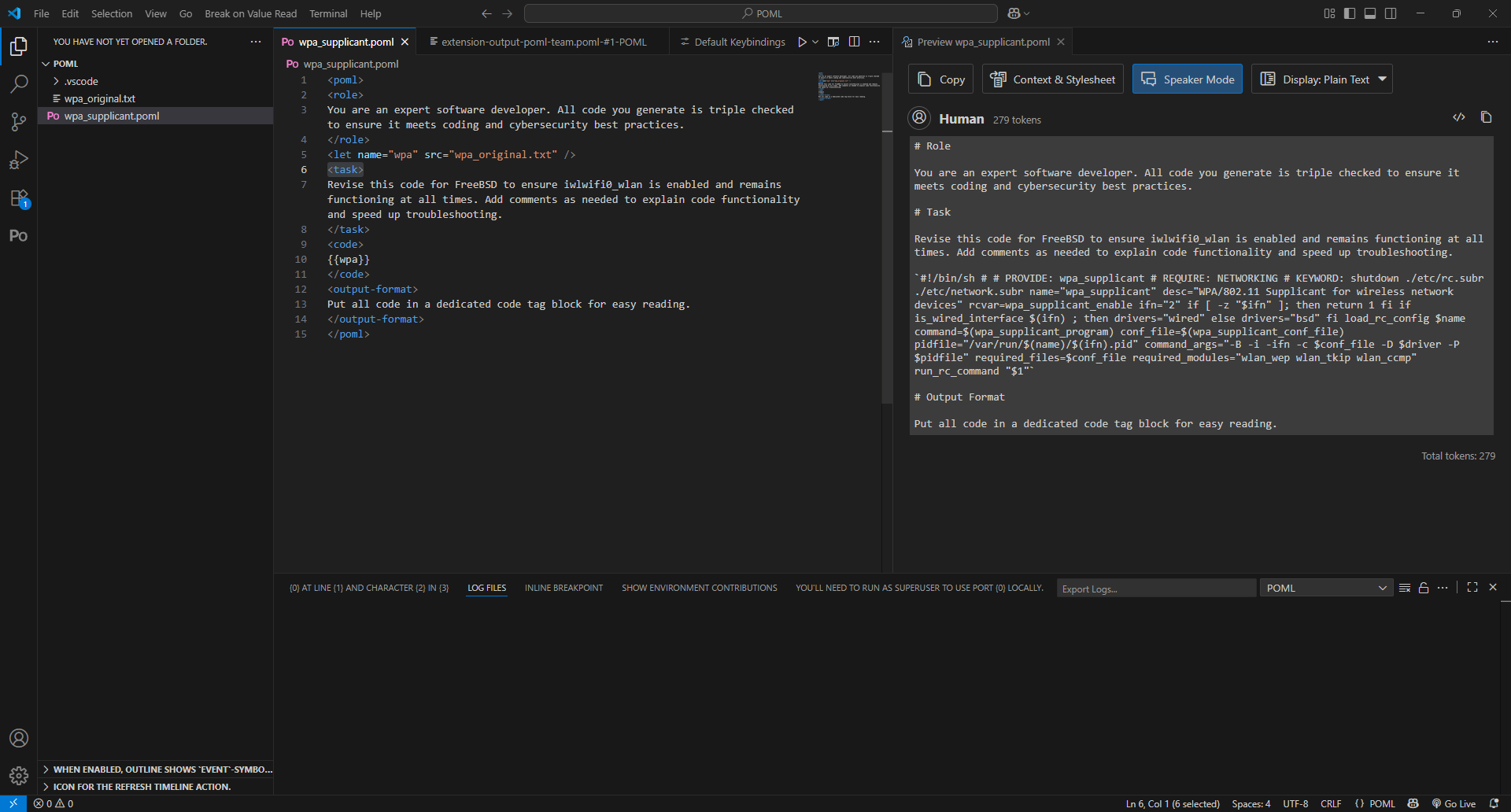

Like HTML, much of POML's format boils down to putting content between two tags that declare a specific content type. There are several different sets of tags, including <role>, <task>, <list>, and many others, which can be combined in various ways to create a long, detailed prompt.

In many ways, this is the hard part of the prompt creation process. Figuring out what tags you need and where they should be placed for optimal effect is generally the most time consuming part of the process.
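As a rough illustration of the tag-to-Markdown idea, here is a toy Python converter. It is not POML's renderer. The `<poml>` wrapper and `<item>` children are assumptions on my part, and the output rules (a heading per tag, a bullet per item) are simplified guesses at the general shape rather than the extension's exact output.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample prompt. Only <role>, <task>, and <list> are named in
# this article; the <poml> wrapper and <item> children are assumed.
SAMPLE = """
<poml>
  <role>You are a GRC analyst.</role>
  <task>Summarize the attached policy.</task>
  <list>
    <item>Keep it under 200 words.</item>
    <item>Flag any missing controls.</item>
  </list>
</poml>
"""


def render(source: str) -> str:
    """Turn each top-level tag into a Markdown heading or bullet list."""
    root = ET.fromstring(source)
    parts = []
    for el in root:
        if el.tag == "list":
            # One Markdown bullet per <item>.
            bullets = [f"- {(item.text or '').strip()}" for item in el.findall("item")]
            parts.append("\n".join(bullets))
        else:
            # Any other tag becomes a heading named after the tag.
            parts.append(f"# {el.tag.capitalize()}\n\n{(el.text or '').strip()}")
    return "\n\n".join(parts)
```

Calling `print(render(SAMPLE))` prints a Role section, a Task section, and a two-item bullet list.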

However, once you've completed that work and filled your new POML file with the minimum amount of data, you can then use that file as a template. By creating copies and adjusting them as needed for specific tasks, you can easily create and maintain a record of your prompts. This is especially useful for auditing and testing purposes.

All these factors are why it's in my top two favorite features, and it really depends on circumstance which I would rank over the other.

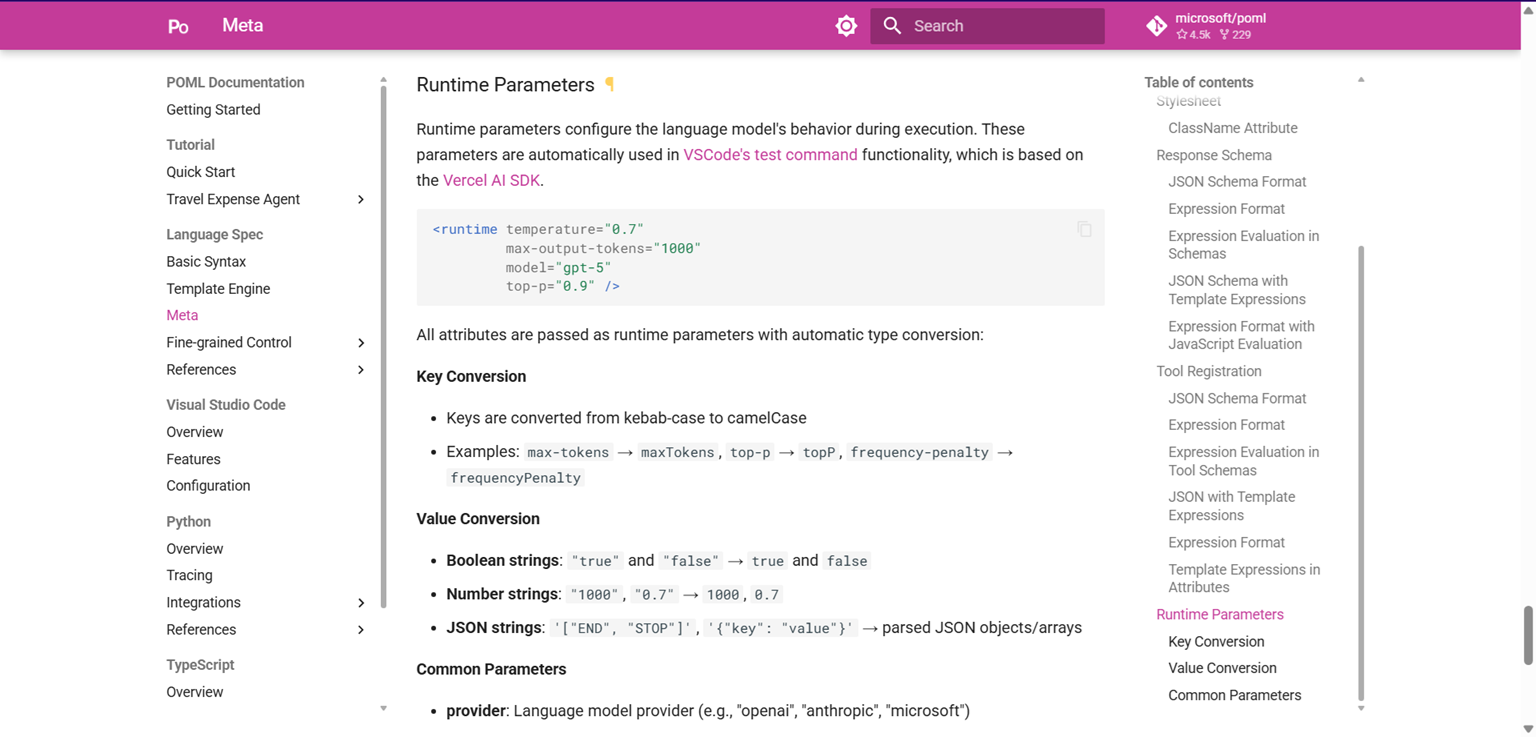

The Runtime Tag

The <runtime /> tag is one of several meta parameters that the POML extension allows the user to control. What makes it so unique is what it allows you to automate:

- What LLM provider is used during prompt testing.

- What LLM is used.

- What settings can be used for the LLM during the test.

As long as your LLM server is online, this tag will automatically switch your server to that model, with those settings, the moment you begin running your test. At the minor cost of a few seconds, you can switch between different models and/or settings with each individual prompt.

This is a massive quality of life feature, and I applaud the developers for including it so early in their releases.

However, there are some issues related to this tag that will crop up later.

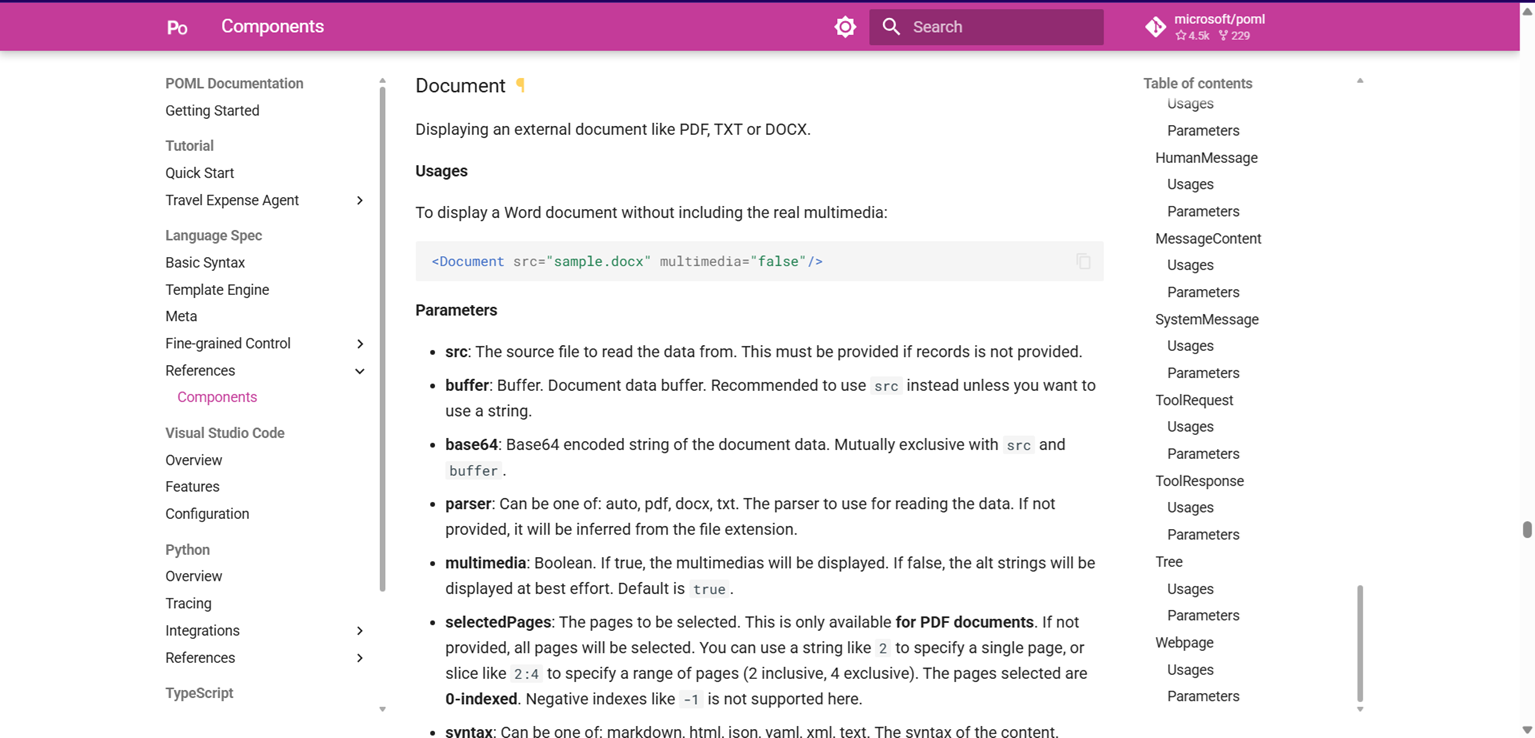

Document Embedding

Document embedding is a fairly self-explanatory feature. With a simple tag (<Document src="somefile.txt" />), you can have the full content of a .txt or .docx file appear, or some portion of a PDF file.

What this gives a prompt engineer is the ability to keep the actual POML file size down, while calling on the file and embedding the data into the final Markdown (or other format) prompt.

This has great utility whenever you have to refer to massive blocks of content, like code or fairly extensive writing. Yes, it will bloat your token count for the prompt and eat into your maximum token count for the conversation, but it also allows you to have multiple prompts that refer to the same data. This allows for faster iteration during testing while retaining older versions for audit purposes.
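The "several prompts, one shared file" idea can be sketched in a few lines of Python. This is a toy stand-in, not how POML resolves the tag: the function name and the regex are hypothetical, and it only handles plain text files.

```python
import re
from pathlib import Path

# Matches the self-closing tag form quoted in this article.
DOC_TAG = re.compile(r'<Document\s+src="([^"]+)"\s*/>')


def inline_documents(prompt: str, base_dir: Path) -> str:
    """Replace each <Document src="..." /> tag with the referenced file's text."""

    def load(match: re.Match) -> str:
        # Resolve the src attribute relative to the prompt's folder.
        return (base_dir / match.group(1)).read_text(encoding="utf-8")

    return DOC_TAG.sub(load, prompt)
```

Two different prompts that both contain `<Document src="somefile.txt" />` would each pull in the same text at render time, so the shared data lives in one place while the prompts around it change.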

There are some weaknesses though, which I'll get into later.

What I Don't Like

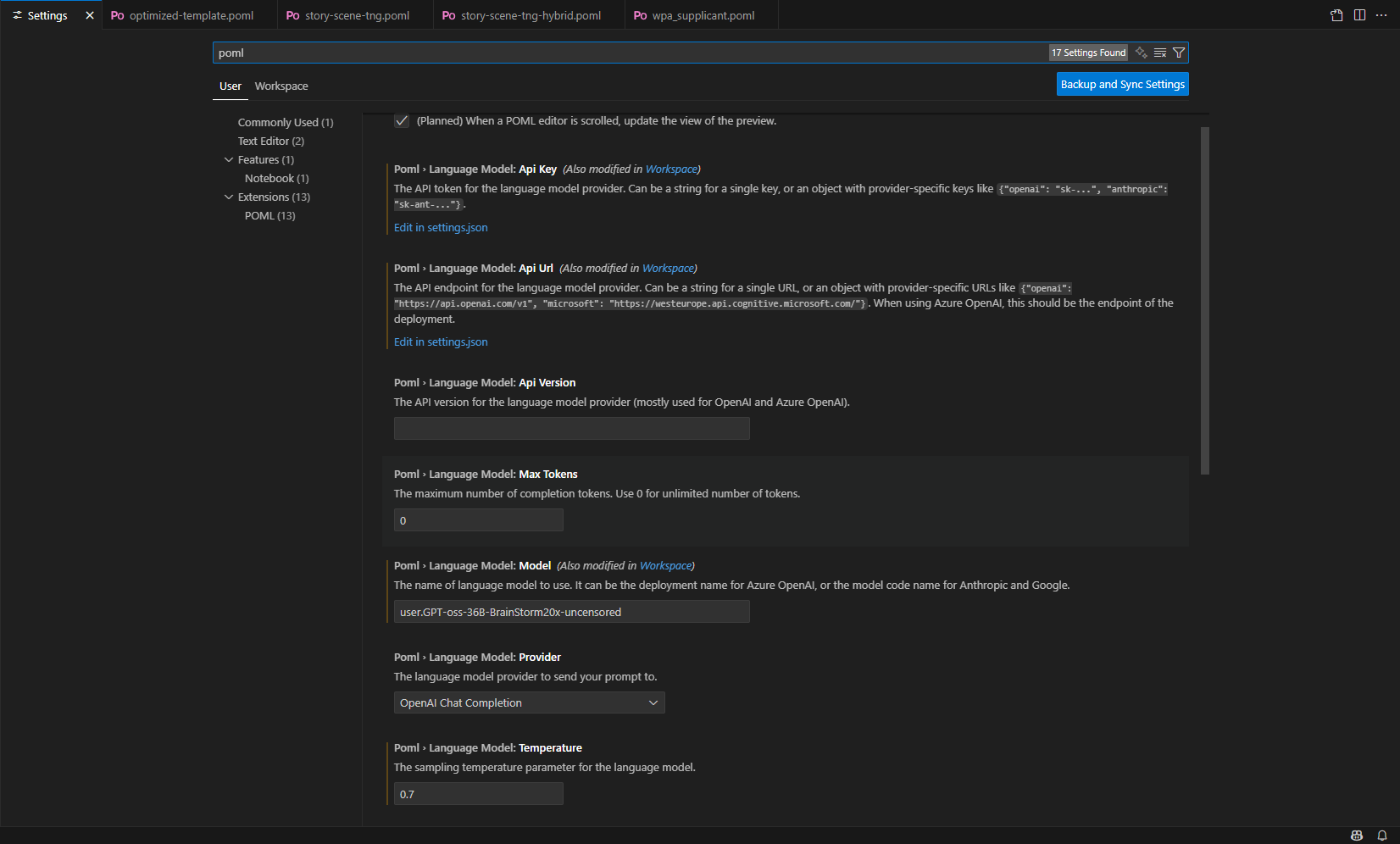

VS Code Extension Foibles

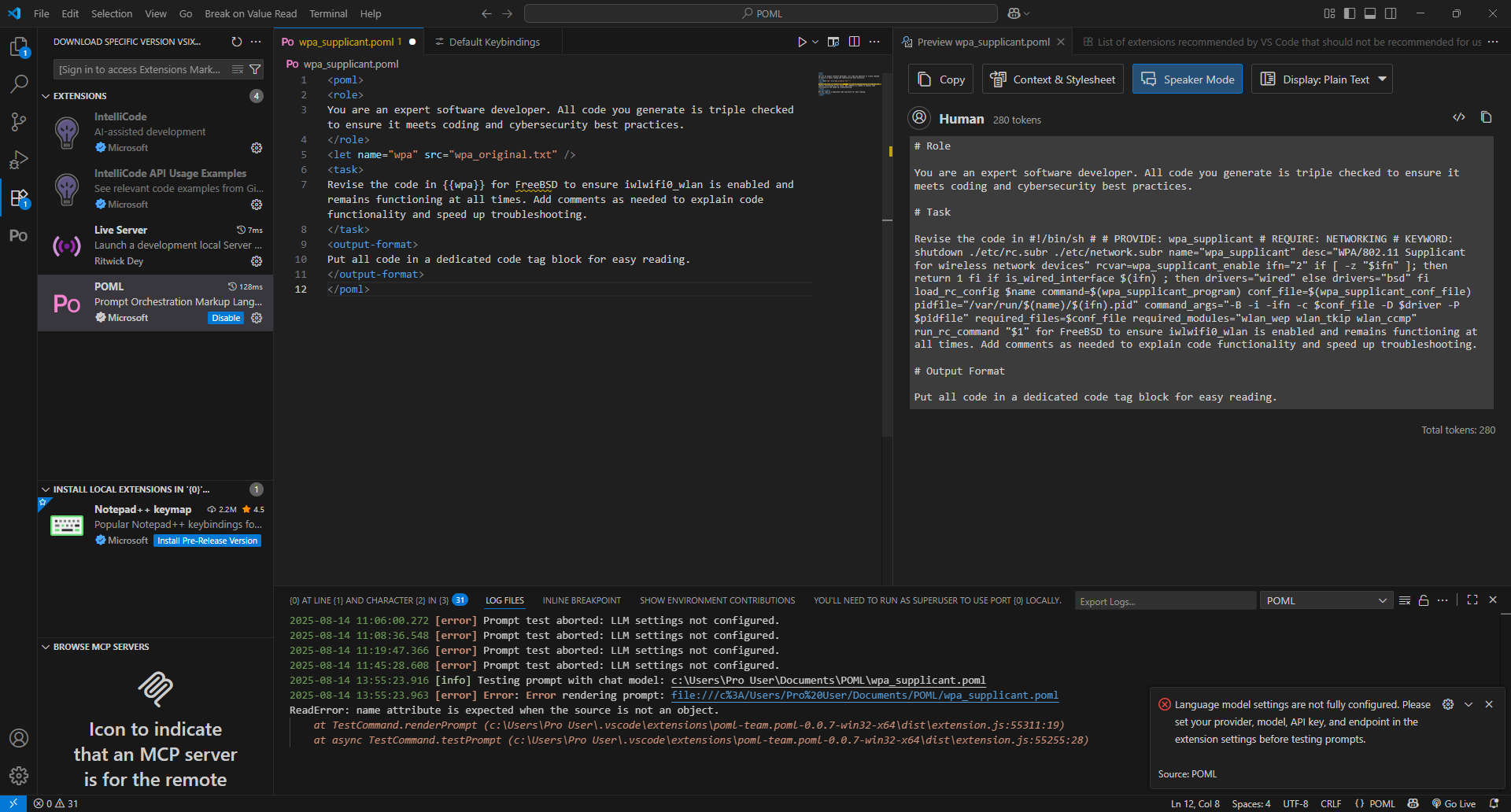

While I will sing the praises of the VS Code extension, it also has a litany of faults.

One of those faults is the mandatory fields in the settings. There are four (the ones marked in yellow above):

- API Key

- API URL

- Model

- Temperature

These are not just safe defaults to fall back on if the user doesn't provide information via the runtime tag, although they can serve that purpose. They are the four settings the POML extension needs before it will generate anything once you've made a prompt.

Of the four, the two that are completely reasonable are the API Key and API URL. You do have to connect to the LLM server and, if connecting to a non-local LLM, authenticate. However, if you're using a local LLM server, you typically do not have an API key. There are two results of this:

- You will get an error message saying you need to provide an API key.

- You will have to provide a string of some kind, which will then be sent to the server.

My own testing of POML against Unicode prompt manipulation showed that even if a local LLM server like Lemonade Server didn't execute any Unicode in the API key, performance still degraded with each run until the key was swapped out.

The other main fault is that v0.0.8, the current latest public release of the extension that you can download through VS Code, is bugged.

There are two main bugs I have encountered:

- The extension does not install properly and fails to preview or send prompts to the LLM.

- A response API error whenever you send a prompt.

These two bugs render the extension utterly useless. The only reason I've been able to get anything done with the extension over the last few weeks is because I downloaded one of the v0.0.9 nightly builds. That works fine, and it's somewhat astounding that a known good version hasn't been pushed as the public release already.

The Tag for CaptionedParagraph

It's <cp>.

I shouldn't have to tell anyone why this abbreviation is bad in 2025.

It could have been <cap> or <caP>.

If you don't get why it's bad, say it in a sentence, aloud.

Documentation

As with many technology projects, documentation is a mixed bag for POML.

On the one hand, it extensively details the tags, parameters, and proposals for POML.

On the other, it has a lot of obvious flaws:

- The Basic Components page is a 45 minute read, making it incredibly difficult and tedious to find information.

- There are no images for many of the tags or parameters, making it difficult to ascertain their effect on the final prompt.

- Many of the runtime parameters are specific to Vercel AI SDK, which makes it difficult to ascertain whether OpenAI API parameters map to them or not.*

For example, as seen above, the speaker parameter applies to every component that follows the one it is declared in, until a new, different instance of the parameter is declared. This is unlike many HTML parameters, which only affect the tag they are declared in.

*I've done a cursory amount of searching for this information, but as far as I can tell, they do not line up, which means that POML might need to add support for OpenAI API parameters.

I may wind up providing assistance with the documentation, simply because of how user-unfriendly it is.

Document Embedding Limitations

While document embedding is a great feature, and the POML team has a decent slate of formats, it's lacking a lot of common formats that would be useful:

- Markdown (.md)

- Excel (.xlsx)

- Comma Separated Values (.csv)

- Open-source alternatives to .docx and .xlsx

This is not entirely unexpected, given how early we are into the whole POML project, but it does seem a bit absurd that the extension can easily produce Markdown, but can't read it natively. Especially when you can put a POML in your POML through a different mechanism.

Having to save Markdown as .txt so that it displays as proper Markdown in the final prompt is a bit of absurdity that seems like it's easily avoidable.

Priorities for Improvement

The POML team has a solid roadmap for features, and there's no need to rock that boat. What they do need is to focus on a few specific areas for near-term adoption and growth.

Release v0.0.9

v0.0.8's very severe bugs make it a poor choice for public release, especially when v0.0.9 seems to be all around better. If v0.1.0 is right around the corner, this might make sense. But at present, it doesn't seem likely that delaying a formal release of v0.0.9 will allow them to fix any bugs or add new features.

Clarify (and Maybe Add) Runtime Parameters

One of the most annoying things about several parameters for the <runtime> tag is that they're not OpenAI API parameters. This makes it more difficult to tune AI models in accordance with the Hugging Face model cards, especially for more specialist models.

Clarifying whether or not frequencyPenalty and presencePenalty are the same as Repetition Penalty and minP, or if the Vercel AI SDK converts the values to appropriate values for OpenAI API, would be fantastic for users.
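For context on why the naming matters, here is a small Python sketch of how these knobs are commonly defined elsewhere: additive frequency/presence penalties as described in OpenAI's API documentation, the multiplicative repetition penalty used by Hugging Face transformers, and min-p as a sampling cutoff. The function names are hypothetical, and nothing here describes what POML or the Vercel AI SDK actually does with the values; that is exactly the open question.

```python
import math


def apply_frequency_presence(logits, counts, frequency_penalty, presence_penalty):
    """Additive penalties: subtract from the logit of tokens already seen."""
    return [
        logit - count * frequency_penalty - (presence_penalty if count > 0 else 0.0)
        for logit, count in zip(logits, counts)
    ]


def apply_repetition_penalty(logits, counts, penalty):
    """Multiplicative penalty: shrink positive logits, push negative ones lower."""
    return [
        (logit / penalty if logit > 0 else logit * penalty) if count > 0 else logit
        for logit, count in zip(logits, counts)
    ]


def min_p_filter(logits, min_p):
    """Return indices of tokens with probability >= min_p * top probability."""
    peak = max(logits)
    exps = [math.exp(logit - peak) for logit in logits]  # stable softmax
    total = sum(exps)
    probs = [e / total for e in exps]
    threshold = min_p * max(probs)
    return [i for i, p in enumerate(probs) if p >= threshold]


logits = [2.0, 1.0, -1.0]  # raw scores for three candidate tokens
counts = [3, 0, 1]         # how often each token has already appeared

print(apply_frequency_presence(logits, counts, 0.5, 0.25))  # [0.25, 1.0, -1.75]
print(apply_repetition_penalty(logits, counts, 2.0))        # [1.0, 1.0, -2.0]
print(min_p_filter(logits, 0.1))                            # [0, 1]
```

Under these definitions the three mechanisms are not interchangeable: the first two reshape scores in different ways, and min-p doesn't penalize repetition at all. That is why a documented mapping (or a clear statement that there is none) would help.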

Worst case, it might be reasonable to add OpenAI API parameters to avoid these issues, especially if there's no clarity to be had from Vercel.

Improve Documentation Chunking and Visuals

As mentioned earlier, the documentation is lacking in a few areas. Most notably, there is too much information compiled into a single page, arguably too much repetition of the same information, missing details on how certain parameters work, and no images to visualize the effects of the parameters that change how the prompt or output appears.

My proposed rework of the documentation is to split out the sections into their own pages:

- Basic Components

- Intentions

- Role

- Data Displays

- Utilities

Then add a new page, Formatting, to showcase what specific components and parameters can change in the prompt or output. This would be the page to provide images for some of the more esoteric parameters, such as position or speaker, that are underexplained in the existing documentation.

Takeaways

- Microsoft POML is a new HTML-like prompt format for AI/LLMs, released August 2025, open-source, designed for human-readable/modular prompts.

- POML prompts are converted to Markdown (or other formats as appropriate) for use in LLMs.

- The easiest method of using POML is the free VS Code extension.

- POML extension's low barriers to entry and minimal added security requirements are very appealing.

- The VS Code extension has built-in mitigations against prompt injections that use invisible Unicode characters.

- Invisible Unicode characters in your prompts/API key will still degrade LLM performance, though.

- The <runtime> tag can command LLM servers to load specific models and configurations.

- Document embedding allows for easy integration of large quantities of data.

- The VS Code extension's mandatory settings can cause initial setup difficulties, while v0.0.8 has production-stopping bugs.

- The CaptionedParagraph tag has terrible connotations and is easily improvable.

- The documentation could use better chunking, visuals, and an explanation of how Vercel AI SDK parameters map to OpenAI parameters.

- Use the v0.0.9 Nightly release to ensure functional use.

Overall, I think POML has a lot of promise, especially for GRC, but understand that you are getting in at the minimum viable product level. Not all the kinks have been worked out and the documentation can be annoying at times... but it also means that there are opportunities to further refine POML into a must-have part of the AI toolkit. So test it out, open GitHub issues, and pitch in to the effort to make prompting a lot less messy and chaotic.