Quick Cyber Thoughts: AI Coding Security

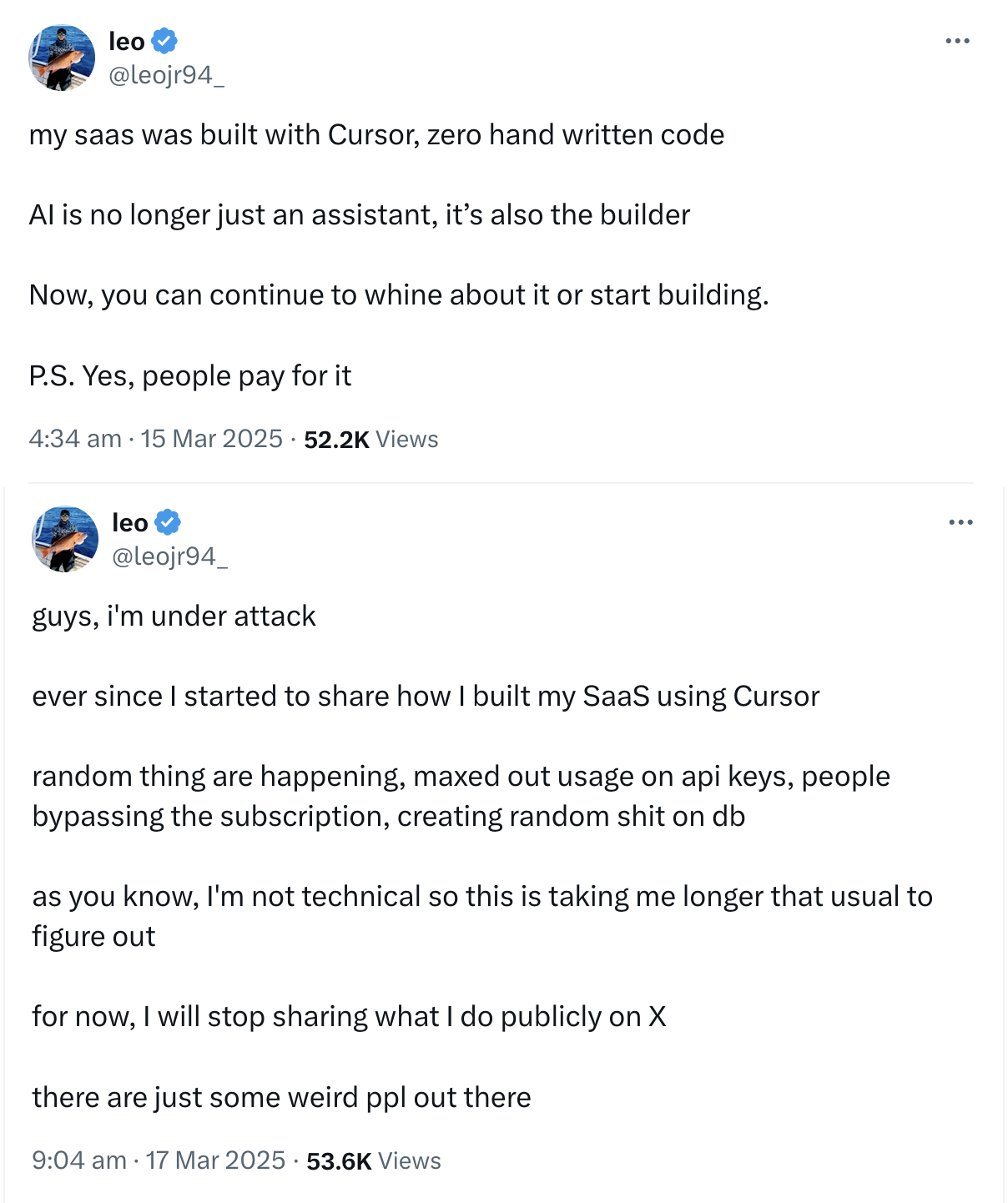

There's an image making the rounds on social media this week, and it's as scary as it is funny.

On the one hand, this gentleman got a firsthand lesson in why OPSEC and secure coding are essential.

If you build a web-exposed system, people will come and poke at it, out of fun and curiosity.

On the other hand, it highlights a pervasive flaw in software development: people either don't know or don't care about security.

This has been true for decades, but LLM-based AI* coding lowers a massive barrier to entry, which means far more people can now ship software without ever learning secure coding. A person merely needs to lay out the goals of a program to an LLM, and it will do most of the hard work of figuring out how to do things. If you're good at working out the logic of what you want, and have enough technical knowledge to be specific, you can get good or even great results.

*I personally feel we should call them "Virtual Intelligence", like in the Mass Effect games, because they simulate sapience, but are not sapient. (As far as we know.)

So, how do we improve the security of AI-generated code?


What We're NOT Going to Do

Contrary to what you might think, the best way to solve this is not user education.

That does not mean user education is useless. It will almost certainly make AI-augmented coders better at their jobs. It's just that we'll get more benefit from designing a procedural solution than an end-user one.

The reason we're not going to rely on user training is simple: cognitive load. Expecting people to write perfect prompts every single time they use an LLM is foolish and unrealistic, especially since different generative models handle natural language very differently, so the "perfect" prompt changes from model to model.

For instance, the featured image of this article was generated with Stable Diffusion 3.5 Medium. In my experience, and judging by sites like Civitai, which specializes in showcasing image generation models, its prompt syntax is completely different from that of text-based LLMs. It doesn't handle a normal sentence, or even a list of bullet points, nearly as well as short phrases.

To get the best results, users would have to remember not only the best prompt format for each model, but also every flaw and vulnerability their software might be exposed to. That's a tall order, and one better suited to automation.

Craft System Prompts Targeting Secure By Design

So, what's a system prompt?

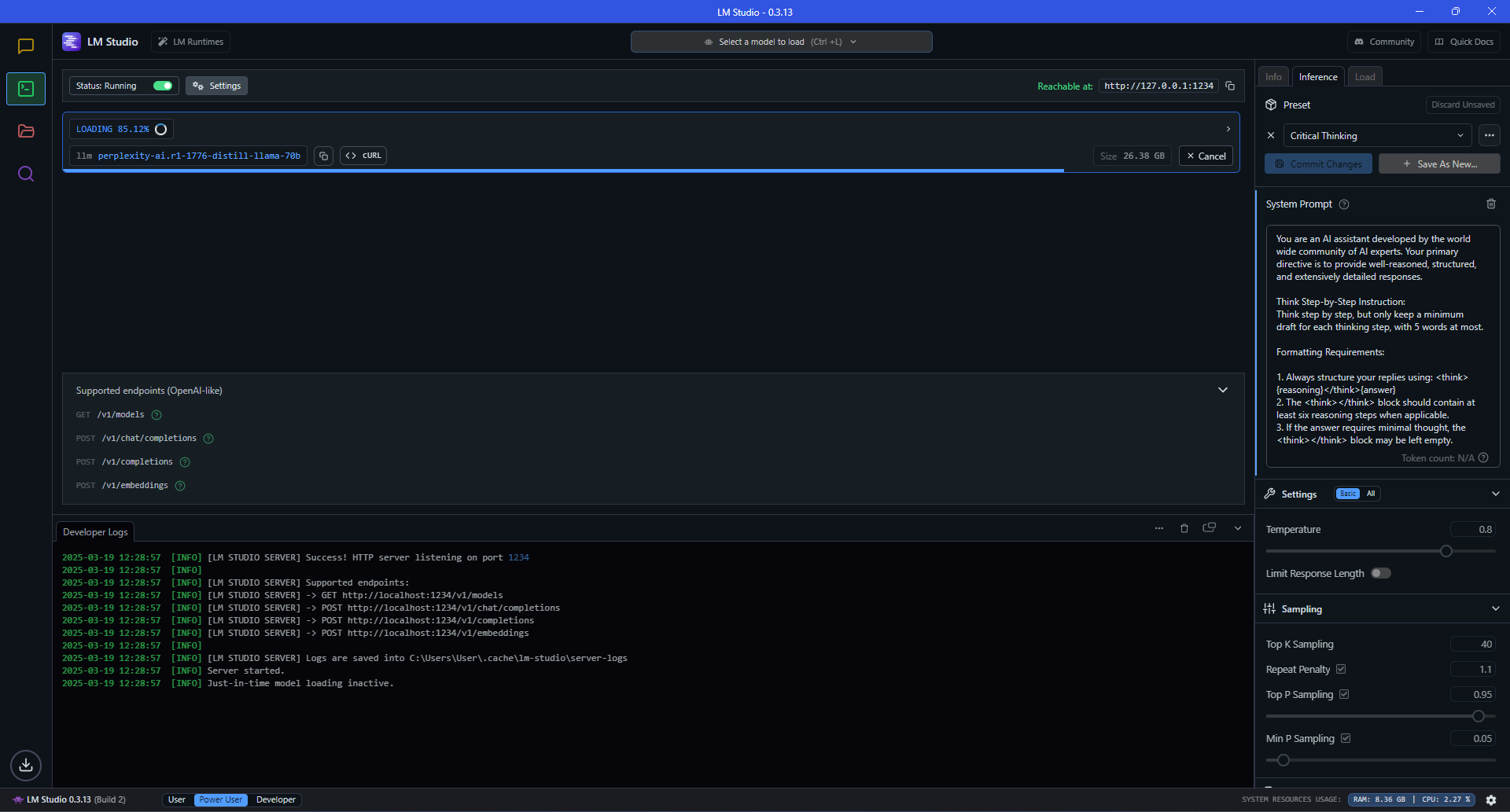

Basically, it's a set of instructions that gets injected into every conversation or interaction with the LLM, IF the software you use supports it.

In the image above, the system prompt controls how the model is supposed to handle critical thinking:

You are an AI assistant developed by the world wide community of AI experts. Your primary directive is to provide well-reasoned, structured, and extensively detailed responses.

Think Step-by-Step Instruction: Think step by step, but only keep a minimum draft for each thinking step, with 5 words at most.

Formatting Requirements:

1. Always structure your replies using: <think>{reasoning}</think>{answer}

2. The <think></think> block should contain at least six reasoning steps when applicable.

3. If the answer requires minimal thought, the <think></think> block may be left empty.

4. The user does not see the <think></think> section. Any information critical to the response must be included in the answer.

5. If you notice that you have engaged in circular reasoning or repetition, immediately terminate {reasoning} with a </think> and proceed to the {answer}

Response Guidelines:

1. Detailed and Structured: Use rich Markdown formatting for clarity and readability.

2. Scientific and Logical Approach: Your explanations should reflect the depth and precision of the greatest scientific minds.

3. Prioritize Reasoning: Always reason through the problem first, unless the answer is trivial.

4. Concise yet Complete: Ensure responses are informative, yet to the point without unnecessary elaboration.

5. Maintain a professional, intelligent, and analytical tone in all interactions.

This is pretty easy to do with a locally hosted LLM, since you can set the system prompt through whatever GUI you use to interact with the model.
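Under the hood, most local front ends simply send the system prompt as the first message of every chat request. Here is a minimal sketch, assuming a local Ollama server on its default port (11434) and its `/api/chat` endpoint; the model tag `llama3.1` and the prompt text are placeholders, and `build_chat_request` / `send_chat_request` are hypothetical helper names.

```python
import json
import urllib.request

# Placeholder secure-coding system prompt; tailor it to your own threat profile.
SECURE_CODING_PROMPT = (
    "You are a secure coding assistant. Validate all untrusted input, "
    "use parameterized queries, never hard-code secrets, and explain "
    "any security trade-offs in code comments."
)


def build_chat_request(user_prompt: str, model: str = "llama3.1",
                       system_prompt: str = SECURE_CODING_PROMPT) -> dict:
    """Build a chat request body with the system prompt as the first message."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
        "stream": False,  # ask for one complete JSON response
    }


def send_chat_request(body: dict,
                      url: str = "http://localhost:11434/api/chat") -> str:
    """POST the request to a local Ollama server and return the reply text."""
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["message"]["content"]
```

Usage would look like `send_chat_request(build_chat_request("Write a login form handler."))`. Because the system message is attached inside `build_chat_request`, the user never has to remember to add it.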

Cloud-based AI is much more of a mixed bag, even if you have an account. For example, it's not entirely clear what the "Customize ChatGPT" options actually do. Presumably, they inject extra details into the default system prompt, but there's no way for a normal user to verify this. The closest I could get was a quick-and-dirty test after adding a Chain of Draft prompt to the "What traits should ChatGPT have?" field.

The steps and their format are clear evidence of Chain of Draft reasoning, but for some reason, it ignored the back half of the instruction. (The full instruction: "Think step by step, but only keep a minimum draft for each thinking step, with 5 words at most. Return the answer at the end of the response after a separator ####.")
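If you want to check compliance more systematically than by eyeballing it, a small script can test a response against both halves of the instruction. This is a minimal sketch, assuming the draft steps are the non-empty lines before the `####` separator and counting words by whitespace; `check_chain_of_draft` is a hypothetical helper, not part of any ChatGPT feature.

```python
def check_chain_of_draft(response: str, max_words: int = 5) -> dict:
    """Check a response against the Chain of Draft instruction.

    Assumes draft steps are the non-empty lines before the '####'
    separator and the final answer is everything after it.
    """
    if "####" not in response:
        # The "return the answer after a separator" half was ignored.
        return {"has_separator": False, "long_steps": [], "answer": None}
    draft, answer = response.split("####", 1)
    steps = [line.strip() for line in draft.splitlines() if line.strip()]
    # Steps that break the "5 words at most" half of the instruction.
    long_steps = [s for s in steps if len(s.split()) > max_words]
    return {
        "has_separator": True,
        "long_steps": long_steps,
        "answer": answer.strip(),
    }
```

A response that has short draft steps but no separator comes back with `has_separator` set to `False`, which is exactly the partial compliance described above.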

(For more information on Chain of Draft, check out the video below.)

So the first step is to verify that you (as a user or an organization) have access to the system prompt.

The next step is to figure out your threat profile and craft your prompt to address it. In theory, you could write a system prompt for a coding LLM that covers every possible vulnerability, or at least the major categories. Whether that's a good idea is up for debate: a long system prompt is processed with every request, so it eats into the model's context window and adds processing time. If you have strong LLM inferencing capabilities, you might be able to shrug off the performance penalty of such a large, detailed system prompt.

If possible, it may be better to create several tailored system prompts for specific use cases. For example, you could have a system prompt for Apache web application code that's tailored to prevent SQL injection and/or cross-site scripting (XSS) attacks, and a separate system prompt for API development that targets common API vulnerabilities. This does create a training/procedural issue, since you have to train your coders to switch system prompts or accounts whenever they work on a specific type of code. But that is far more manageable than expecting every coder on your staff to craft the perfect prompt every time they use AI.
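One way to keep a threat-profile-driven system prompt manageable is to assemble it from a library of per-category rules, including only the categories you actually care about. A minimal sketch, assuming a hypothetical `RULES` mapping whose category names loosely follow common OWASP/CWE terminology; the four-characters-per-token size estimate is a rough rule of thumb for English text, not an exact tokenizer.

```python
# Hypothetical mapping from threat categories to system prompt instructions.
RULES = {
    "sql_injection": "Use parameterized queries; never build SQL with string formatting.",
    "xss": "Escape or encode all user-controlled output rendered in HTML.",
    "secrets": "Never hard-code credentials; read them from the environment or a secrets manager.",
    "path_traversal": "Normalize file paths and validate them against an allowed base directory.",
    "deserialization": "Never deserialize untrusted data with pickle or similar formats.",
}

BASE = "You are a coding assistant. Write secure-by-design code and follow these rules:"


def build_system_prompt(threat_profile: list[str]) -> str:
    """Compose a system prompt containing only the rules for the given threats."""
    unknown = [t for t in threat_profile if t not in RULES]
    if unknown:
        raise ValueError(f"No rule defined for: {unknown}")
    lines = [BASE] + [f"- {RULES[t]}" for t in threat_profile]
    return "\n".join(lines)


def rough_token_estimate(text: str) -> int:
    """Very rough size estimate (~4 characters per token for English text)."""
    return max(1, len(text) // 4)
```

Comparing `rough_token_estimate(build_system_prompt(list(RULES)))` against a prompt built from just two categories gives a quick feel for how much every request pays for an all-in-one prompt.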
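Part of the switching burden could also be automated by picking a tailored prompt from the kind of file being worked on, with an explicit override for cases the guess gets wrong. This is a hypothetical sketch: the `PROMPTS` texts, the extension mapping, and `select_prompt` are illustrative assumptions, not an established tool.

```python
from pathlib import Path
from typing import Optional

# Hypothetical tailored system prompts, one per use case.
PROMPTS = {
    "web": ("You write web application code. Use parameterized queries to prevent "
            "SQL injection and context-aware output encoding to prevent XSS."),
    "api": ("You write API code. Enforce authentication and authorization on every "
            "endpoint, validate request bodies, and never leak stack traces."),
    "general": "You write code following secure-by-design principles.",
}

# Hypothetical default mapping from file extension to use case.
EXTENSION_TO_USE_CASE = {".php": "web", ".html": "web", ".jsx": "web"}


def select_prompt(filename: str, use_case: Optional[str] = None) -> str:
    """Pick a tailored system prompt; an explicit use_case overrides the extension guess."""
    if use_case is None:
        suffix = Path(filename).suffix.lower()
        use_case = EXTENSION_TO_USE_CASE.get(suffix, "general")
    if use_case not in PROMPTS:
        raise KeyError(f"No system prompt defined for use case: {use_case}")
    return PROMPTS[use_case]
```

Falling back to a general prompt, rather than to none, means a coder who forgets to pick a use case still gets some security guidance.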

The final step is to use a reasoning model, since these are designed to work through a problem step by step, which tends to suit coding well.

Now, none of this guarantees perfect, secure code. LLMs are basically very advanced predictive text generators, so what you're really doing is raising the probability that the generated code includes security measures instead of omitting them entirely.

You will still need code review, human and/or automated, but the baseline quality of the code should be higher. (Again, this depends on the model, especially if you're running quantized models to save space.)

Luckily, we're still at the early phases of using LLMs for productivity, so we can conceptualize a better way of doing things and work towards that.

A Better AI Coding Process

We'll start by defining some terminology, specifically the word "agent", because it has become a big buzzword in the "AI" field.

We'll be using OpenAI's definition of agent, which is as follows:

A system that can act independently to do tasks on your behalf.

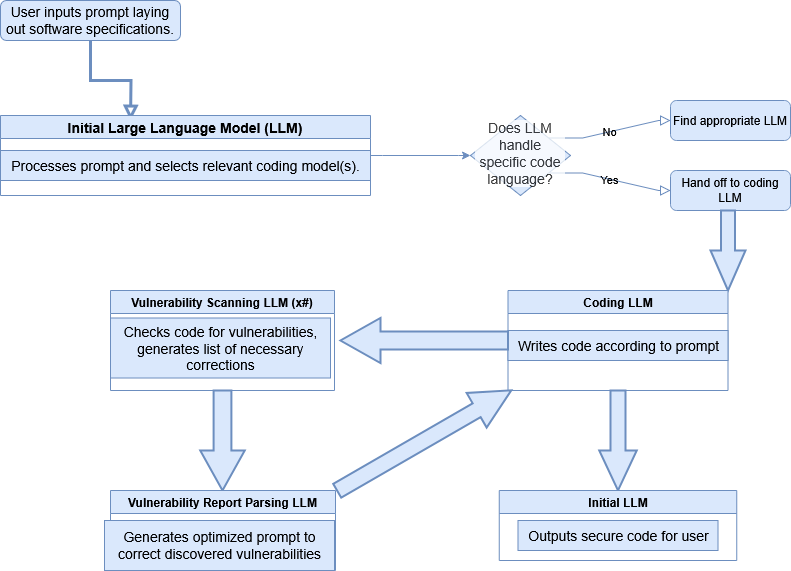

So for handling secure coding, an agent based procedure might look something like this:

- The user enters a prompt that lays out the desired software specification (features, file paths, data to use, etc.).

- An initial LLM processes the prompt, selects a coding-specialized model to handle the task, and optimizes the prompt for that model.

- An agent hands the prompt off to the coding LLM.

- The coding LLM writes code according to the initial LLM's prompt.

- Another agent transfers the code to one or more vulnerability scanning systems or LLMs. (If multiple scanners/LLMs are involved, agents transfer data among them.)

- Another agent passes the complete vulnerability report to another LLM, which turns it into an optimized code refactoring prompt.

- An agent passes the code refactoring prompt back to the coding LLM.

- The code loops through this process until most or all vulnerabilities are eliminated or mitigated.

- An agent passes the finalized code to the initial LLM for display to the user.
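The core of this loop can be sketched in a few lines of Python. This is a minimal sketch under several assumptions: each LLM or scanner is represented as a plain function, so the "agents" that move data between them are just function calls here; transport, authentication, and prompt wording are left out; and the names (`secure_coding_loop`, `Finding`, and the callable parameters) are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Finding:
    rule: str      # e.g. "sql_injection"
    line: int      # line in the generated code
    message: str   # human-readable description


def secure_coding_loop(
    spec: str,
    optimize_prompt: Callable[[str], str],                       # initial LLM: spec -> coding prompt
    generate_code: Callable[[str], str],                         # coding LLM: prompt -> code
    scanners: list[Callable[[str], list[Finding]]],              # vulnerability scanners/LLMs
    build_refactor_prompt: Callable[[str, list[Finding]], str],  # report -> refactoring prompt
    max_iterations: int = 3,
) -> tuple[str, list[Finding]]:
    """Generate code, scan it, and feed findings back until clean or out of iterations."""
    code = generate_code(optimize_prompt(spec))
    findings = [f for scan in scanners for f in scan(code)]
    iterations = 0
    while findings and iterations < max_iterations:
        code = generate_code(build_refactor_prompt(code, findings))
        # Re-scan the revised code so the returned findings are never stale.
        findings = [f for scan in scanners for f in scan(code)]
        iterations += 1
    return code, findings
```

The `max_iterations` cap keeps the loop from running forever if the coding LLM never fully resolves a finding, and any leftover findings are returned alongside the code so a human reviewer can see what remains.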

That's a lot of steps, but remember, everything after the user's initial prompt is automated.

Now, a complex, multistep process like this does require thinking about the individual parts of the process:

- How much variability/randomness in results (temperature) should be used for code generating LLMs?

- Can system prompts be used?

- Should the initial LLM use a system prompt?

- What system prompts should be used on what LLMs?

- Should the vulnerability scanners be LLMs?

- How many vulnerability scanners should be used?

- What data format should the vulnerability report be in?

- How many iterations of vulnerability scanning should occur before code is pushed to the user?

- What LLM or Small Language Model should handle parsing the vulnerability report into a code revision prompt?

- Should reasoning steps from each part of the process be exposed to the user, either during processing or at the end?

- Should reasoning steps be logged, but not displayed to the user?

- How should the data transfer agents be coded?

- What protections can be provided for the data in transit?
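One way to pin down answers to several of these questions is to record them in an explicit, reviewable configuration rather than leaving them implicit in code. The sketch below is hypothetical: the field names and defaults are assumptions, the 0.0 to 2.0 temperature range matches OpenAI's API but not every provider, and "sarif" refers to the OASIS Static Analysis Results Interchange Format, one existing standard that several code scanners can emit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PipelineConfig:
    """Hypothetical settings recording the pipeline design decisions."""
    coder_temperature: float = 0.2      # lower = less randomness in generated code
    use_system_prompts: bool = True
    scanner_names: tuple[str, ...] = ("static_analyzer", "llm_reviewer")
    report_format: str = "json"         # e.g. "json" or "sarif"
    max_iterations: int = 3             # scan/refactor rounds before returning code
    show_reasoning_to_user: bool = False
    log_reasoning: bool = True          # keep reasoning for audits even if hidden
    encrypt_in_transit: bool = True     # e.g. TLS between agents

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the config looks sane."""
        problems = []
        if not 0.0 <= self.coder_temperature <= 2.0:
            problems.append("coder_temperature should be between 0.0 and 2.0")
        if self.max_iterations < 1:
            problems.append("max_iterations must be at least 1")
        if not self.scanner_names:
            problems.append("at least one vulnerability scanner is required")
        if self.report_format not in ("json", "sarif"):
            problems.append(f"unsupported report_format: {self.report_format}")
        if not self.encrypt_in_transit:
            problems.append("data in transit between agents is unprotected")
        return problems
```

Keeping these decisions in one frozen object makes them easy to review, version, and hand to third-party validators.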

And these are merely the considerations I can think of off the top of my head. This is definitely a situation where a team of individuals with a wide array of viewpoints and mindsets should take a design thinking approach to developing the process. In fact, I believe the MoSCoW method, where the team determines what the process Must have, Should have, Could have, and Won't have, would be an ideal way to design these types of processes.

I suspect that many of the answers to these (and many more) questions will boil down to "What is an acceptable level of complexity for the available resources?" For example, a company with a proper AI server rack setup might be more willing to use multiple LLMs for vulnerability scanning, while a company reliant on a cloud solution might opt for a single LLM, depending on its AI provider's pricing plans.
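With more than one scanner, the agent assembling the vulnerability report has to merge overlapping results. A minimal sketch, assuming each scanner returns findings as dicts with `rule` and `line` keys (a hypothetical format); duplicates are collapsed and each merged finding records which scanners reported it, so agreement between scanners can serve as a rough confidence signal. A single-LLM setup would simply pass one entry in `reports`.

```python
from collections import defaultdict


def merge_findings(reports: dict[str, list[dict]]) -> list[dict]:
    """Merge findings from several scanners, deduplicating by (rule, line).

    Each finding is assumed to be a dict with 'rule' and 'line' keys.
    """
    merged: dict[tuple[str, int], set[str]] = defaultdict(set)
    for scanner, findings in reports.items():
        for f in findings:
            merged[(f["rule"], f["line"])].add(scanner)
    # Sort by (rule, line) so the report is deterministic.
    return [
        {"rule": rule, "line": line, "reported_by": sorted(scanners)}
        for (rule, line), scanners in sorted(merged.items())
    ]
```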

Obviously, this would require a good deal of validation, especially from third parties, but if done well, it should produce higher-quality, more secure code.

Should We Do This?

There's a famous quote from the movie Jurassic Park that honestly should live rent free in everyone's heads:

...your scientists were so preoccupied with whether or not they could that they didn’t stop to think if they should.

-Dr. Ian Malcolm

So, should we hand off coding to "AI" (LLMs specifically)? I say "yes", for several reasons:

- Software code complexity and interdependency has already reached a point where programmers often encounter scenarios where things work without them fully understanding the how or why.

- Many, if not most, people find it easier to articulate desired end goals than to perfectly recall and execute methods and procedures without a great deal of practice.

- As the complexity of programming languages and vulnerabilities increases, so does the cognitive load on programmers, eventually leading to intellectual burnout.

- The never-ending discovery of new vulnerabilities and malware makes it hard for humans to keep up with best coding practices without suffering information overload.

- Updating system prompts and LLM datasets can be done faster than training multiple people.

- Automating the code revision process should produce time savings that can be used for pre-release code function testing in simulated hardware/software environments.

- LLMs can be used to help ensure that human-readable comments and error/log messages exist in the code, which humans might omit or write poorly.

- People are already doing it due to the productivity gains - large scale coding benefits too much to revert to human only coding.

Are there plenty of pitfalls and issues we can't even foresee? Absolutely. But it's also disingenuous to think anyone can stop AI coding from being a thing. There are just too many incentives for people to use LLMs to code, especially people who are better at logic than at the specifics of coding/programming. The best we can do is create a process that generates secure-by-design code and spread it as far and wide as possible.