CyberSecurity Project: Transparent Filtering Bridge (+ Extras) 9.2

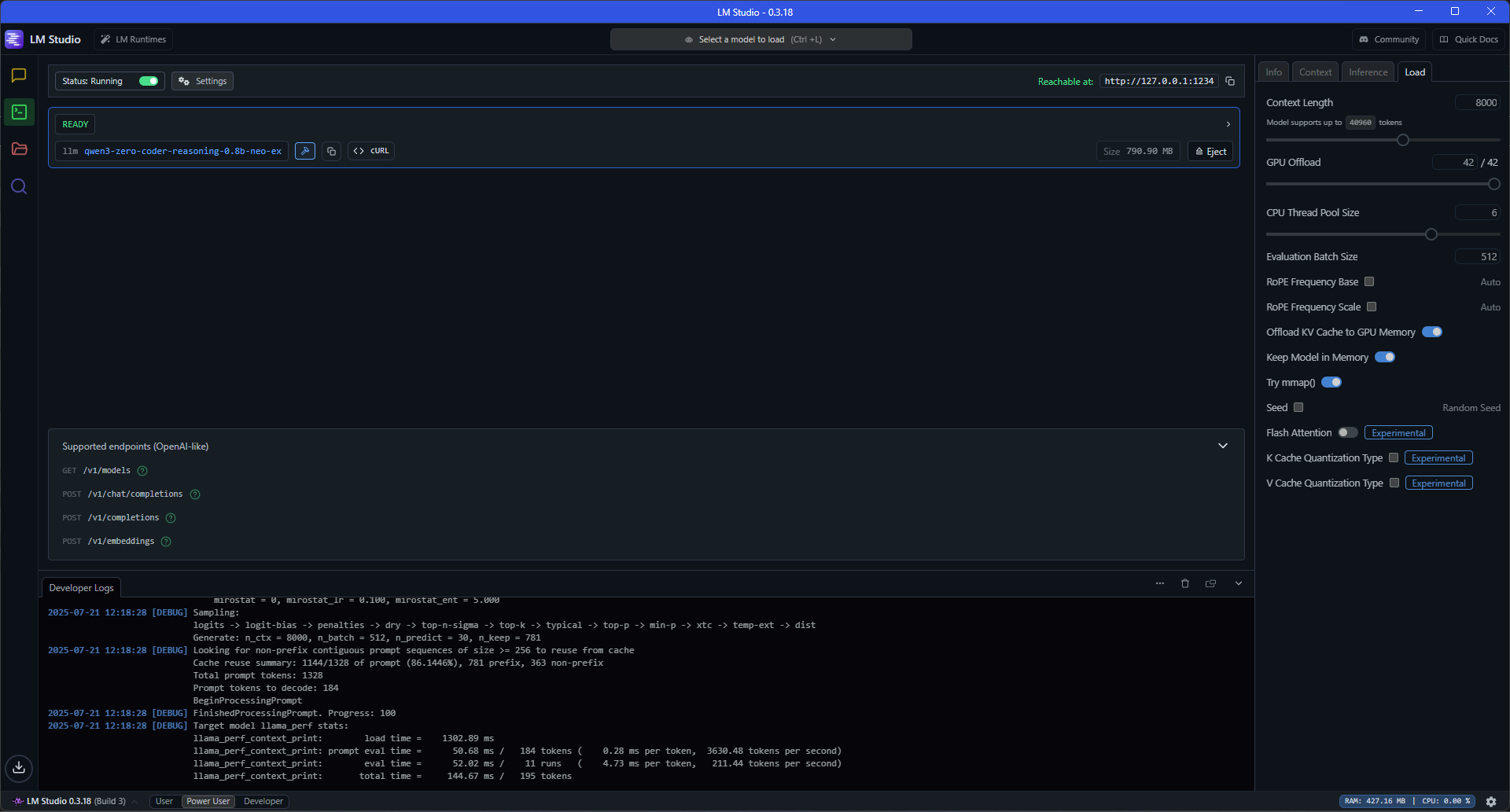

In article 9.0, we got a log dump of about 1.5 weeks' worth of blocked connections through the Transparent Filtering Bridge. Today, we're going to analyze that log with the help of ChatGPT, but first, we're going to run some more tests on Qwen3-Zero-Coder-Reasoning-0.8B-Neo-Ex from article 9.1.


Qwen3-Zero-Coder-Reasoning-0.8B-Neo-Ex for PowerShell

In our previous test, this model proved absolutely incapable of generating code anywhere near ChatGPT's or Copilot's level. Even at its best, when tasked with updating a script, it doesn't do a great job of figuring out how to improve the code.

But how does it do when it's just given an objective?

Well, I gave the model these prompts from article 9.0.

Prompt 1:

Write me a powershell script that does the following:

-reads content of file `filter.log`

-reads lines of the following format `2025-06-25T11:06:30-04:00 Informational filterlog 68,,,ff197ebab7ec0da7fe008f0f6ffee42b,bridge0,match,block,out,4,0x0,,127,21852,0,DF,6,tcp,52,Home IP,1.1.1.1,21124,443,0,S,2052234833,,64240,,mss;nop;wscale;nop;nop;sackOK`

-counts unique and duplicate IPv4 addresses

-identifies unique IPv4 addresses

-outputs unique IPv4 address count, duplicate IPv4 address count, and all unique IPv4 addresses to new file `filter-cleaned.log`

Prompt 2:

Write me a powershell script that does the following:

-reads content of file `filter.log`

-reads lines of the following format `2025-06-25T11:06:30-04:00 Informational filterlog 68,,,ff197ebab7ec0da7fe008f0f6ffee42b,bridge0,match,block,out,4,0x0,,127,21852,0,DF,6,tcp,52,Home IP,1.1.1.1,21124,443,0,S,2052234833,,64240,,mss;nop;wscale;nop;nop;sackOK`

-counts the number of `in` and `out` entries

-generates a count of how many times unique IPv4 addresses appear in the log

-Generates a list of how many unique IPv4 addresses are associated with `in` or `out` entries, or both

-outputs all data to new file `filter-statistics.log`

So here's what it produced for prompt 1:

```powershell
# Read the input file
$InputFile = "filter.log"
$Lines = Get-TextFile($InputFile)

# Initialize data structures for unique IP addresses and frequency counts
uniqueIPs = @()
duplicateIPs = @()

# Process each line to extract relevant information
foreach ($Line in $Lines)
{
    # Split the line into fields using commas
    $Fields = $Line.Split(",")
    if ($Field.Count -eq 1) {
        continue
    }
    # Extract the "mss" field and convert it to a string
    $IPs = $Fields[2]
    # Convert the IP address to a string for filtering
    $IP = "$($IPs.ToString())"
    # Add the unique IP address and frequency count
    if ($uniqueIPs.ContainsKey($IP))
    $duplicateIPs.Add((New-Entry -Key=$IP, Count=1)
    else
    $uniqueIPs[$IP] = 0
    # Add the duplicate counts to a separate list
    if (DuplicateIPs.ContainsKey($IP)) {
        DuplicateIPs[$IP] += 1
    } else
    DuplicateIPs[$IP] = 1
}

# Write results to new file
$NewFile = "filter-cleaned-qwen.log"
Output-Text("Unique IP Addresses Count:", UniqueIPs.Count)
Output-Text("Duplicate IP Count: ", DuplicateIPs.Keys.Count)
Output-Text("Total Unique IPs: ", UniqueIPs.Keys.Count, " | Duplicates: ", DuplicateIPs.Keys.Count)
Output-Text("Unique IPs: ", UniqueIPs.Keys, " with duplicates: ", DuplicateIPs.Keys, "\n")

# Write the results to the new file
foreach ($key in UniqueIPs.Keys)
{
    Output-Text("$key (Unique): Count: $DuplicateIPs[$key] | Total: $UniqueIPs[$key]\n")
}
```

Just looking at the code, one massive red flag is that it isn't trying to pattern match the IPv4 structure at all. Instead, it tries to pull a value out of the CSV part of the log entry, and it picks the wrong one: splitting the sample line on commas makes `$Fields[2]` the empty Anchor column, not an IP address.

Tossing it into DiffChecker alongside the code I generated with ChatGPT revealed something astounding: this script has 18 more lines of code. Most of the extra lines seem related to sorting and writing values, and they point to a great deal of inefficiency.

I had no great expectations when I ran the code, and sure enough, it errored out and produced no output. Based on that, I'm comfortable saying a sub-1B-parameter model is likely to be absolute garbage when it comes to coding.
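For contrast, here is roughly what prompt 1 calls for, written in Python (the language ChatGPT chose for the analysis script later in this article) rather than PowerShell. It's a minimal sketch, not the script from article 9.0: it pattern matches the dotted-quad IPv4 structure, validates each match with Python's `ipaddress` module, and assumes "duplicate" means an address that appears more than once. The function names are hypothetical.

```python
import ipaddress
import re
from collections import Counter

# Dotted-quad candidates; ipaddress below rejects octets above 255.
IPV4_CANDIDATE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def extract_ipv4(line: str) -> list[str]:
    """Return every valid IPv4 address found anywhere in one log line."""
    found = []
    for candidate in IPV4_CANDIDATE.findall(line):
        try:
            ipaddress.IPv4Address(candidate)
        except ipaddress.AddressValueError:
            continue  # e.g. '999.1.1.1' looks like an address but isn't
        found.append(candidate)
    return found


def summarize(lines) -> tuple[int, int, list[str]]:
    """Return (unique count, duplicate count, sorted unique addresses).

    Assumption: a 'duplicate' is an address that appears more than once.
    """
    counts = Counter(ip for line in lines for ip in extract_ipv4(line))
    unique_ips = sorted(counts, key=ipaddress.IPv4Address)
    duplicate_count = sum(1 for n in counts.values() if n > 1)
    return len(unique_ips), duplicate_count, unique_ips


def write_report(src: str = "filter.log", dst: str = "filter-cleaned.log") -> None:
    """Read the filter log and write the summary prompt 1 asks for."""
    with open(src, encoding="utf-8") as log:
        unique_count, duplicate_count, unique_ips = summarize(log)
    with open(dst, "w", encoding="utf-8") as out:
        out.write(f"Unique IPv4 addresses: {unique_count}\n")
        out.write(f"Duplicate IPv4 addresses: {duplicate_count}\n")
        out.writelines(ip + "\n" for ip in unique_ips)
```

Because the pattern matches anywhere in the line, the home (source) address gets counted too; restricting the search to a specific column would exclude it.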

Data Analysis Prompting

As I have pointed out in previous articles, any AI tool is only as good as its system and user prompts. LLMs are very much Garbage In, Garbage Out machines, but rapid iteration can get you from garbage to usable content.

To design this prompt, I will use the MoSCoW method, where I explicitly call out the Must Have, Should Have, Could Have, and Won't Have requirements for the prompt:

| Must Have | Should Have | Could Have | Won't Have |
|---|---|---|---|

Here's the prompt I created:

Analyze the uploaded pfsense style log. Follow these directives in your analysis:

- Run all analysis operations 3 times to validate findings.

- Do NOT produce results that have not been validated.

- Do NOT produce results based on data from outside the log.

- Do NOT produce results that are not based on data in the log.

- Ensure all results are written in plain English.

- Associate IP addresses and Ports in the following format: [IP Address]:[Port]

- Explain any and all recommendations for IPs to examine further.

Highlight patterns in the data, including times and frequency of connections.

Here's the conversation with ChatGPT, which shows some fascinating behavior as it iterates its way toward a Python script to do the data analysis.

To check that the results weren't hallucinated, I opened the log file in VS Code and searched for the dates and IP addresses. The entry counts were correct, and the IP addresses paired with the ports did exist... but the script paired each destination IP with the column immediately after it, which is the source port, not the destination port.

It is somewhat curious that it decided that 5 was an appropriate number of entries for each list, but this is something that could be expanded on in a revision of the prompt.
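The port mix-up is easy to make because a filter log entry is a headerless CSV, so pairing values by position invites off-by-one errors. Naming the columns removes the guesswork. Below is a minimal Python sketch, assuming IPv4 TCP or UDP entries in the pfSense filter log layout, which has an ECN column between TOS and TTL (in the sample line it's the empty field after `0x0`). `parse_filterlog` and `destination` are hypothetical helper names, and malformed or non-filterlog lines are not handled.

```python
# Column names shared by IPv4 TCP and UDP entries in the pfSense filter log
# format. Assumption: ICMP and IPv6 entries use different layouts and are not
# handled; TCP-only columns after data_length (flags, sequence, ...) are ignored.
IPV4_COLUMNS = [
    "rule", "subrule", "anchor", "tracker", "interface", "reason",
    "action", "direction", "ip_version", "tos", "ecn", "ttl", "id",
    "offset", "flags", "proto_id", "proto", "length",
    "src_ip", "dst_ip", "src_port", "dst_port", "data_length",
]


def parse_filterlog(line: str) -> dict[str, str]:
    """Map one well-formed 'filterlog' line onto named columns."""
    # Everything before the first comma is the syslog prefix plus the rule
    # number, e.g. '... Informational filterlog 68'.
    head, _, rest = line.strip().partition(",")
    rule = head.split()[-1]
    fields = [rule] + rest.split(",")
    # zip() stops at the shorter list, dropping the TCP-only tail columns.
    return dict(zip(IPV4_COLUMNS, fields))


def destination(entry: dict[str, str]) -> str:
    """Format the destination as [IP Address]:[Port]."""
    return f"{entry['dst_ip']}:{entry['dst_port']}"
```

With the sample line from prompt 1, `destination(parse_filterlog(line))` gives `1.1.1.1:443`, whereas pairing the destination IP with the next column over would give `1.1.1.1:21124`.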

Examining the Findings

The findings were as follows:

The five busiest minutes (timestamp at minute precision) are:

2025‑07‑15 09:04 — 232 entries

2025‑06‑17 09:59 — 189 entries

2025‑07‑03 19:09 — 132 entries

2025‑06‑17 09:58 — 125 entries

2025‑06‑28 16:40 — 122 entries
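Minute precision just means truncating each ISO 8601 timestamp to `YYYY-MM-DDTHH:MM` (its first 16 characters) and counting. A minimal Python sketch with a hypothetical `busiest_minutes` helper, assuming every entry starts with a timestamp formatted like the sample line and that all entries share one UTC offset (if the offset changed mid-log, the timestamps would need normalizing first):

```python
from collections import Counter


def busiest_minutes(lines, n=5):
    """Return the n busiest minutes as (minute, entry count) pairs."""
    # '2025-07-15T09:04:31-04:00 ...'[:16] -> '2025-07-15T09:04'.
    # Assumption: every entry shares the same UTC offset, so the local
    # timestamps can be compared directly without conversion.
    counts = Counter(line[:16] for line in lines if line.strip())
    return counts.most_common(n)
```

The default of `n=5` mirrors the five entries ChatGPT chose; raising it is a one-argument change.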

The top five most frequently seen IP:Port pairs (across all entries) are:

13.107.253.41:55731 — 19 occurrences

34.107.243.93:60753 — 18 occurrences

54.84.219.196:52647 — 18 occurrences

3.218.230.195:57497 — 18 occurrences

44.215.87.86:59136 — 18 occurrences

Of the five IP addresses it highlighted, 4 were considered to be benign by both VirusTotal vendor analysis and the community. 34.107.243.93 was considered benign by vendors, but members of the community flagged it as potentially malicious. It might also be associated with Mozilla Firefox and the push.services.mozilla.com domain.

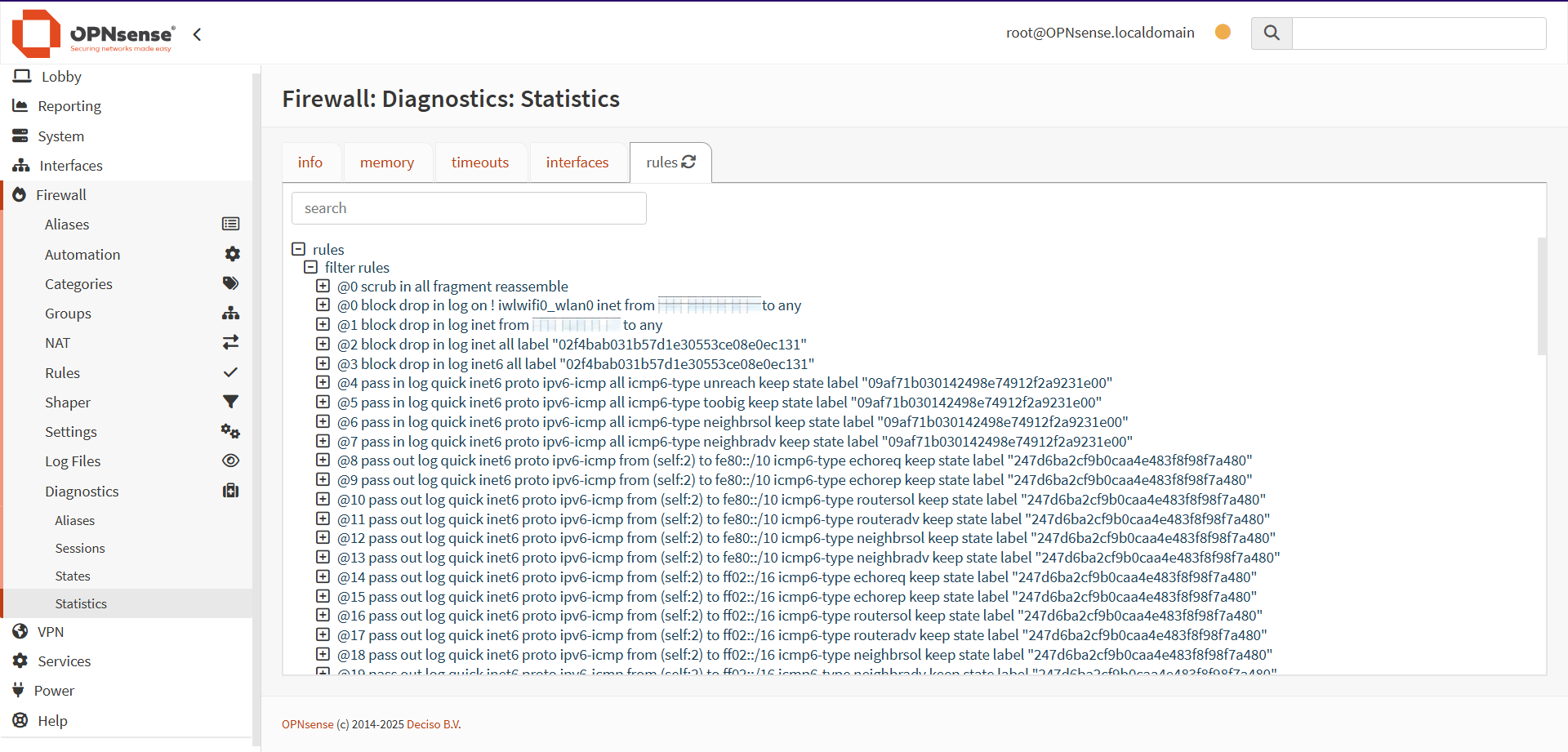

Unfortunately, there's not much to glean from the rule that's blocking all these IPs:

This is one of OPNsense's automatically generated rules, but there is no way for me to figure out which one, because I can't open the actual rules in the bridge interface GUI. I would have to dig through the log entries and hope to find enough information there to work out which rule is being applied.
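The log itself can narrow the search a little: in the pfSense column layout, the first field of each entry is the rule number and the fourth is the tracker ID, so tallying blocked entries by that pair shows which identifiers account for most of the traffic. A minimal Python sketch; `rules_by_volume` is a hypothetical name, and whether a tracker ID can be traced back to a specific auto-generated rule in OPNsense on this setup is an assumption the sketch does not test.

```python
from collections import Counter


def rules_by_volume(lines):
    """Count 'block' entries per (rule number, tracker ID), most common first."""
    counts = Counter()
    for line in lines:
        # The syslog prefix and rule number come before the first comma.
        head, sep, rest = line.strip().partition(",")
        tokens = head.split()
        if not sep or not tokens:
            continue  # not a filterlog CSV line
        fields = [tokens[-1]] + rest.split(",")
        # Index 0 = rule number, 3 = tracker ID, 6 = action.
        if len(fields) > 6 and fields[6] == "block":
            counts[(fields[0], fields[3])] += 1
    return counts.most_common()
```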

Unfortunately, it seems the same rule applied to the 2025‑07‑15 09:04 time window.

Checking the IP addresses, I started at the top of the list (chronologically the last entries in that time window) with 67.195.176.151. Accounting for 63 of the 232 entries, this is all traffic on port 993 (secure IMAP), so it's related to e-mail. Even after looking through my inboxes, it isn't clear why there was so much mail traffic that day.

Next up is 3.229.151.51, an HTTPS connection to an Amazon IP address that could be just about anything.

3.223.181.245 is another Amazon IP address, but this might be an IP that does scans of people's systems.

Coming in at 46 entries, 38.34.185.21 is somewhat interesting: it's associated with a VPN, sits in a Japanese IP range, and has at least one Suspicious verdict on VirusTotal. That said, it could just be a VPN connection used to get around a region lock.

34.249.225.129 is yet another Amazon IP, but this one is located in Ireland and has a MalwareURL verdict flagging it as malware. The last analysis was done 3 days ago, but with no indication of which analysis that was, it's hard to tell whether this IP was compromised in the past or is compromised now.

24.49.122.85 is mildly interesting because it's an incredibly nondescript IP address. Even the WHOIS information revealed very little. Google Search's AI Mode claims that it is a Google IP address:

The IP address "24.49.122.85" belongs to the public IP address range. It is associated with Google LLC, located in Mountain View, California. More specifically, it is identified as a search engine spider or a component within Google's infrastructure.

-Google AI Mode, 12:43 PM, 7/23/2025

With 16 entries, 172.183.7.193 is a Microsoft server of some sort. Oddly, although this is exactly the kind of traffic I'd expect a tracking rule to block, it was caught by rule 2. Its WHOIS information shows that it belongs to "Microsoft Routing, Peering, and DNS".

Next up is a pair of Akamai IP addresses with a single entry each, 184.84.138.181 and 184.84.136.40. A WHOIS lookup revealed nothing of real value, and neither address has any real analysis on VirusTotal, which is slightly suspicious.

At 11 entries, 23.50.112.217 is another Akamai IP, although from Akamai International.

With 3 entries each, 54.167.177.211 and 15.197.243.254 are another set of Amazon IPs.

At 10 entries, 71.74.45.223 is an IP related to my ISP, which may or may not be related to streaming app use.

Next come 3.212.87.58 with 20 entries, 98.82.158.136 with 1, 3.232.210.51 with 13, and 98.82.159.50 with 17, all of them Amazon IPs.

109.176.239.70 is interesting because it's a Hack The Box IP, with 11 entries. With 42 entries, 140.82.113.25 is a GitHub IP address; I guess I was busy doing some uploads or repo management! 140.82.114.26 is another GitHub IP, with 35 entries.

With 71 connections, 172.183.7.194 is a Microsoft IP of some sort.

At 6 entries, 165.22.185.144 is a DigitalOcean IP. DigitalOcean is a digital infrastructure company with a somewhat checkered reputation, so this is definitely an interesting finding.

45.79.197.58 is another Akamai IP with 44 entries.

162.159.130.232 has six entries and 172.64.145.202 has three; both are Cloudflare IPs.
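Each count in this walkthrough comes down to filtering entries to one minute and tallying the remote address. A minimal Python sketch, assuming the column positions of the sample line and treating the destination IP of `out` entries and the source IP of `in` entries as the remote end (the article doesn't say which column its counts came from). It also records the directions each IP appeared in, which is the `in`/`out`/both breakdown prompt 2 asked for. `window_breakdown` is a hypothetical name.

```python
from collections import Counter, defaultdict

# 0-based filterlog column positions (IPv4 layout, ECN column included).
DIRECTION, SRC_IP, DST_IP = 7, 18, 19


def window_breakdown(lines, minute):
    """Tally remote IPs, and the directions they appear in, for one minute.

    `minute` is a timestamp prefix such as '2025-07-15T09:04'.
    """
    counts = Counter()
    directions = defaultdict(set)
    for line in lines:
        if not line.startswith(minute):
            continue
        head, sep, rest = line.strip().partition(",")
        tokens = head.split()
        if not sep or not tokens:
            continue
        fields = [tokens[-1]] + rest.split(",")
        if len(fields) <= DST_IP:
            continue  # too short to contain both IP columns
        # Assumption: the remote end is the destination for outbound
        # entries and the source for inbound ones.
        direction = fields[DIRECTION]
        remote = fields[DST_IP] if direction == "out" else fields[SRC_IP]
        counts[remote] += 1
        directions[remote].add(direction)
    return counts.most_common(), dict(directions)
```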

Overall, it's pretty clear that my current Alias lists are insufficient for filtering this traffic. The default rules sit below my custom rules in the execution order, so the fact that the default rules caught all of this traffic is a sign that very little of it is triggering the rules that target IPs from the various DNS blocklists.

Next Steps

The first obvious step is to get blocklists focused on IP addresses. This will help narrow down the blocked connections and better identify potential threat traffic.

Second is to refine the prompt for the log analysis. Since the first attempt was quite good at avoiding hallucinations, the next one is just an iterative improvement:

Analyze the uploaded pfsense style log. Follow these directives in your analysis:

- pfsense log column values: Rule Number, Sub-rule Number, Anchor, Tracker ID, Real Interface, Reason, Action, Direction, IP Version, TOS, ECN, TTL, IP ID, Fragment Offset, IP Flags, Protocol ID, Protocol Text, Packet Length, Source IP, Destination IP, Source Port, Destination Port, Data Length, TCP Flags, Sequence Number, Acknowledgment Number, Window Size, Urgent Pointer, TCP Options

- Run all analysis operations 3 times to validate findings.

- Do NOT produce results that have not been validated.

- Do NOT produce results based on data from outside the log.

- Do NOT produce results that are not based on data in the log.

- Ensure all results are written in plain English.

- Associate IP addresses and Ports in the following format: [IP Address]:[Port]

- Explain any and all recommendations for IPs to examine further.

- Generate results in groups of 10 entries.

Highlight patterns in the data, including times, frequency of connections, protocol IDs, packet length.

Third is to collect a stable set of data from after the OPNsense 25.7 update, which naturally caused a whole bunch of problems. Solving them required a number of reboots and service restarts, which pollute the data a fair bit. So I'll collect new data and then compare it against the existing data.
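Tallies like the ones the revised prompt asks for are simple to reproduce locally, which gives a way to check ChatGPT's numbers and to compare the old and new data sets. A minimal Python sketch, assuming the pfSense IPv4 column positions with the ECN column counted (0-based: protocol ID at index 15, packet length at index 17). `compare` lists the 10 most common values in the new log next to their counts in the old one; both function names are hypothetical.

```python
from collections import Counter

# 0-based filterlog column positions (IPv4 layout, ECN column included).
PROTO_ID = 15
PACKET_LENGTH = 17


def tally(lines, column):
    """Count the values seen in one filterlog column across all lines."""
    counts = Counter()
    for line in lines:
        head, sep, rest = line.strip().partition(",")
        tokens = head.split()
        if not sep or not tokens:
            continue  # not a filterlog CSV line
        fields = [tokens[-1]] + rest.split(",")
        if len(fields) > column:
            counts[fields[column]] += 1
    return counts


def compare(old_lines, new_lines, column, n=10):
    """Top-n values in the new log, each with its (old, new) counts."""
    old, new = tally(old_lines, column), tally(new_lines, column)
    # Counter returns 0 for values the old log never contained.
    return [(value, old[value], count) for value, count in new.most_common(n)]
```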