CyberSecurity Project: Transparent Filtering Bridge (+ Extras) 9.0

It's been a bit over two weeks, so it's time to check in on the Transparent Filtering Bridge. Having finally gotten the hardware to maintain a functioning management connection, I left the device to do its job, filtering traffic as it passed between the modem and the primary router of my mesh network. With a mixture of firewall and Suricata rules in place, what I'm primarily looking for is which rules, if any, have been triggered.


For Some Reason, Config Changes

One thing that happened between the last entry and now was an automatic Windows update, which closed my browser, where I had the OPNSense webGUI tab open. Keeping that tab open seemed to help maintain the connection to the device, which would intermittently fail. I also had a cron job set to reset the interface every 6 hours to address the issue.

However, when I tried accessing the OPNSense webGUI to start this article, I got connection errors.

So I connected a keyboard and mouse to the miniPC, turned on the monitor, and saw that there was no IP address on the console. Attempting to reset the services wound up freezing on the OPT1 interface (the WiFi), so I cancelled out of that. Then I restarted the miniPC, which brought the IP address back to the interface but did not restore actual web connectivity.

This meant it was time to dig into the /usr/local/etc/rc.d/wpa_supplicant file again, which is where I made an interesting discovery. At some point, for some reason, changes had been made to the file. My best guess was that this occurred after the installation of the WiFi firmware, but it's unclear why that would change some comment lines and strip the rcvar value.

Since the rcvar value names the setting that tells the machine to enable WiFi authentication, its absence explained the WiFi connection issues.

After making minimal changes to the file (restoring the comment lines to their original text and putting back the missing rcvar value), I reset the WiFi service and regained connectivity.
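One way to catch this kind of silent change sooner would be to record a hash of the repaired script and periodically compare the live file against it, while also checking that the rcvar line still has a value. The following is a minimal Python sketch of that idea, not a built-in OPNSense feature. The function names are hypothetical, and the `wpa_supplicant_enable` value in the comment is an assumed example, since the original rcvar value isn't recorded here.

```python
import hashlib
import re
from typing import List, Optional

# Matches an rc.d line such as rcvar="wpa_supplicant_enable" (example value, assumed; quotes optional).
RCVAR_RE = re.compile(r'^\s*rcvar=["\']?([^"\'\s]*)["\']?\s*$', re.MULTILINE)


def sha256_text(text: str) -> str:
    """Return the SHA-256 hex digest of a script's contents."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def rcvar_value(text: str) -> Optional[str]:
    """Return the rcvar value, '' if the line exists but is empty, or None if absent."""
    match = RCVAR_RE.search(text)
    return match.group(1) if match else None


def check_rc_script(current: str, baseline_hash: str) -> List[str]:
    """Compare a script against a known-good hash and flag a missing or empty rcvar."""
    problems = []
    if sha256_text(current) != baseline_hash:
        problems.append("contents differ from baseline")
    if not rcvar_value(current):  # treats both None and '' as a problem
        problems.append("rcvar is missing or empty")
    return problems
```

In practice, `current` would be the text of `/usr/local/etc/rc.d/wpa_supplicant` and `baseline_hash` the `sha256_text()` result saved right after the repair; an empty list means nothing has changed.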

Tweaking the Cron Job Timer

Due to the unusual nature of the wpa_supplicant file changes, I decided to reduce the time gap between WiFi interface resets from 6 hours to 4. This would give me more opportunities to retain or regain WiFi access should connection issues continue.
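Assuming the reset job uses a cron-style step in its hour field (`*/6` before, `*/4` now; the exact job definition isn't shown here), this small Python sketch lists the hours such a schedule fires and the longest wait between resets. The helper names are hypothetical.

```python
from typing import List


def cron_step_hours(step: int) -> List[int]:
    """Hours matched by a cron hour field of */step: 0, step, 2*step, ... up to 23."""
    return list(range(0, 24, step))


def longest_gap(hours: List[int]) -> int:
    """Longest wait in hours between consecutive resets, including the wrap past midnight."""
    gaps = [later - earlier for earlier, later in zip(hours, hours[1:])]
    gaps.append(24 - hours[-1] + hours[0])
    return max(gaps)
```

With `*/4`, the interface resets six times a day instead of four, and the worst-case wait drops from 6 hours to 4. A step that doesn't divide 24 evenly, such as `*/5`, fires at 0, 5, 10, 15, and 20, leaving a shorter 4-hour gap across midnight, so divisors of 24 keep the spacing even.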

Not even an hour after I got access back, the WiFi connection went down, which proved this was a smart decision.

In a major irritation, the WiFi connection seemed to be failing silently a few minutes after being reset, making it hard to interact with the webGUI. In a bizarre situation, I managed to access a specific page of the webGUI, despite not being able to reach the webGUI in general. Even more annoying was the fact that I managed to randomly get access by refreshing the tabs for the webGUI.

More experimentation (and possibly FreeBSD kernel updates) is clearly needed to resolve this issue.

Checking the Firewall Logs

After updating the cron job configuration, the first thing I did was check the firewall logs.

One of the more irritating aspects of OPNSense is that all firewall logs seem to be at the same priority level: Informational. This means that every rule that passes or blocks traffic generates a log at that same level, so it is very easy to get lost in all the data. Luckily, the provided search option is quite effective; it was easy to get just the logs of blocked connections on the bridge interface.

(Incidentally, it seems logical to me that someone running a firewall would put logging of blocked traffic at a higher log level.)
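The same filter can be reproduced offline on an exported log. Below is a minimal Python sketch, assuming each exported line looks like the examples that follow (timestamp, severity, the `filterlog` process name, then comma-separated fields) and relying on pf's raw filter log format, where the fifth field is the interface and the seventh is the action. The function name and default interface are illustrative.

```python
from typing import Iterable, List


def blocked_on(lines: Iterable[str], interface: str = "bridge0") -> List[str]:
    """Keep filterlog lines whose action is 'block' on the given interface."""
    kept = []
    for line in lines:
        # Prefix: timestamp, severity, process name, then the comma-separated fields.
        parts = line.split(" ", 3)
        if len(parts) < 4 or parts[2] != "filterlog":
            continue
        fields = parts[3].split(",")
        # Index 4 is the interface and index 6 the action in pf's raw filter log format.
        if len(fields) > 6 and fields[4] == interface and fields[6] == "block":
            kept.append(line)
    return kept
```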

Since these logs were all in a machine-readable format that I had no experience with, I decided to download a 50-entry log for analysis with ChatGPT and other tools. The first thing I did was sanitize my personal IP addresses.

Here is an example of a block coming in:

2025-06-17T09:58:31-04:00 Informational filterlog 2,,,02f4bab031b57d1e30553ce08e0ec131,bridge0,match,block,in,4,0x0,,238,27873,0,DF,6,tcp,71,3.77.205.84,Home IP,443,52742,31,PA,4127654128:4127654159,2661296198,118,,

For contrast, a block going out:

2025-06-25T11:06:30-04:00 Informational filterlog 68,,,ff197ebab7ec0da7fe008f0f6ffee42b,bridge0,match,block,out,4,0x0,,127,21852,0,DF,6,tcp,52,Home IP,1.1.1.1,21124,443,0,S,2052234833,,64240,,mss;nop;wscale;nop;nop;sackOK

The parts I can read without assistance are mostly the interface information, the fact that the rule matched, the action taken, the direction of the traffic, the protocol, the ports, and the IP addresses.

Annoyingly, there's no way to get a simplified view that includes the rule name/description, which would make identifying what is going on much easier.

To figure out what the logs are truly saying, I provided ChatGPT with the above entries and the following query:

In plain english, explain the OPNSense firewall log format based on these two example entries:

ChatGPT's response was as follows, omitting the Timestamp, Severity, and Process information:

Following that, the comma-separated values represent fields defined by pf's raw filter log format (as described in the Netgate documentation):

- Rule Number (`2` / `68`): The index of the firewall rule that matched.

- Sub-rule Number (blank): Secondary rule index if using nested rules.

- Anchor (blank): Name of the anchor (rule set) containing the rule.

- Tracker ID (`02f4ba…` / `ff197e…`): Identifier of the rule that matched; it is the same value that appears as the rule's `label`.

- Real Interface (`bridge0`): The actual network interface the packet traversed.

- Reason (`match`): Why it was logged (typically `match`).

- Action (`block`): What the firewall did (`pass` or `block`).

- Direction (`in` / `out`): Traffic direction relative to the interface.

- IP Version (`4`): Internet Protocol version (`4` or `6`).

- TOS (`0x0`): Type-of-Service byte from the IP header.

- ECN (blank): Explicit Congestion Notification bits.

- TTL (`238` / `127`): Time-to-Live (IPv4) or Hop Limit (IPv6).

- IP ID (`27873` / `21852`): Packet identification number.

- Fragment Offset (`0`): Position within a fragmented packet.

- IP Flags (`DF`): IP fragmentation flags (`DF`, `MF`, or `none`).

- Protocol ID (`6`): Numeric protocol identifier (e.g., `6` = TCP, `17` = UDP).

- Protocol Text (`tcp`): Human-readable protocol name.

- Packet Length (`71` / `52`): Total size of the IP packet.

- Source IP (`3.77.205.84` / `Home IP`): Originating IP address.

- Destination IP (`Home IP` / `1.1.1.1`): Target IP address.

- Source Port (`443` / `21124`): Originating port (for TCP/UDP).

- Destination Port (`52742` / `443`): Target port (for TCP/UDP).

- Data Length (`31` / `0`): Payload size (excluding headers).

- TCP Flags (`PA` / `S`): TCP control flags (e.g., `S`, `PA`, `F`).

- Sequence Number (`4127654128:4127654159` / `2052234833`): TCP sequence number; in the first entry it is logged as a start:end range covering the 31 data bytes.

- Acknowledgment Number (`2661296198` / blank): TCP acknowledgment.

- Window Size (`118` / `64240`): TCP receive window.

- Urgent Pointer (blank / blank): Urgent data pointer if set.

- TCP Options (blank / `mss;nop;wscale;nop;nop;sackOK`): Negotiated TCP options.

These fields allow you to reconstruct packet details, rule decisions, and flow context directly from the log entries.
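To check a field mapping like this against real entries, a short parser helps. This Python sketch assumes exported lines have the same shape as the two examples (timestamp, severity, process name, then the CSV fields) and only handles IPv4 TCP entries; the field names are my own labels for the documented positions, not official ones.

```python
from typing import Dict

# Labels for the documented positions (my own names; IPv4 + TCP entries only).
COMMON = ["rule", "subrule", "anchor", "label", "interface", "reason",
          "action", "direction", "ip_version"]
IPV4 = ["tos", "ecn", "ttl", "ip_id", "frag_offset", "ip_flags",
        "proto_id", "proto", "length", "src", "dst"]
TCP = ["src_port", "dst_port", "data_length", "tcp_flags", "seq",
       "ack", "window", "urg", "tcp_options"]
FIELDS = COMMON + IPV4 + TCP  # 29 positions in total


def parse_filterlog(line: str) -> Dict[str, str]:
    """Split one exported line into its prefix plus named CSV fields."""
    timestamp, severity, process, csv_part = line.split(" ", 3)
    values = csv_part.split(",")
    # Index 8 is the IP version and index 16 the protocol text for IPv4 entries.
    if len(values) < len(FIELDS) or values[8] != "4" or values[16] != "tcp":
        raise ValueError("this sketch only handles IPv4 TCP entries")
    entry = dict(zip(FIELDS, values))
    entry.update(timestamp=timestamp, severity=severity, process=process)
    return entry
```

Applied to the inbound example, it puts `2661296198` in `ack` and `118` in `window`, and the two halves of the `seq` range differ by 31, the entry's data length.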

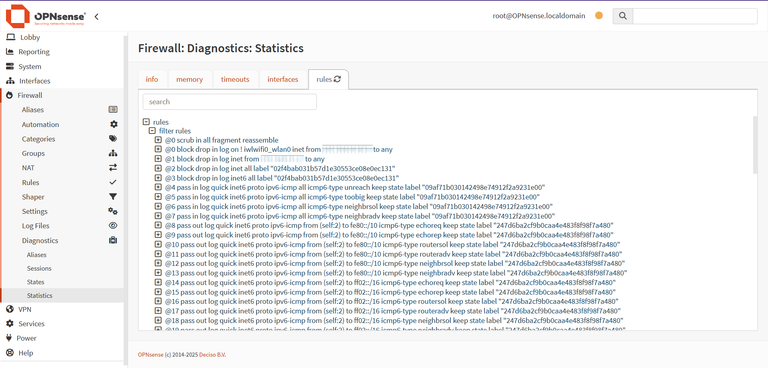

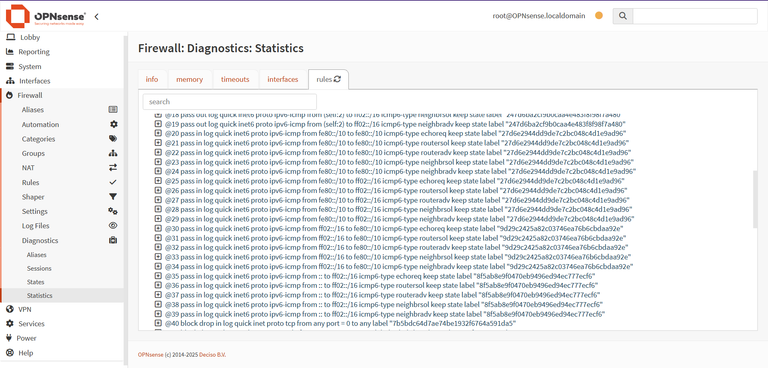

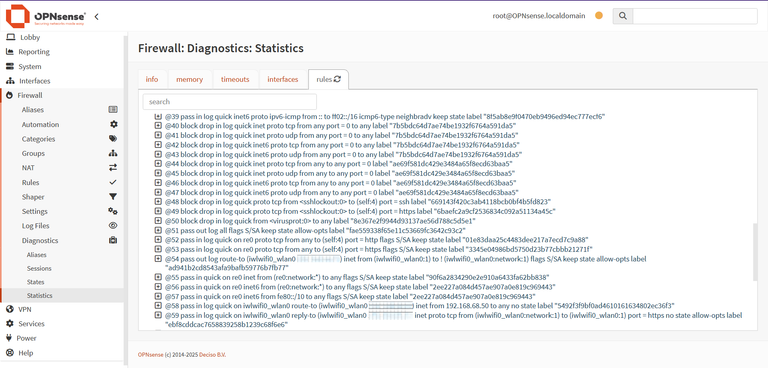

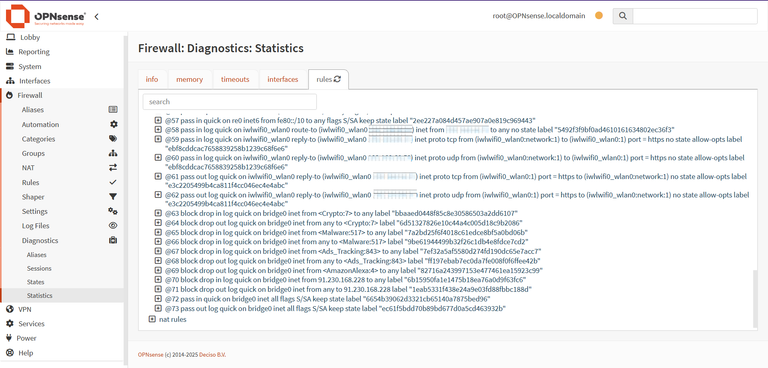

In another showcase of poor UX/UI decisions, the way to correlate rules with their rule numbers in the webGUI is to access an obscure page, one most users will never think to look at. This is somewhat forgivable, since the numbers themselves are not permanent assignments and can change each time the system is rebooted or the rules are reloaded. But it is still suboptimal UX.

Unfortunately, rule 2 was fairly cryptic, likely because it is a default rule: `@2 block drop in log inet all label "02f4bab031b57d1e30553ce08e0ec131"`. Read literally, it silently drops and logs all inbound IPv4 traffic; because it lacks the `quick` keyword, a later matching pass rule can still override it, which makes it a default-deny catch-all.

Rule 68, on the other hand, was much more understandable: `@68 block drop out log quick on bridge0 inet from any to <Ads_Tracking:843> label "ff197ebab7ec0da7fe008f0f6ffee42b"`

So that outbound connection was a (perceived) attempt to connect to an ad/tracking server, successfully showing that my Aliases and rules do work.
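Since the fourth field of every log line carries the same hash that appears as the matching rule's `label`, the lookup can be automated instead of cross-referenced by hand. This minimal Python sketch assumes the rule listing has been copied into lines that begin with `@`, like the two above. Keying on the label rather than the rule number is my own design choice, on the assumption that the label stays the same when rules are renumbered; the function names are hypothetical.

```python
import re
from typing import Dict, Iterable, Tuple

# Matches rule listings such as: @68 block drop out ... label "ff197e..."
RULE_RE = re.compile(r'^@(\d+)\s+(.*?)\s+label\s+"([0-9a-f]+)"')


def index_rules(rule_lines: Iterable[str]) -> Dict[str, Tuple[int, str]]:
    """Map each rule label to its (rule number, rule text)."""
    rules = {}
    for line in rule_lines:
        match = RULE_RE.match(line.strip())
        if match:
            rules[match.group(3)] = (int(match.group(1)), match.group(2))
    return rules


def describe(log_line: str, rules: Dict[str, Tuple[int, str]]) -> str:
    """Name the rule behind a filterlog line using its label (4th CSV value)."""
    csv_part = log_line.split(" ", 3)[3]
    label = csv_part.split(",")[3]
    if label not in rules:
        return f"unknown rule (label {label})"
    number, text = rules[label]
    return f"rule {number}: {text}"
```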

Filtering Out the Noise

Since I wanted to inspect these suspicious IPs, I needed a way to filter my log for unique IPs. I also wanted to know how many unique IPs there were and how many were duplicates.

To do this, I used Microsoft Copilot to generate a PowerShell script to filter the data for me. The prompt was as follows:

Write me a powershell script that does the following:

-reads content of file `filter.log`

-reads lines of the following format `2025-06-25T11:06:30-04:00 Informational filterlog 68,,,ff197ebab7ec0da7fe008f0f6ffee42b,bridge0,match,block,out,4,0x0,,127,21852,0,DF,6,tcp,52,Home IP,1.1.1.1,21124,443,0,S,2052234833,,64240,,mss;nop;wscale;nop;nop;sackOK`

-counts unique and duplicate IPv4 addresses

-identifies unique IPv4 addresses

-outputs unique IPv4 address count, duplicate IPv4 address count, and all unique IPv4 addresses to new file `filter-cleaned.log`

This was the output:

# PowerShell Script to Extract and Count IPv4 Addresses

```powershell
# Define input and output files
$inputFile = 'filter.log'
$outputFile = 'filter-cleaned.log'

# IPv4 regex (0–255 range)
$ipv4Pattern = '\b(?:(?:25[0-5]|2[0-4]\d|[01]?\d?\d)\.){3}(?:25[0-5]|2[0-4]\d|[01]?\d?\d)\b'

# Read all lines, extract every IPv4 match (source and destination addresses alike)
$allIps = Get-Content $inputFile |
    ForEach-Object {
        [regex]::Matches($_, $ipv4Pattern) |
            ForEach-Object { $_.Value }
    }

# Compute counts
$uniqueIps = $allIps | Sort-Object | Get-Unique
$uniqueCount = $uniqueIps.Count
$duplicateCount = $allIps.Count - $uniqueCount

# Prepare header lines
$header = @(
    "Unique IPv4 address count: $uniqueCount"
    "Duplicate IPv4 address count: $duplicateCount"
    ""
    "Unique IPv4 addresses:"
)

# Write header and unique IPs to the output file
$header | Set-Content $outputFile
$uniqueIps | Add-Content $outputFile
```

---

## How This Works

- The script reads every line from `filter.log` and applies a strict IPv4 regex to find address patterns.

- All matches are collected into `$allIps`, preserving duplicates.

- Unique addresses are extracted via `Sort-Object | Get-Unique`.

- Duplicate count is derived by subtracting the number of unique addresses from total matches.

- Results (counts + list of unique IPs) are written to `filter-cleaned.log`.

---

## Further Enhancements You Might Consider

- If you need the list of IPs that appear more than once, group `$allIps` with `Group-Object` and filter where `.Count -gt 1`.

- For very large logs, use `Select-String -Pattern $ipv4Pattern -AllMatches` to stream matches rather than loading the entire file at once.

- Extend the script to handle IPv6 by adding a second regex and interleaving results.

- Export the results in CSV or JSON for integration with monitoring tools or dashboards.

I ran the script and got this confusing result:

Unique IPv4 address count: 877
Duplicate IPv4 address count: 11597

Believing I had only a 50-entry sample, I began searching the original log file for random IPs from the unique list, only to keep finding them there. The mystery was solved when I opened the log file in VS Code, revealing that I had dumped every log entry matching the blocked-bridge query. I was, in fact, analyzing the full two or so weeks' worth of log entries, not just the 50 entries on display in the webGUI.

To gain higher-level insights, I returned to Copilot and asked it for a new script:

Write me a powershell script that does the following:

-reads content of file `filter.log`

-reads lines of the following format `2025-06-25T11:06:30-04:00 Informational filterlog 68,,,ff197ebab7ec0da7fe008f0f6ffee42b,bridge0,match,block,out,4,0x0,,127,21852,0,DF,6,tcp,52,Home IP,1.1.1.1,21124,443,0,S,2052234833,,64240,,mss;nop;wscale;nop;nop;sackOK`

-counts the number of `in` and `out` entries

-generates a count of how many times unique IPv4 addresses appear in the log

-Generates a list of how many unique IPv4 addresses are associated with `in` or `out` entries, or both

-outputs all data to new file `filter-statistics.log`

Here's what Copilot gave me:

```powershell
# PowerShell Script: filter-statistics.ps1
# Reads filter.log, parses “in”/“out” entries and IPv4s, produces filter-statistics.log

# Set input/output paths
$inputFile = 'filter.log'
$outputFile = 'filter-statistics.log'

# Initialize counters & storage
$inCount = 0
$outCount = 0
$ipStats = @{}

# Strict IPv4 regex
$ipv4Pattern = '\b(?:(?:25[0-5]|2[0-4]\d|[01]?\d?\d)\.){3}(?:25[0-5]|2[0-4]\d|[01]?\d?\d)\b'

# Process each line
Get-Content $inputFile | ForEach-Object {
    $line = $_.Trim()
    if ([string]::IsNullOrWhiteSpace($line)) { return }

    # Split on commas
    $fields = $line -split ','

    # Skip malformed or too-short lines
    if ($fields.Count -lt 20) { return }

    # Extract direction (field 7) and IP (field 19, the destination address;
    # field 18 holds the source address)
    $direction = $fields[7].ToLower()
    $ip = $fields[19].Trim()

    # Validate IPv4 format (IPv6 entries and placeholders are skipped)
    if (-not [regex]::IsMatch($ip, $ipv4Pattern)) { return }

    # Tally global in/out counts
    switch ($direction) {
        'in'  { $inCount++ }
        'out' { $outCount++ }
    }

    # Initialize per-IP record if needed
    if (-not $ipStats.ContainsKey($ip)) {
        $ipStats[$ip] = [PSCustomObject]@{
            Total = 0
            In    = 0
            Out   = 0
        }
    }

    # Update per-IP stats
    $ipStats[$ip].Total++
    if ($direction -eq 'in')  { $ipStats[$ip].In++ }
    if ($direction -eq 'out') { $ipStats[$ip].Out++ }
}

# Compute summary numbers
$uniqueIpCount = $ipStats.Keys.Count
$onlyInCount = ($ipStats.GetEnumerator() |
    Where-Object { $_.Value.In -gt 0 -and $_.Value.Out -eq 0 }).Count
$onlyOutCount = ($ipStats.GetEnumerator() |
    Where-Object { $_.Value.Out -gt 0 -and $_.Value.In -eq 0 }).Count
$bothCount = ($ipStats.GetEnumerator() |
    Where-Object { $_.Value.In -gt 0 -and $_.Value.Out -gt 0 }).Count

# Build output lines
$output = @()
$output += "Total IN entries: $inCount"
$output += "Total OUT entries: $outCount"
$output += "Unique IPv4 addresses: $uniqueIpCount"
$output += "Unique IPv4 addresses only IN: $onlyInCount"
$output += "Unique IPv4 addresses only OUT: $onlyOutCount"
$output += "Unique IPv4 addresses both IN and OUT: $bothCount"
$output += ''
$output += "IPv4 address frequencies (desc):"

# Append per-IP frequencies
$ipStats.GetEnumerator() |
    Sort-Object { $_.Value.Total } -Descending |
    ForEach-Object { $output += ("{0}: {1}" -f $_.Key, $_.Value.Total) }

# Write everything to the output file
$output | Set-Content $outputFile
```

Save this as `filter-statistics.ps1` in the same folder as your `filter.log` file.

Run it in PowerShell—after completion, `filter-statistics.log` will contain:

- Total counts of “in” and “out” entries

- Number of unique IPv4 addresses

- How many unique addresses appear only in, only out, or in both directions

- A breakdown of each IPv4 address and how many times it occurs

The results were mind-boggling:

Total IN entries: 10301
Total OUT entries: 108
Unique IPv4 addresses: 643
Unique IPv4 addresses only IN: 641
Unique IPv4 addresses only OUT: 2
Unique IPv4 addresses both IN and OUT: 0
IPv4 address frequencies (desc):
216.21.13.15: 840
216.21.13.14: 840
34.107.243.93: 314
52.18.33.33: 242
54.246.231.114: 213
23.197.209.185: 194
23.197.210.93: 167
15.197.177.151: 109
3.33.169.122: 109
67.195.176.151: 108
1.1.1.1: 106

First of all, the 840 entries each involving 216.21.13.15 and 216.21.13.14 were quite alarming. The VirusTotal pages for each IP (216.21.13.15, 216.21.13.14) made it pretty clear that they were associated with malware. I immediately examined the logs and saw that these connections were blocked on the way in. In fact, the only outbound connections that were blocked were to 1.1.1.1 and a local network address.

It's unclear whether there's actually malicious traffic involving Cloudflare (1.1.1.1), or whether malware is just sometimes routing through Cloudflare. Something similar might be happening with 34.107.243.93, an IP address that seems to be associated with Firefox but might be used for any number of malicious activities.

The other addresses are mostly Amazon or Akamai cloud IPs, which means they could be used by anyone for anything at any moment.

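Something that's a bit concerning about these two scripts is that their numbers don't line up (877 versus 643 unique addresses), despite both taking in the same log, which was not edited between runs. The difference comes from what each script counts: the first collects every IPv4 address that appears anywhere in a line, so both source and destination addresses are included, while the second reads only the 20th comma-separated field (`$fields[19]`), which holds the destination address, and skips lines where that field isn't an IPv4 address. Despite using the same LLM in the same conversation, Copilot interpreted two similar requests in different ways, so it pays to read generated scripts before trusting their numbers.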
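To get both views from a single pass, the sketch below (in Python, with hypothetical function names) counts source and destination addresses separately. It assumes the same line format as the examples above and only looks at IPv4 entries, where the addresses sit at the 19th and 20th comma-separated positions.

```python
import ipaddress
from collections import Counter
from typing import Iterable, Tuple


def is_ipv4(text: str) -> bool:
    """True for a dotted-quad IPv4 address; placeholders like 'Home IP' fail."""
    try:
        ipaddress.IPv4Address(text)
        return True
    except ValueError:
        return False


def count_by_role(lines: Iterable[str]) -> Tuple[Counter, Counter]:
    """Count IPv4 source and destination addresses separately."""
    sources, destinations = Counter(), Counter()
    for line in lines:
        parts = line.split(" ", 3)  # timestamp, severity, process, CSV fields
        if len(parts) < 4:
            continue
        fields = parts[3].split(",")
        # Only IPv4 entries keep the addresses at indexes 18 (source) and 19 (destination).
        if len(fields) < 20 or fields[8] != "4":
            continue
        if is_ipv4(fields[18]):
            sources[fields[18]] += 1
        if is_ipv4(fields[19]):
            destinations[fields[19]] += 1
    return sources, destinations
```

Here `len(destinations)` corresponds to what the second script reports as unique addresses, while `len(set(sources) | set(destinations))` is closer to the first script's unique count.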

Checking Up On Suricata

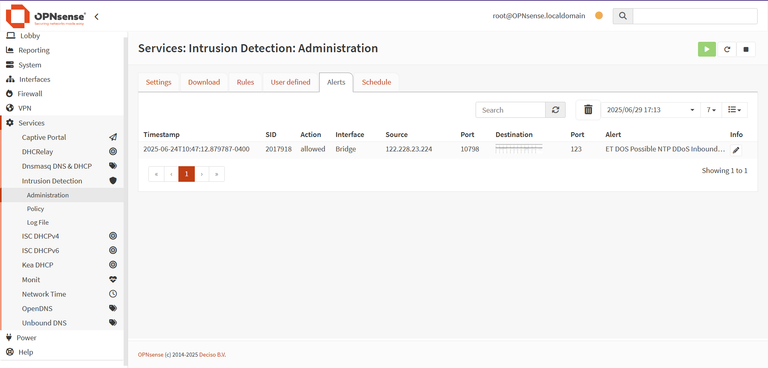

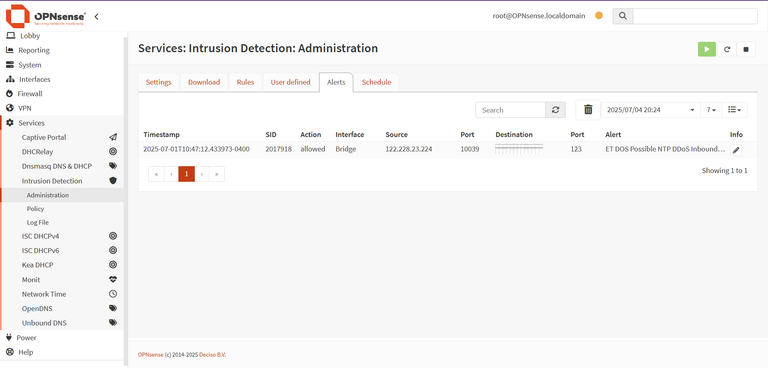

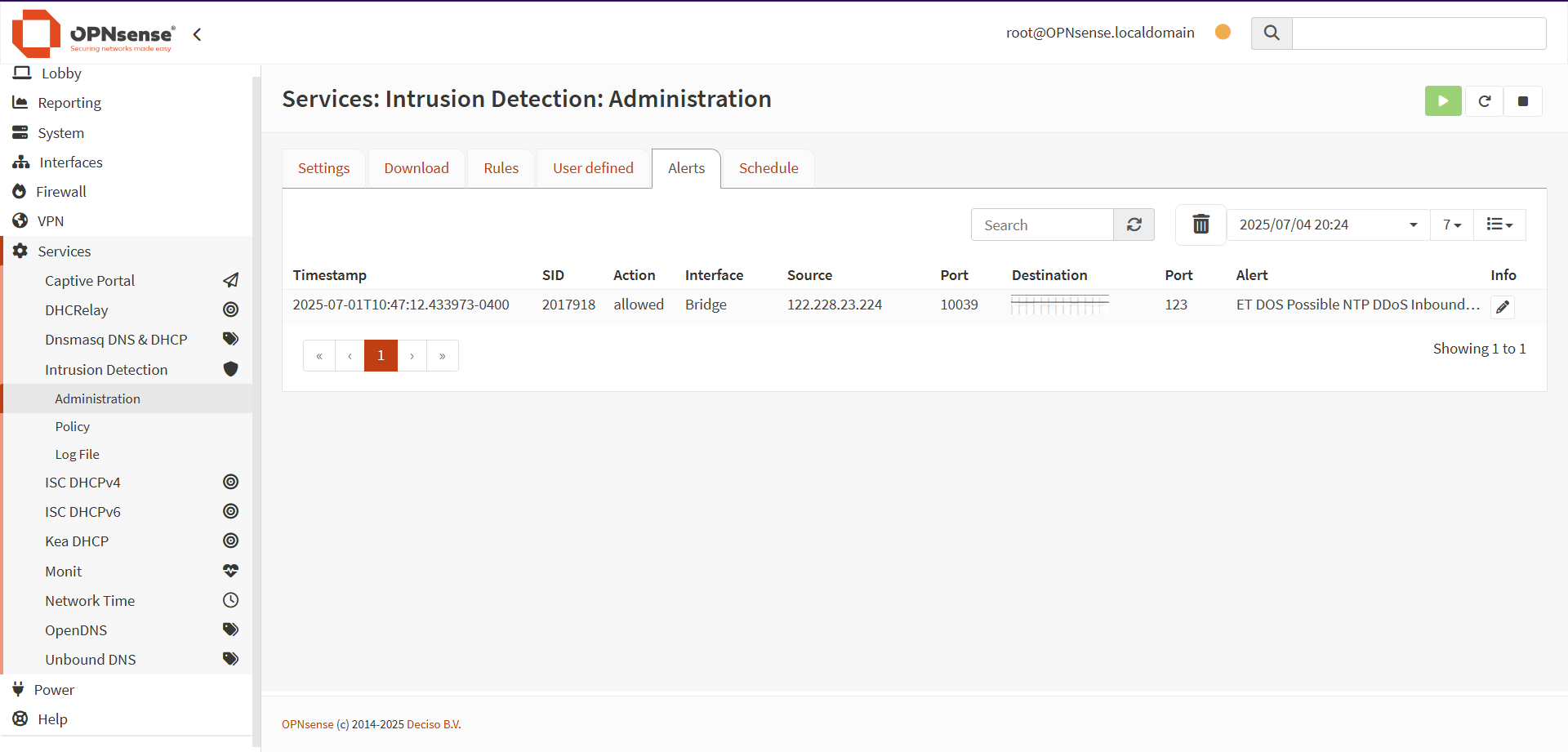

After obtaining the log data for the standard firewall, I then proceeded to check the Suricata logs. I didn't expect much from this, because as an IPS, it would really only activate if there was an active threat.

As it turned out, I was both right and wrong: I was correct to assume little would be revealed, but wrong in that I did get two detections.

Curiously, they were both Network Time Protocol (NTP) DoS scans, which were annoyingly allowed by Suricata.

A VirusTotal check revealed that the source was an actor in the People's Republic of China that has been doing this type of thing for at least a year. Interestingly, one member of the VirusTotal community posted about escalating numbers of scans over a period of months, while I saw basically two in a two-week span.

What this really proved was the value of a multi-layered detection setup. The blocking firewall rules clearly did not include this IP address, but if I hadn't had Suricata, I wouldn't have known this even happened. It would be a total false negative, and if for some reason I got hit with a DDoS attack, I would have no warning or idea what was happening.
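Alerts like these NTP scans can also be pulled straight from Suricata's EVE JSON log, which writes one JSON object per line; alert records have an `event_type` of `alert` and an `alert.action` of either `allowed` or `blocked`. The Python sketch below tallies the allowed ones by signature and source address. The log location (I believe `/var/log/suricata/eve.json` on OPNSense) and the function name are assumptions, so check them against your own install.

```python
import json
from collections import Counter
from typing import Iterable


def allowed_alerts(eve_lines: Iterable[str]) -> Counter:
    """Tally Suricata alerts that were allowed through, keyed by (signature, source IP)."""
    tally = Counter()
    for raw in eve_lines:
        try:
            event = json.loads(raw)
        except json.JSONDecodeError:
            continue  # skip blank, truncated, or non-JSON lines
        if not isinstance(event, dict) or event.get("event_type") != "alert":
            continue
        alert = event.get("alert", {})
        if alert.get("action") == "allowed":
            tally[(alert.get("signature", "?"), event.get("src_ip", "?"))] += 1
    return tally
```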

What To Do Next

Normally, there would be a clear path forward, but there are a few factors that complicate things:

- The inconsistent connectivity makes it hard to update and iterate things in a timely manner.

- Figuring out how to revise the `wpa_supplicant` file to fix the connectivity issues could take a great deal of time.

- There's a major OPNSense version update scheduled for the end of the month, which may require me to do a lot of reconfiguration if I can't simply do an in-place update.

All of these are reasons not to start another round of data collection and analysis with the Transparent Filtering Bridge.

However, since I already have the log for the period between these latest two posts, I can experiment with various methods for cleaning and analyzing the data while addressing the other issues.

Takeaways

- There are FreeBSD/OPNSense processes that can alter the key files and behaviors required for me to maintain a wireless management connection.

- Testing firewall blocking rules aimed at malicious/tracking traffic is more effective over a longer period of time (24+ hours).

- In a Small Office/Home Office environment, Suricata IPS detections may be low/nonexistent, even over a prolonged period of time, depending on your configuration.

- The pf firewall log format used by OPNSense (documented by Netgate for pfSense) is quite complex and hard for humans to analyze, but it is incredibly information dense and ideal for machine analysis.

- At minimum, in a little over a week and a half, I blocked hundreds of malicious or tracking connection attempts coming into my network, and blocked outbound connections to only two addresses.

- In the same time span, I received two NTP scans that might be precursors to DoS attacks from actors in the PRC.

- The NTP scans were not detected by the standard firewall, proving that multi-layer defenses are key to maintaining good cyber hygiene.