CyberSecurity Project: Transparent Filtering Bridge (+ Extras) 6.0

Last time on the transparent filtering bridge project, I bounced my head off the blockers preventing me from setting up a WiFi management connection.

With that seemingly impassable until either FreeBSD's Intel WiFi driver or some of OPNsense's behavior gets revised, I've decided to pivot to a part of the project that doesn't depend on working hardware and software. This time, it's building IP address alias lists.


What's an Alias?

The OPNsense documentation provides a simple, clear definition:

Aliases are named lists of networks, hosts or ports that can be used as one entity by selecting the alias name in the various supported sections of the firewall. These aliases are particularly useful to condense firewall rules and minimize changes.

So what am I going to put in these alias lists?

Well, as it turns out, a number of the Pi-Hole block lists I use have a lot of IP address entries. Since Pi-Hole is a DNS black hole, it can't block those entries, so blocking them will be OPNsense's job.

How to Get the Aliases into OPNsense

Ironically, I stumbled onto a pretty simple solution for mass-importing alias entries while doing more troubleshooting on the OPNsense connectivity issues.

As it turns out, someone on the OPNsense forums made a helpful Python script that populates the JSON file holding all the alias lists from a CSV file. It's not clear to me why JSON was chosen for something that could potentially hold thousands of entries, rather than a bulk data format like CSV, but it's just one of those design choices end users have to live with.

The script is not very self-explanatory, so the author helpfully explained how to use it:

Steps:

- Install Python if you do not already have it

- Install any of the required Python modules if you don't have them (json, uuid, csv) - see below *

- Save the script as a *.py file

- Download your current list of Aliases from your OPNsense device: Firewall>Aliases>'download' (button bottom right of the Alias list) - save the file as 'opnsense_aliases.json'

- Create a CSV file called 'pfsense_alias.csv' with four columns called 'name', 'data', 'type' and 'description' in the first row, and which then contains your new Aliases, one per row (be sure the 'name' field meets the name constraints for Aliases)

- Update the two 'with open...' script lines to include the full path to each of the above files

- Run the script

- Upload the resultant json output file back into your OPNsense device: Firewall>Aliases>'upload' (next to the 'download' button)

What does this script do?

- It reads in the current list of aliases you downloaded from your device into a Python variable (dict)

- It then reads in the CSV file containing your list of new (additional) aliases, also into a Python dict

- It ADDS (appends) all the new aliases to the current list (no deletions, assuming you don't experience a uuid collision)

- It then saves the new expanded list of aliases over the previously downloaded alias json file

Obviously, you need Python and the required modules to run the script.
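For reference, here's a minimal sketch of the same merge idea. It is not the forum author's script, and the JSON layout it assumes (aliases nested under `aliases` → `alias`, keyed by UUID, with fields such as `enabled`, `name`, `type`, `content` and `description`) is a guess at OPNsense's export format, so check it against your own downloaded file first. `merge_csv_into_aliases` and `merge_files` are hypothetical names. Incidentally, json, uuid and csv all ship with Python's standard library, so nothing extra needs installing for this part.

```python
import csv
import io
import json
import uuid

# ASSUMPTION: the export nests aliases as
# {"aliases": {"alias": {"<uuid>": {...}}}} and uses the field names below.
# Compare against your own downloaded file before relying on this.


def merge_csv_into_aliases(alias_json, csv_text):
    """Append one alias per CSV row, each keyed by a fresh random UUID."""
    table = alias_json.setdefault("aliases", {}).setdefault("alias", {})
    for row in csv.DictReader(io.StringIO(csv_text)):
        key = str(uuid.uuid4())
        while key in table:  # re-roll on the (extremely unlikely) collision
            key = str(uuid.uuid4())
        table[key] = {
            "enabled": "1",
            "name": row["name"],
            "type": row["type"],
            "content": row["data"],  # copied verbatim from the CSV
            "description": row["description"],
        }
    return alias_json


def merge_files(json_path, csv_path):
    """Read both files, merge them, and overwrite the JSON file in place."""
    with open(json_path, encoding="utf-8") as f:
        aliases = json.load(f)
    with open(csv_path, newline="", encoding="utf-8") as f:
        merged = merge_csv_into_aliases(aliases, f.read())
    with open(json_path, "w", encoding="utf-8") as f:
        json.dump(merged, f, indent=2)
```

Calling `merge_files("opnsense_aliases.json", "pfsense_alias.csv")` overwrites the JSON in place, like the forum script does, so keeping an untouched copy of the original download seems wise before uploading anything.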

So, all I need to do is get the IP addresses into a properly formatted CSV, then I can upload that into OPNsense for later.
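Producing that CSV can be sketched in a few lines. This assumes one alias per category, with all of a category's IPs packed into the `data` column one per line. I haven't confirmed which separator the forum script or OPNsense expects there, so treat that, the `host` type, and the name check as assumptions; `build_alias_csv` is a hypothetical helper.

```python
import csv
import io
import re

# ASSUMPTION: letters, digits and underscores only is a conservative
# reading of OPNsense's alias-name rules.
SAFE_NAME = re.compile(r"^[A-Za-z0-9_]+$")


def build_alias_csv(categories):
    """Return CSV text with one alias row per {category_name: [ips]} entry."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["name", "data", "type", "description"])
    writer.writeheader()
    for name, ips in categories.items():
        if not SAFE_NAME.match(name):
            raise ValueError(f"unsafe alias name: {name!r}")
        writer.writerow({
            "name": name,
            # ASSUMPTION: entries are newline-separated inside one cell;
            # the csv module quotes the cell because it contains "\n".
            "data": "\n".join(ips),
            "type": "host",
            "description": f"{len(ips)} IPs scraped from Pi-Hole block lists",
        })
    return buf.getvalue()
```

Writing the result to `pfsense_alias.csv` with `open(..., "w", newline="")` keeps the csv module's line endings intact.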

Getting the IP Addresses

Thankfully, Pi-Hole makes accessing the block lists incredibly easy... perhaps too easy:

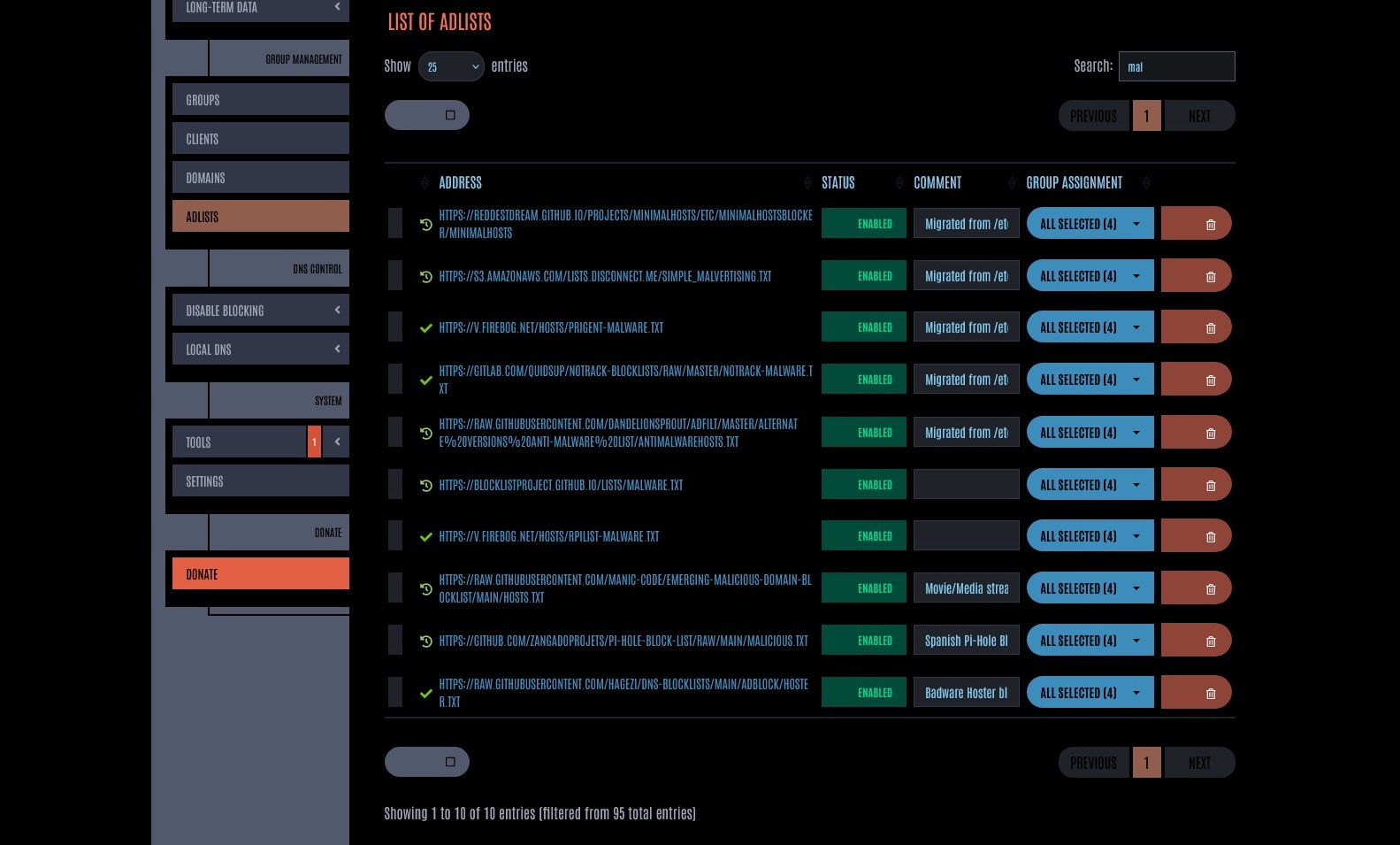

Why do I have nearly 100 block lists?

Well, to be honest, two main reasons:

- I don't like unified block lists, because they make troubleshooting harder and might block things I want.

- I'd rather accept a little extra CPU load from redundant entries than risk losing blocking when a block list dies.

It's simply not practical to handle all those lists at the same time, so my plan is as follows:

- Break the block lists up into categories.

- Pick one category to use.

- Develop a script to pull the IP addresses out of that category's block lists.

- Once validated, clone the script for other categories.

- Fill out the CSV with the data for each category.

- Run the CSV-to-JSON script to convert the data for import.

Picking the Category

To start off the project, I wanted a category with a reasonable number of block lists. Ideally, a dozen or fewer, so that I could troubleshoot things if necessary without too many headaches.

As it so happened, my first choice, "malware", actually was a perfect fit for this task. Even with a partial match, there were only 10 lists, which I whittled down to 8. These are the lists I used for the script development process:

- https://v.firebog.net/hosts/Prigent-Malware.txt

- https://gitlab.com/quidsup/notrack-blocklists/raw/master/notrack-malware.txt

- https://raw.githubusercontent.com/DandelionSprout/adfilt/master/Alternate%20versions%20Anti-Malware%20List/AntiMalwareHosts.txt

- https://blocklistproject.github.io/Lists/malware.txt

- https://v.firebog.net/hosts/RPiList-Malware.txt

- https://raw.githubusercontent.com/manic-code/Emerging-Malicious-Domain-Blocklist/main/hosts.txt

- https://github.com/zangadoprojets/pi-hole-block-list/raw/main/Malicious.txt

- https://raw.githubusercontent.com/hagezi/dns-blocklists/main/adblock/hoster.txt

Second Time's the Charm

Initially, I wanted to make the script to pull IP addresses from these block lists with PowerShell. I've had good results using PowerShell to scrape logs for specific content before, so I thought I could do it with minimal issues.

I was wrong.

Here's the prompt I used with Microsoft Copilot:

Write me a powershell script that does the following:

-downloads files from a list of specified urls

-iterates through the downloaded files for IPv4 and IPv6 addresses, except for 0.0.0.0 or ::

-generates a new list of IP addresses

-removes duplicate items

-outputs list to text file cleaned-IPs

-provides full error reporting

-requires user to press enter to exit

What I got was a script that used regular expressions in a for-each loop to scrape the files for IP addresses. That part worked. What didn't work, for no discernible reason, was handing the array of scraped IPs off to the rest of the script for cleanup and writing to a text file.

Passing the script to ChatGPT's Code LLM didn't resolve the issue either, so I decided to pivot in another direction.

Since I was already going to be using Python later in the process, I figured that using Python the whole way through made sense. I also decided to try a local LLM coding model for the Python iteration of the script, to get a feel for how well it produces usable code. It's a blazing fast model that can sometimes make your GPU coil whine.

Here's the prompt I used with the Qwen2.5-Coder-7B-Instruct-GGUF model:

Write me a python script that does the following:

-downloads text files from a list of specified urls

-obtains IPv4 and IPv6 addresses from the files, except for 0.0.0.0, 127.0.0.1, or ::

-obtains the first instance of any ip address

-generates a new list of IP addresses

-outputs list to text file cleaned-IPs

-provides full error reporting

-provides logging throughout the whole process for troubleshooting

Here's the output it gave me:

Certainly! Below is a Python script that accomplishes all of the tasks you've described. This script uses `requests` to download files, `re` for regex operations to extract IP addresses, and `logging` for comprehensive error reporting and logging.

First, make sure to install the required packages:

```
pip install requests
```

Here is the Python script:

```python
import requests
import re
import logging

# Setup logging configuration
logging.basicConfig(filename='download_ips.log', level=logging.DEBUG,
                    format='%(asctime)s - %(levelname)s - %(message)s')

def download_file(url):
    """Downloads a file from the given URL and returns its content."""
    try:
        response = requests.get(url)
        response.raise_for_status()  # Raises an HTTPError for bad responses
        return response.text
    except requests.exceptions.RequestException as e:
        logging.error(f"Failed to download {url}: {e}")
        return None

def extract_ips(text):
    """Extracts IPv4 and IPv6 addresses from the given text, excluding specified ones."""
    ip_regex = r"\b(?:(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.){3}(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\b|\b(?:[A-Fa-f0-9]{1,4}:){7}[A-Fa-f0-9]{1,4}\b"
    ip_pattern = re.compile(ip_regex)

    # Find all IP addresses
    ips = ip_pattern.findall(text)

    # Filter out specified IPs and get the first instance of any IP address
    filtered_ips = set()
    for ip in ips:
        if ip not in ["0.0.0.0", "127.0.0.1", "::"]:
            if ":" in ip:  # IPv6
                if len(filtered_ips) < 1 or ":" in list(filtered_ips)[0]:
                    filtered_ips.add(ip)
            else:  # IPv4
                if len(filtered_ips) < 1:
                    filtered_ips.add(ip)

    return list(filtered_ips)

def save_ips_to_file(ips, output_file):
    """Saves the list of IP addresses to a text file."""
    try:
        with open(output_file, 'w') as f:
            for ip in ips:
                f.write(f"{ip}\n")
        logging.info(f"IPs saved to {output_file}")
    except IOError as e:
        logging.error(f"Failed to save IPs to file: {e}")

def main():
    urls = [
        "http://example.com/file1.txt",
        "http://example.com/file2.txt"
        # Add more URLs here
    ]
    output_file = 'cleaned-IPs.txt'

    try:
        all_ips = []
        for url in urls:
            logging.info(f"Downloading file from {url}")
            content = download_file(url)
            if content is not None:
                extracted_ips = extract_ips(content)
                all_ips.extend(extracted_ips)
                logging.info(f"Extracted {len(extracted_ips)} IP(s) from {url}")

        unique_ips = list(set(all_ips))
        logging.info(f"Total unique IPs: {len(unique_ips)}")

        save_ips_to_file(unique_ips, output_file)
    except Exception as e:
        logging.error(f"An unexpected error occurred: {e}")

if __name__ == "__main__":
    main()
```

Explanation:

1. Logging Configuration: The script sets up basic logging to capture all log messages in a file named `download_ips.log`. Log levels include DEBUG, INFO, WARNING, ERROR, and CRITICAL.

2. Download File: The `download_file` function fetches the content of a URL using `requests.get()`. If there's any issue with downloading (e.g., network error), it logs an error message.

3. Extract IPs: The `extract_ips` function uses regular expressions to find all IPv4 and IPv6 addresses in the text. It then filters out specified IP addresses (`0.0.0.0`, `127.0.0.1`, and `::`) and ensures that only one instance of each IP address type is kept.

4. Save IPs: The `save_ips_to_file` function writes a list of IP addresses to a text file, one per line.

5. Main Function: This function orchestrates the entire process:

- Downloads files from specified URLs.

- Extracts and cleans IP addresses.

- Saves the cleaned list to a file.

The script provides detailed logging throughout the process for troubleshooting and error reporting. Adjust the `urls` list with your actual file URLs as needed.

This script actually produced results on the first try! However, it was a strange result - only 6 IP addresses.

During my attempts to troubleshoot the PowerShell script, I had visually confirmed that a single block list contained hundreds (at minimum) of IPv4 addresses.

Querying the same local model with the following prompt didn't really help resolve the problem:

Modify the script to do:

-read lines like 127.0.0.1 173.208.96.42

-obtain the part that is not 127.0.0.1

So I pivoted to Copilot to see if it could identify and solve the issue:

tell me why the following python code cannot retrieve ipv4 addresses from lines starting with 127.0.0.1 or 0.0.0.0:

```python
# Filter out specified IPs and get the first instance of any non-127.0.0.1 IP address
filtered_ips = set()
for ip in ips:
    if ip not in ["127.0.0.1"]:
        if ":" in ip:  # IPv6
            if len(filtered_ips) < 1 or ":" in list(filtered_ips)[0]:
                filtered_ips.add(ip)
        else:  # IPv4
            if len(filtered_ips) < 1:
                filtered_ips.add(ip)
return list(filtered_ips)
```

The Copilot response:

The given Python code is designed to extract IPv4 and IPv6 addresses while excluding `127.0.0.1`. However, it does not address the exclusion of `0.0.0.0`, and there are also some logical issues in the way it filters and adds IP addresses to the `filtered_ips` set. Here’s a detailed breakdown of why it might not be working as expected:

1. Regular Expression Issue:

- The regular expression correctly matches both IPv4 and IPv6 addresses, but the filtering logic for exclusion happens later in the code.

2. Filtering Logic Issue:

- The code currently only excludes `127.0.0.1` but does not exclude `0.0.0.0`.

- The logic for adding IPv4 addresses to the `filtered_ips` set checks if `filtered_ips` is empty (`len(filtered_ips) < 1`). This means only the first non-excluded IP will be added, which is not the desired behavior.

3. Set Operations:

- Using a set to store IP addresses is fine, but the logic should be adjusted to properly handle all matching IPs.

To fix these issues and ensure that all valid IPs are extracted while excluding `127.0.0.1` and `0.0.0.0`, you can revise the code as follows:

```python
import re

def extract_ips(text):
    """Extracts IPv4 and IPv6 addresses from the given text, excluding specified ones."""
    ip_regex = r"\b(?:(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.){3}(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\b|\b(?:[A-Fa-f0-9]{1,4}:){7}[A-Fa-f0-9]{1,4}\b"
    ip_pattern = re.compile(ip_regex)

    # Find all IP addresses
    ips = ip_pattern.findall(text)

    # Filter out specified IPs
    excluded_ips = ["127.0.0.1", "0.0.0.0"]
    filtered_ips = {ip for ip in ips if ip not in excluded_ips}

    return list(filtered_ips)

# Example usage
text = """Here are some IPs:
192.168.1.1, 127.0.0.1, 0.0.0.0, 8.8.8.8
2001:0db8:85a3:0000:0000:8a2e:0370:7334, ::1
"""
print(extract_ips(text))
```

Changes made:

1. Added `0.0.0.0` to the list of excluded IPs.

2. Simplified the filtering logic to directly exclude unwanted IPs using a set comprehension.

This revised code will now correctly extract all valid IP addresses while excluding both `127.0.0.1` and `0.0.0.0`. Let me know if you need any further adjustments or explanations!
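To see point 2 of Copilot's breakdown in action, here is a small reproduction (my own, not Copilot's) that runs the original filter loop and the set-comprehension fix side by side on three made-up hosts-style lines using documentation-range addresses. Because the original loop only accepts an IPv4 address while `filtered_ips` is still empty, each downloaded file can contribute at most one IPv4 address, which fits the handful of results from eight lists. `original_filter` and `fixed_filter` are hypothetical names.

```python
import re

# The IPv4/IPv6 pattern shared by both versions of extract_ips above.
IP_REGEX = re.compile(
    r"\b(?:(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.){3}"
    r"(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\b"
    r"|\b(?:[A-Fa-f0-9]{1,4}:){7}[A-Fa-f0-9]{1,4}\b"
)
EXCLUDED = ["0.0.0.0", "127.0.0.1", "::"]


def original_filter(text):
    """The first script's loop, bug included."""
    filtered_ips = set()
    for ip in IP_REGEX.findall(text):
        if ip not in EXCLUDED:
            if ":" in ip:  # IPv6
                if len(filtered_ips) < 1 or ":" in list(filtered_ips)[0]:
                    filtered_ips.add(ip)
            else:  # IPv4 is only accepted while the set is still empty
                if len(filtered_ips) < 1:
                    filtered_ips.add(ip)
    return list(filtered_ips)


def fixed_filter(text):
    """Set-comprehension fix: keep every match that isn't excluded."""
    # sorted() only makes the output order predictable for this demo.
    return sorted({ip for ip in IP_REGEX.findall(text) if ip not in EXCLUDED})


sample = "127.0.0.1 192.0.2.10\n127.0.0.1 192.0.2.20\n0.0.0.0 198.51.100.7\n"
print(original_filter(sample))  # ['192.0.2.10']
print(fixed_filter(sample))     # ['192.0.2.10', '192.0.2.20', '198.51.100.7']
```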

Inserting the refined code segment into the script solved the problem, and I extracted a total of 3642 IPv4 addresses. Annoyingly, a single 0.0.0.0 address made it through, but that was a small price to pay.
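The prompt's "first instance" bullet could also be read as "drop repeats but keep the first occurrence", which a plain `set` doesn't do because sets are unordered. A minimal sketch of that reading, using Python's standard `ipaddress` module as a final clean-up pass. It doesn't explain how the stray 0.0.0.0 got through, but a check on the parsed address should catch it regardless. `first_instances` is a hypothetical name, and the input is assumed to be one list of extracted strings per downloaded file.

```python
import ipaddress


def first_instances(per_file_ips):
    """Merge per-file IP lists, keeping each address's first occurrence.

    Also drops unspecified (0.0.0.0, ::) and loopback (127.x.x.x, ::1)
    addresses as a final safety net, plus anything ipaddress rejects.
    """
    seen = set()
    merged = []
    for ips in per_file_ips:
        for raw in ips:
            try:
                ip = ipaddress.ip_address(raw)
            except ValueError:
                continue  # not a valid address (e.g. leading-zero octets)
            if ip.is_unspecified or ip.is_loopback:
                continue
            # str() normalizes notation, so 2001:0db8::0001 and
            # 2001:db8::1 count as the same address.
            key = str(ip)
            if key not in seen:
                seen.add(key)
                merged.append(key)
    return merged
```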

Refining the Process

After proving out the script, I went back to Pi-Hole and cleaned up the descriptions to provide better context and help sort things for future searches.

Once that was done, I waited for a low-traffic window to force Pi-Hole to update the blocklists. The update flags lists with non-domain entries, which gave me a way to narrow the block lists in my scripts to those containing IP addresses. Most blocklists contain only domain entries, so they don't need to be scraped at all, saving processing power and time.

The upside was that only a few lists had non-domain entries, and three of them displayed obvious IP addresses. That left a total of five lists to scrape for IPs, so I made a copy of the script, inserted each list's URL individually, and scraped them.

After each run, I commented out that block list's URL and added a comment noting how many IP addresses it contained. To my surprise, EasyList had well over a hundred entries, which hadn't been apparent.

As a result, I only needed three alias categories:

- Ads + Tracking

- Crypto

- Malware

Validating Some Results

Having run the Malware version of the script earlier, I was surprised to see so few results from the malware-related blocklists during the update. So I reran the script, commenting out one list at a time to find out which lists contained IP addresses.
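Instead of commenting lists in and out between runs, a single pass can tally matches per list. A minimal sketch, assuming the downloaded text for each URL has already been collected into a dict (for example with the script's `download_file` function); `count_ips_per_list` and `report` are hypothetical names.

```python
import re
from collections import Counter

# Same IPv4/IPv6 pattern as the scripts above.
IP_REGEX = re.compile(
    r"\b(?:(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.){3}"
    r"(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\b"
    r"|\b(?:[A-Fa-f0-9]{1,4}:){7}[A-Fa-f0-9]{1,4}\b"
)
EXCLUDED = {"0.0.0.0", "127.0.0.1", "::"}


def count_ips_per_list(contents):
    """Map each block-list URL to its number of distinct, non-excluded matches.

    `contents` is assumed to be {url: downloaded_text}.
    """
    counts = Counter()
    for url, text in contents.items():
        found = {ip for ip in IP_REGEX.findall(text) if ip not in EXCLUDED}
        counts[url] = len(found)
    return counts


def report(contents):
    """Print lists from most to fewest IPs, so zero-IP lists stand out."""
    for url, n in count_ips_per_list(contents).most_common():
        print(f"{n:6d}  {url}")
```

Because every URL gets an entry, even a zero, the report also shows which lists can be dropped from scraping entirely.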

Examining those blocklists revealed that the IPs were coming from domain entries with IP addresses embedded in them.

Running the IP address through VirusTotal revealed that it was a Google Cloud IP. How exactly it was involved in malware distribution was unclear, but the IP address itself was given a clean bill of health.

After this false positive, I decided to check the results from other blocklists. I started with the Tencent blocklist, since it had a number of non-domain entries. I got a list of IP addresses... and none of them were in the actual blocklist. I'm not sure how or why these were generated: I can find various numbers from those IPs in the list, but not the actual IP addresses.

A second blocklist had this same issue, so I went back to Copilot and asked it to revise the code to include what line each IP address was found on:

Sure, here's a revised version of your Python code. This version logs the specific lines where each IP address was found:

```python
import re
import logging

logging.basicConfig(filename='ip_log.log', level=logging.INFO)

def extract_ips(text):
    """Extracts IPv4 and IPv6 addresses from the given text, excluding specified ones."""
    ip_regex = r"\b(?:(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\.){3}(?:25[0-5]|2[0-4][0-9]|[01]?[0-9][0-9]?)\b|\b(?:[A-Fa-f0-9]{1,4}:){7}[A-Fa-f0-9]{1,4}\b"
    ip_pattern = re.compile(ip_regex)

    # Find all IP addresses
    ips = []
    for line_num, line in enumerate(text.split('\n'), start=1):
        found_ips = ip_pattern.findall(line)
        for ip in found_ips:
            ips.append((ip, line_num))

    # Filter out specified IPs
    excluded_ips = ["127.0.0.1", "0.0.0.0"]
    filtered_ips = [(ip, line_num) for ip, line_num in ips if ip not in excluded_ips]

    return filtered_ips

def save_ips_to_file(ips, output_file):
    """Saves the list of IP addresses to a text file."""
    try:
        with open(output_file, 'w') as f:
            for ip, line_num in ips:
                f.write(f"{ip} found at line {line_num}\n")
        logging.info(f"IPs saved to {output_file}")
    except IOError as e:
        logging.error(f"Failed to save IPs to file: {e}")

# Example usage:
text = """Sample text with IP addresses.
192.168.1.1 is one example.
Another example is 2001:0db8:85a3:0000:0000:8a2e:0370:7334.
Let's exclude 127.0.0.1 and 0.0.0.0.
"""
ips = extract_ips(text)
save_ips_to_file(ips, 'output_ips.txt')
```

In this revised code:

- The `extract_ips` function now returns a list of tuples, where each tuple contains an IP address and the line number it was found on.

- The `save_ips_to_file` function writes the IP addresses and their corresponding line numbers to the output file.

- Each line where an IP address was found is written to the log.

Oddly enough, this did provide line numbers for the IPs, but those lines in the files on GitHub were domains, not IP addresses. Even more confusing, running the revised script on the EasyList blocklist returned IPs that actually are specified in the list.

Diagnosing why this happens is beyond my programming knowledge, so I'll upload a version of the script with the logging so someone else can use it to solve the problem.
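One untested hypothesis for whoever picks this up: the pattern's `\b` anchors treat dots and hyphens as word boundaries, so a run of four dotted numbers buried inside a longer hostname still matches as an "IP". A stricter alternative, sketched here rather than offered as a confirmed fix, is to split each line on whitespace and accept only tokens that Python's `ipaddress` module parses as a complete address; that also handles hosts-style lines like `127.0.0.1 173.208.96.42` directly. The function name and the crude Adblock-style clean-up are my own assumptions.

```python
import ipaddress


def extract_whole_token_ips(text):
    """Return (ip, line_number) pairs where a whole token is a valid address.

    Hypothetical alternative to the regex approach: a dotted number run
    buried inside a hostname never parses as an address, so it is skipped.
    """
    found = []
    # split("\n") keeps line numbers aligned with how GitHub counts lines.
    for line_num, line in enumerate(text.split("\n"), start=1):
        stripped = line.split("#", 1)[0].strip()  # drop hosts-style comments
        if not stripped or stripped.startswith("!"):
            continue  # blank line or Adblock-style comment
        for token in stripped.split():
            # ASSUMPTION: crude clean-up for entries such as ||192.0.2.1^
            candidate = token.split("$", 1)[0].strip("|^")
            try:
                ip = ipaddress.ip_address(candidate)
            except ValueError:
                continue  # a hostname, or part of one
            if ip.is_unspecified or ip.is_loopback:
                continue  # sink targets like 0.0.0.0 and 127.0.0.1
            found.append((str(ip), line_num))
    return found
```

For comparison, the regex from the scripts above returns `10.20.30.40` for a made-up line like `0.0.0.0 cdn-10.20.30.40-edge.example.com`, while this version returns nothing for it. The trade-off is that any entry format the token clean-up doesn't anticipate gets silently skipped, so diffing both approaches' output on the real lists would be worthwhile before trusting either.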

Next Steps

Creating a GitHub repo, obviously!

Making the code open source is an obvious decision. First of all, I've already posted tons of it here. Second, I'm more of a coder than a programmer - I can write code and I can definitely think through the logic, but I don't have the skill or experience to be called a programmer.

So if someone out there can figure out why the script is producing IP addresses out of nowhere and solve the problem, that's fine by me.

Additionally, I'll have to go back to OPNsense and create the full set of Aliases there, since user-created Aliases get auto-generated identifiers like "66ea4c00-feec-469e-9a82-bf5cba0405a9". Those are UUIDs rather than hashes; the format matches a random (version 4) UUID.